Driver Head Tracking Based on Video Camera & AI Algorithms

In recent years, key players in the automotive and transportation markets have been developing systems for real-time monitoring and analysis: they track the physical condition and concentration of drivers to prevent accidents. Dangerous states of drivers or personnel at work can be identified by several indicators: blink rate, body movements, and head position.

Not so long ago, orientation sensors such as gyroscopes were used to track the head position of a car driver or production personnel. Now, AI algorithms allow us to solve this problem with just a dash camera and a computer vision system. Such a system works autonomously, without an internet connection, as AI on Edge. In this article, we explain how we develop this type of hardware and software solution for our customers.

In the video: the leader of our Automotive Unit is testing the system.

We have developed a computer vision system that estimates head position and analyses driver fatigue, commissioned by a European engineering company. Its purpose is to reduce the risk of car accidents, chronic fatigue, and possible neck pain in truck drivers.

These face tracking systems are gaining popularity and will soon be regulated by law. In the EU, all car models produced must be equipped with driver monitoring systems (DMS) from 2025, which will help reduce the risk of accidents.

We built this solution on our off-the-shelf module based on Ambarella chips, which we had already used in another project to develop a camera with a facial recognition function.

In another project, we tracked the orientation of the driver's head toward various objects in the car, such as the windshield. This data helped us to assess the driver's concentration on the road and various anomalies: sudden malaise or external interference.

One of the computer vision subtasks that has to be solved in such projects is identifying key points on the human face: eyes, nose, ears, etc.

To analyse the head position, we need at least 6 key points in the 3D facial image.

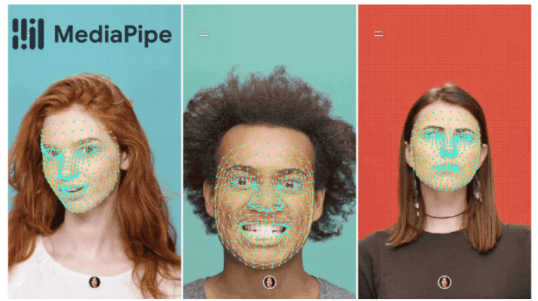

Current models available on the market are based on ultra-precise neural networks and identify a large number of unique points with high accuracy. For example, the MediaPipe Face Mesh solution "recognises" 468 points and uses them to create a 3D face map.

MediaPipe Face Mesh with up to 468 points to convert face image to 3D model. Source: github.com

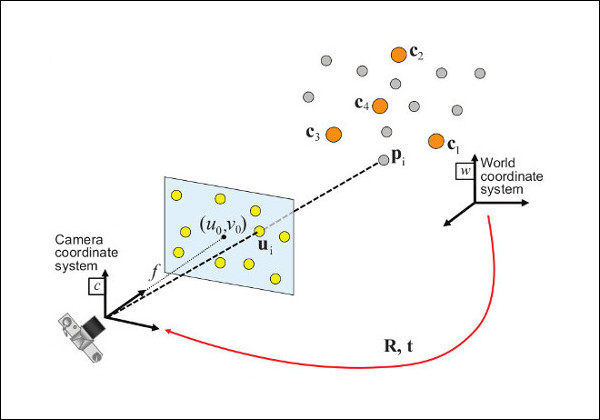

Knowing at least 6 points and some other parameters, such as camera resolution, it is possible to apply the PnP (Perspective-n-Point) algorithm. This algorithm relates the 3D points of a real-world object to their 2D projections in the picture, which makes it possible to track the position and orientation of the object from a set of points.

The PnP algorithm comes down to solving matrix equations in which the unknowns are the rotation matrix and the translation vector between the world coordinate system and the camera coordinate system.
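For reference, the relation that PnP solvers usually start from is the pinhole projection equation. The notation below is the common textbook form, not taken from our implementation: $(X, Y, Z)$ is a key point of the 3D face model in world coordinates, $(u, v)$ is its detected position in the image, $K$ holds the camera parameters (focal lengths $f_x, f_y$ and optical center $c_x, c_y$), $s$ is a scale factor, and $R$ and $t$ are the unknown rotation and translation.

$$
s \begin{bmatrix} u \\ v \\ 1 \end{bmatrix}
= K \, [\, R \mid t \,]
\begin{bmatrix} X \\ Y \\ Z \\ 1 \end{bmatrix},
\qquad
K = \begin{bmatrix} f_x & 0 & c_x \\ 0 & f_y & c_y \\ 0 & 0 & 1 \end{bmatrix}
$$

Each 2D-3D point pair contributes two equations. Rotation and translation together have six degrees of freedom, so several point pairs are needed to determine them.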

The algorithm can be divided into two stages. In the first one, using camera parameters such as the optical center and focal length, we restore the 3D coordinates of the key points detected in the image.
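To illustrate how the optical center and focal length are used, here is a minimal Python sketch. It makes two assumptions that are not part of the system described in this article: the camera is not calibrated, so the focal length in pixels is approximated by the image width and the optical center by the image center, and lens distortion is ignored. The function names `approx_intrinsics` and `pixel_to_ray` are hypothetical. Note that a single pixel only defines a viewing ray; the depth along that ray is resolved later with the help of the 3D face model.

```python
def approx_intrinsics(width, height):
    """Return (fx, fy, cx, cy) guessed from the image size.

    Assumption: focal length in pixels ~ image width, optical center at the
    image center. A calibrated camera would supply measured values instead.
    """
    fx = fy = float(width)
    return fx, fy, width / 2.0, height / 2.0


def pixel_to_ray(u, v, intrinsics):
    """Convert a pixel to a viewing ray (x, y, 1) in camera coordinates."""
    fx, fy, cx, cy = intrinsics
    # Shift by the optical center, then scale by the focal length.
    return (u - cx) / fx, (v - cy) / fy, 1.0


intrinsics = approx_intrinsics(1280, 720)
# A key point at the image center lies on the optical axis.
center_ray = pixel_to_ray(640, 360, intrinsics)
# A key point in the bottom-right corner of the frame.
corner_ray = pixel_to_ray(1280, 720, intrinsics)
```

In practice, calibrated camera parameters give noticeably better pose estimates than this approximation, especially with wide-angle dash camera lenses.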

In the second stage, the rotation matrix and translation vector are determined from these coordinates and a given 3D face model.

There are other implementations of the PnP algorithm that use an optimisation approach, but in both cases the result is the rotation matrix and translation vector between the world coordinate system and the camera coordinate system. Knowing them, it is possible to determine the orientation of the driver's head relative to the camera.
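The last step, going from a rotation matrix to a head orientation, can be sketched in plain Python. This is an illustration under stated assumptions rather than our production code: the camera axes are taken as x to the right, y down and z forward, the rotation is decomposed as R = Rz(roll) · Ry(yaw) · Rx(pitch), and the helper names `rotation_to_euler_deg` and `yaw_matrix` are hypothetical. If OpenCV is used for the PnP step, `cv2.solvePnP` returns a rotation vector that `cv2.Rodrigues` converts into such a 3x3 matrix.

```python
import math


def rotation_to_euler_deg(R):
    """Return (pitch, yaw, roll) in degrees from a 3x3 rotation matrix.

    Assumed convention: R = Rz(roll) @ Ry(yaw) @ Rx(pitch).
    """
    sy = math.hypot(R[0][0], R[1][0])  # equals |cos(yaw)|
    if sy > 1e-6:
        pitch = math.atan2(R[2][1], R[2][2])
        yaw = math.atan2(-R[2][0], sy)
        roll = math.atan2(R[1][0], R[0][0])
    else:
        # Gimbal lock (yaw close to +/-90 degrees): roll is fixed to zero.
        pitch = math.atan2(-R[1][2], R[1][1])
        yaw = math.atan2(-R[2][0], sy)
        roll = 0.0
    return tuple(math.degrees(a) for a in (pitch, yaw, roll))


def yaw_matrix(deg):
    """Build a rotation about the y axis, used here only as test input."""
    a = math.radians(deg)
    c, s = math.cos(a), math.sin(a)
    return [[c, 0.0, s], [0.0, 1.0, 0.0], [-s, 0.0, c]]


# A head turned 30 degrees about the vertical axis.
pitch, yaw, roll = rotation_to_euler_deg(yaw_matrix(30.0))
```

Comparing yaw and pitch against thresholds chosen for a specific cabin layout is one simple way to decide whether the driver is facing the road; the thresholds themselves depend on where the camera is mounted.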

The principle of the PnP algorithm is shown in the figure below:

The principle of the PnP algorithm. Source: OpenCV.org

Conclusion

Our software algorithm for estimating the driver's head position is a turnkey solution that can be used not only in driver alertness monitoring systems but also in retail and industry, wherever you need to monitor human behaviour to ensure efficiency and safety. It works as AI on Edge, that is, autonomously, with all data processed without a connection to the Internet.

Our engineering team accelerates the implementation of such AI solutions based on hardware platforms from the world's leading vendors. With our experience working with chips from Renesas, Ambarella, Jetson, and Google Coral, we can provide autonomous operation of face recognition APIs and other AI algorithms on almost any platform for our customers.

If you want to discuss the possibilities of implementing new AI-based features in your monitoring and security systems, feel free to write to us. We would be happy to share our experience.