When Machines Respond to Thought: Neuro-Interface Embedded Modules in Industrial Automation

For decades, the idea of controlling machines directly with the human brain belonged to science fiction. Today, neuro-interfaces — once limited to medical research and assistive technologies — are moving into industrial environments.

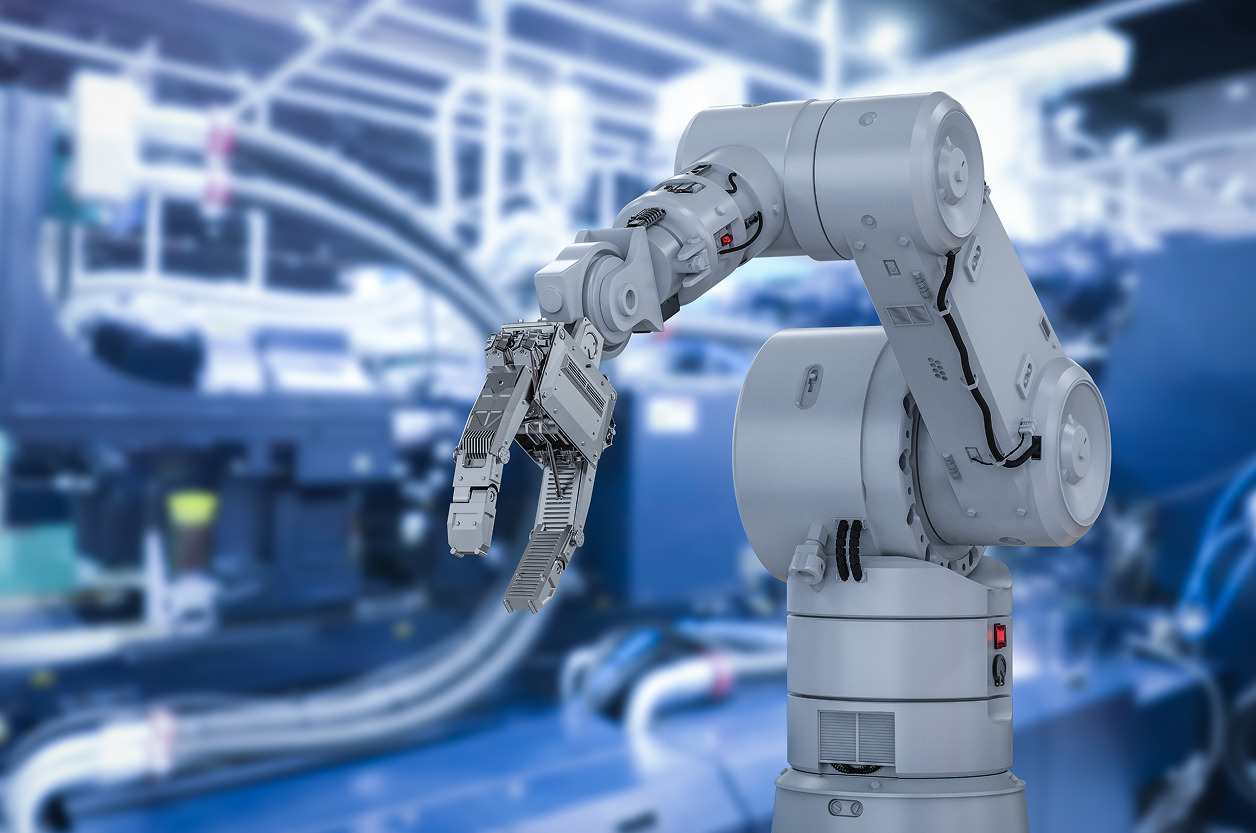

From robotic manipulators that respond to operator intent to exoskeletons assisting factory workers, the boundary between the nervous system and embedded electronics is becoming increasingly thin.

The enabler of this transformation is a new generation of neuro-interface embedded modules — compact hardware units capable of capturing, decoding, and acting upon neural signals in real time. These modules combine the precision of analog front-end design, the power of AI inference at the edge, and the robustness required for industrial-grade operation.

Let’s explore how this emerging field is changing human-machine interaction, what technologies make it possible, and how engineers are integrating brain signals into automation workflows.

The evolution of brain-machine interfaces

The basic principle of a brain-machine interface (BMI) is simple: capture brain activity, decode its meaning, and translate it into a machine command. But executing this in real time, safely, and reliably — especially in industrial settings — is far from simple.

Early neuro-interfaces focused on medical applications, such as restoring communication for paralyzed patients or controlling prosthetics. These systems were large, slow, and often invasive. The industrial sector, however, requires something very different: compact, wearable, and robust modules that can integrate seamlessly with existing control systems and communicate via standard industrial protocols.

The convergence of low-noise analog front ends, edge AI processors, and embedded wireless systems is making this possible. Neuro-interface modules can now fit into a helmet, a headband, or even the operator’s uniform, turning human intention into control input for robots, vehicles, or assembly systems.

How neuro-interface embedded modules work

A neuro-interface embedded module typically performs five major functions:

- Signal acquisition – capturing electrical activity from the scalp (EEG) or muscles (EMG) using dry or semi-dry electrodes.

- Signal conditioning – amplifying microvolt-level signals, filtering out noise, and digitizing them with high-precision ADCs.

- Feature extraction – identifying characteristic neural patterns (e.g., event-related potentials or frequency bands associated with focus, stress, or movement intention).

- Classification and interpretation – running AI or ML algorithms that map these patterns to specific actions or control states.

- Output interfacing – sending commands via Ethernet, CAN, UART, or wireless protocols to connected PLCs, robots, or embedded controllers.
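The five functions above can be chained end to end in a few lines. This is a minimal sketch under stated assumptions, not a reference design: a single EEG channel sampled at 250 Hz, alpha (8 to 12 Hz) and beta (13 to 30 Hz) band power as the only features, and a fixed ratio threshold standing in for a trained classifier. The `Command` enum and the one-byte output frame are hypothetical, not any vendor's API.

```python
import math
from enum import Enum


class Command(Enum):
    """Hypothetical command set; a real module defines its own mapping."""
    IDLE = 0
    ACTIVATE = 1


def condition(samples):
    """Conditioning stand-in: remove the DC offset from one digitized window.
    (Amplification and A/D conversion are assumed to happen in hardware.)"""
    mean = sum(samples) / len(samples)
    return [s - mean for s in samples]


def band_power(samples, fs, f_lo, f_hi):
    """Feature extraction: mean power of the DFT bins whose frequency lies in
    [f_lo, f_hi] Hz. A naive DFT keeps the sketch dependency-free."""
    n = len(samples)
    total, bins = 0.0, 0
    for k in range(1, n // 2):
        if f_lo <= k * fs / n <= f_hi:
            re = sum(x * math.cos(2 * math.pi * k * i / n) for i, x in enumerate(samples))
            im = -sum(x * math.sin(2 * math.pi * k * i / n) for i, x in enumerate(samples))
            total += (re * re + im * im) / n
            bins += 1
    return total / bins if bins else 0.0


def classify(alpha, beta, threshold=2.0):
    """Classification stand-in: a fixed beta/alpha ratio threshold instead of a
    trained model (assumption: high relative beta means 'activate')."""
    ratio = beta / alpha if alpha > 0 else float("inf")
    return Command.ACTIVATE if ratio > threshold else Command.IDLE


def to_frame(cmd):
    """Output stand-in: a one-byte payload for a UART or fieldbus link."""
    return bytes([cmd.value])


def process_window(raw, fs):
    """Run one window through all stages; acquisition supplies `raw`."""
    x = condition(raw)
    alpha = band_power(x, fs, 8, 12)
    beta = band_power(x, fs, 13, 30)
    return to_frame(classify(alpha, beta))
```

For example, one second of a synthetic 20 Hz sine at 250 Hz yields `b'\x01'`, while a 10 Hz sine yields `b'\x00'`. A production module would replace the naive DFT with an FFT or a DSP block and the threshold with a trained model; the point here is only the order of the stages.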

Industrial-grade neuro-modules are typically built around specialized SoCs or FPGAs that combine low-latency digital signal processing with neural inference cores, enabling local decision-making without relying on cloud processing.

The role of embedded hardware in neuro-interfaces

In industrial environments, latency, determinism, and reliability are critical. Unlike research-grade systems that can tolerate delays, an industrial neuro-interface must respond with low and predictable latency, often within tens of milliseconds, to feel natural and safe.

This is where embedded hardware makes the difference. By processing neural signals directly at the edge, these modules:

- eliminate dependence on external computers,

- minimize communication lag,

- and maintain full functionality even in isolated networks.

FPGAs and specialized microcontrollers handle real-time filtering and feature extraction, while embedded AI accelerators perform inference. The goal is direct human control over machines with reaction times approaching reflex levels.
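Whether a design actually lands "within tens of milliseconds" is largely arithmetic over its stages. The sketch below adds up a conservative worst-case bound; the stage breakdown and every number in the example are illustrative assumptions, not measurements from a real module.

```python
from dataclasses import dataclass


@dataclass
class LatencyBudget:
    """Illustrative per-stage timings for one neuro-interface control path."""
    fs_hz: float          # sampling rate of the acquisition front end
    window_samples: int   # samples per analysis window
    hop_samples: int      # samples between successive decisions
    processing_ms: float  # filtering + feature extraction + inference
    transport_ms: float   # delivery over the fieldbus or wireless link

    def window_ms(self) -> float:
        return 1000.0 * self.window_samples / self.fs_hz

    def hop_ms(self) -> float:
        return 1000.0 * self.hop_samples / self.fs_hz

    def worst_case_ms(self) -> float:
        # Conservative bound: an event that begins just after a window starts
        # waits up to one hop for the next window, which must then fill completely.
        return self.window_ms() + self.hop_ms() + self.processing_ms + self.transport_ms


# Hypothetical numbers: a 1 kHz EMG path vs. a 250 Hz EEG path with a 1 s window.
emg = LatencyBudget(fs_hz=1000, window_samples=50, hop_samples=10, processing_ms=5.0, transport_ms=1.0)
eeg = LatencyBudget(fs_hz=250, window_samples=250, hop_samples=25, processing_ms=5.0, transport_ms=1.0)
print(emg.worst_case_ms())  # 66.0
print(eeg.worst_case_ms())  # 1106.0
```

With these assumed numbers, the short EMG window stays in the tens-of-milliseconds range, while the one-second EEG window alone exceeds it by more than an order of magnitude, which is why analysis-window length usually dominates the budget.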

In some systems, neuro-modules are even integrated into the machine’s existing control hardware — creating closed loops where the operator’s intention directly modifies motion trajectories, tool pressure, or robotic assistance levels.

Practical use cases in industrial automation

1. Robotic co-workers controlled by intention

Operators can guide robotic arms or drones through subtle neural or muscular signals — for instance, focusing attention on an object to trigger pick-and-place sequences.

In hazardous zones, this enables hands-free control while keeping humans at a safe distance.
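The article does not name a paradigm for "focusing attention on an object", but one widely used approach in BCI research is the steady-state visually evoked potential (SSVEP): each selectable target flickers at its own frequency, and the frequency that dominates the operator's occipital EEG indicates which target they are looking at. A minimal sketch using the Goertzel algorithm follows; the target names, flicker frequencies, and the margin rule are hypothetical.

```python
import math


def goertzel_power(samples, fs, freq):
    """Squared magnitude of the DFT bin nearest `freq` Hz (Goertzel algorithm)."""
    n = len(samples)
    k = round(n * freq / fs)
    coeff = 2.0 * math.cos(2.0 * math.pi * k / n)
    s_prev, s_prev2 = 0.0, 0.0
    for x in samples:
        s = x + coeff * s_prev - s_prev2
        s_prev2, s_prev = s_prev, s
    return s_prev ** 2 + s_prev2 ** 2 - coeff * s_prev * s_prev2


def select_target(samples, fs, targets, margin=2.0):
    """Pick the target whose flicker frequency carries the most EEG power.

    `targets` maps a target name to its flicker frequency in Hz (needs >= 2).
    Returns None unless the winner beats the runner-up by `margin`, so an
    ambiguous window triggers nothing.
    """
    scored = sorted(
        ((goertzel_power(samples, fs, f), name) for name, f in targets.items()),
        reverse=True,
    )
    (best_power, best_name), (second_power, _) = scored[0], scored[1]
    return best_name if best_power > margin * second_power else None
```

With one second of EEG at 250 Hz and hypothetical targets such as `{"tray_left": 8.0, "tray_right": 10.0, "conveyor": 12.0}`, a window dominated by a 12 Hz response would select `"conveyor"`. The margin rule biases the system toward doing nothing when the evidence is unclear.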

2. Adaptive exoskeletons and wearable robotics

Neuro-modules embedded in exoskeletons can detect a worker’s intention to move before the movement begins, adjusting motor torque and support dynamically. This can reduce fatigue, increase lifting efficiency, and lower injury risk.
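Exoskeleton controllers differ widely, but one simple scheme, assumed here for illustration, ties support to muscle activity: rectify the EMG, smooth it into an envelope, and map the envelope above a resting level onto a clamped assist torque. Because muscle electrical activity precedes force production by a short electromechanical delay, even this crude mapping can start assisting as a lift begins. Every gain, limit, and smoothing constant in the hypothetical `AssistController` is a placeholder, not a validated setting.

```python
class AssistController:
    """Maps a raw EMG stream to an assist-torque setpoint (illustrative only)."""

    def __init__(self, alpha=0.05, rest_level=0.02, gain_nm=200.0, max_torque_nm=30.0):
        self.alpha = alpha                  # smoothing factor of the envelope (0..1)
        self.rest_level = rest_level        # envelope below this means "no intent"
        self.gain_nm = gain_nm              # Nm of assist per unit envelope above rest
        self.max_torque_nm = max_torque_nm  # hard ceiling on commanded torque
        self.envelope = 0.0

    def update(self, emg_sample: float) -> float:
        # Full-wave rectification followed by a first-order low-pass (EMA).
        self.envelope += self.alpha * (abs(emg_sample) - self.envelope)
        drive = max(0.0, self.envelope - self.rest_level)
        # Clamp so the motor never exceeds its configured assist limit.
        return min(self.max_torque_nm, self.gain_nm * drive)
```

Real controllers add per-user calibration, onset detection, and certified torque limits; the clamp here only illustrates where such a limit sits in the loop.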

3. Cognitive workload monitoring in control rooms

Brain-signal monitoring can assess operator fatigue or stress levels in real time. Embedded neuro-sensors detect when attention drops and automatically trigger safety protocols or visual alerts.
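One index used in the attention-monitoring research literature is the engagement ratio β/(α+θ), computed from EEG band powers. The sketch below assumes band powers are already available per analysis window (for example from the feature-extraction stage), smooths the index, and raises an alert only after it stays below a fraction of the operator's own baseline for several consecutive windows. The 0.7 fraction, the 5-window persistence, and the choice of index are assumptions, not the method of any specific product.

```python
from collections import deque


class AttentionMonitor:
    """Flags sustained drops of the beta / (alpha + theta) engagement index."""

    def __init__(self, baseline_index, drop_fraction=0.7, persist_windows=5, smooth=4):
        self.threshold = drop_fraction * baseline_index  # alert level vs. personal baseline
        self.persist_windows = persist_windows           # consecutive low windows required
        self.recent = deque(maxlen=smooth)               # moving-average buffer
        self.low_count = 0

    def update(self, theta, alpha, beta):
        """Feed one window's band powers; return True while an alert should be active."""
        self.recent.append(beta / max(alpha + theta, 1e-12))
        index = sum(self.recent) / len(self.recent)
        self.low_count = self.low_count + 1 if index < self.threshold else 0
        return self.low_count >= self.persist_windows
```

Requiring persistence trades a few windows of delay for far fewer alerts triggered by a single blink or movement artifact.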

4. Hands-free maintenance and inspection

Neural intent recognition enables technicians to interact with augmented reality interfaces or robotic assistants without physical controllers — just by focusing attention or generating simple mental commands.

5. Human-robot collaborative welding, assembly, and machining

In precision processes where timing and motion must synchronize with human decisions, neuro-interfaces can predict operator intent and pre-align robotic motion accordingly.

Integration with existing automation infrastructure

For industrial deployment, neuro-interface modules must speak the same language as the machines they control. That’s why most designs integrate industrial communication stacks such as:

- EtherCAT or PROFINET for high-speed control loops;

- CAN and RS-485 for legacy integration;

- wireless BLE or Wi-Fi for wearable modules;

- and OPC UA for upper-level data analytics and monitoring.

These interfaces allow neuro-modules to plug into PLCs, embedded gateways, or motion controllers with minimal modification — essentially turning the human brain into an additional network node.

Some vendors are now developing neuro-gateways — embedded bridges that translate neural command streams into standardized automation protocols, so existing robotic systems can interpret them like any other control input.
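A neuro-gateway ultimately has to fit a decoded intent into whatever payload the target bus carries. The sketch below packs one decision into a classic 8-byte CAN data field using Python's standard `struct` module. The field layout (command code, confidence, sequence counter, operator ID, checksum) is entirely hypothetical; a real deployment would follow the process-data mapping defined for its fieldbus (for example a CANopen PDO), not this layout.

```python
import struct
from dataclasses import dataclass

# Hypothetical 8-byte layout (little-endian), sized for one classic CAN data field:
#   B  command code        (0 = idle, 1 = start, 2 = stop, ...)
#   B  confidence          (0..255, scaled from 0.0..1.0)
#   H  sequence counter    (wraps at 65536 so the receiver can spot lost frames)
#   H  operator profile ID
#   H  checksum            (byte sum of the first six bytes)
FRAME = struct.Struct("<BBHHH")


@dataclass
class IntentMessage:
    command: int
    confidence: float
    sequence: int
    operator_id: int


def encode(msg: IntentMessage) -> bytes:
    conf = max(0, min(255, round(msg.confidence * 255)))
    seq = msg.sequence % 65536
    head = struct.pack("<BBHH", msg.command, conf, seq, msg.operator_id)
    return FRAME.pack(msg.command, conf, seq, msg.operator_id, sum(head))


def decode(frame: bytes) -> IntentMessage:
    command, conf, seq, operator_id, checksum = FRAME.unpack(frame)
    if sum(frame[:6]) != checksum:
        # A corrupted frame must never reach the motion controller.
        raise ValueError("checksum mismatch: frame rejected")
    return IntentMessage(command, conf / 255, seq, operator_id)
```

A simple byte-sum checksum is shown only for brevity; CAN already carries a CRC at the link layer, so an application-level check like this mainly guards the gateway's own buffering and translation path.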

AI and adaptive signal decoding at the edge

One of the biggest breakthroughs in modern neuro-interfaces is adaptive decoding — the ability of AI algorithms to continuously learn and refine their understanding of a user’s neural patterns.

Embedded AI cores can run lightweight neural networks, including recurrent models, that recognize patterns of intention, stress, or motion preparation.

These models adapt over time, compensating for electrode drift, signal noise, or changes in cognitive state.
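Full online retraining is beyond a short example, but the simplest form of the drift compensation described above can be sketched: track each feature's mean and variance with exponential forgetting and feed the classifier z-scores instead of raw values, so a slow baseline shift from electrode drift is gradually absorbed. The forgetting factor is an assumed tuning value, and this normalization is a stand-in for, not a description of, any particular adaptive decoder.

```python
import math


class AdaptiveNormalizer:
    """Exponentially weighted running z-score for one feature stream."""

    def __init__(self, forgetting=0.01, initial_mean=0.0, initial_var=1.0):
        self.lam = forgetting      # weight of the newest sample (0 < lam <= 1)
        self.mean = initial_mean
        self.var = initial_var

    def update(self, x: float) -> float:
        # Standardize against the current estimate, then adapt the estimate.
        z = (x - self.mean) / math.sqrt(self.var + 1e-12)
        delta = x - self.mean
        self.mean += self.lam * delta
        # Incremental exponentially weighted variance update.
        self.var = (1 - self.lam) * (self.var + self.lam * delta * delta)
        return z
```

A sudden shift first produces a large z-score (useful as a "check the electrodes" signal), then fades as the estimate catches up; a smaller forgetting factor adapts more slowly but is less disturbed by short artifacts.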

The result is a neuro-interface that feels more natural the longer it’s used — improving both accuracy and user comfort.

In multi-user industrial setups, adaptive modules can even switch profiles automatically, recognizing individual operators and loading personalized decoding parameters.
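In its simplest form, profile switching is a lookup of per-operator decoding parameters with a fallback for unknown operators. The sketch below assumes the operator has already been identified (for example by badge or login); identifying operators from the neural signals themselves, which the text also allows for, is not shown. `DecoderProfile`, its fields, and the default values are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DecoderProfile:
    threshold: float       # decision threshold used by the classifier
    channel_gains: tuple   # per-electrode gain corrections
    forgetting: float      # adaptation rate for drift compensation


DEFAULT_PROFILE = DecoderProfile(threshold=2.0, channel_gains=(1.0, 1.0, 1.0, 1.0), forgetting=0.01)


class ProfileStore:
    """In-memory, on-device store of calibrated per-operator profiles."""

    def __init__(self):
        self._profiles = {}

    def save(self, operator_id: str, profile: DecoderProfile) -> None:
        self._profiles[operator_id] = profile

    def load(self, operator_id: str) -> DecoderProfile:
        # Unknown operators get generic defaults rather than another user's tuning.
        return self._profiles.get(operator_id, DEFAULT_PROFILE)
```

Keeping the store on the module itself also fits the data-privacy point below: calibrated neural parameters never need to leave the device.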

Safety, ethics, and reliability considerations

Brain-machine interfaces in industrial contexts raise complex technical and ethical questions.

- Safety — neuro-modules must include hardware failsafes that prevent unintended machine motion due to signal noise or cognitive distraction.

- Data privacy — neural data is deeply personal; systems must ensure local processing and strict data isolation.

- Reliability — electrodes and amplifiers must withstand long operating hours, sweat, heat, and vibration without degrading signal quality.

- Certification — regulatory frameworks (IEC 61508, ISO 13849, and future neurotech standards) will define how brain-controlled devices enter certified automation environments.

In high-reliability systems, engineers often deploy redundant control validation — the machine executes a neural command only when it matches contextual cues (like gaze direction or muscle activity), reducing false positives.
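The redundant validation described above can be sketched as a gate between the decoder and the actuator: a command passes only if the classifier confidence clears a threshold, the same command has been decoded for several consecutive windows, and an independent contextual cue agrees. The threshold, the dwell count, and the boolean `context_ok` input (standing in for a gaze or EMG check) are assumptions; in a certified system this logic would sit alongside, not replace, the hardware safety chain.

```python
class CommandGate:
    """Releases a decoded command only after confidence, dwell and context checks."""

    def __init__(self, min_confidence=0.8, dwell_windows=3):
        self.min_confidence = min_confidence
        self.dwell_windows = dwell_windows
        self._candidate = None
        self._count = 0

    def update(self, command, confidence, context_ok):
        """Return the command to execute this window, or None to hold position."""
        if confidence < self.min_confidence or not context_ok:
            # Any failed check resets the dwell counter: fail toward "do nothing".
            self._candidate, self._count = None, 0
            return None
        if command == self._candidate:
            self._count += 1
        else:
            self._candidate, self._count = command, 1
        return command if self._count >= self.dwell_windows else None
```

The dwell requirement adds a few windows of latency, so its length has to be weighed against the latency budget discussed earlier.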

Engineering challenges ahead

Building industrial neuro-interface hardware remains a multidisciplinary challenge that spans neuroscience, embedded design, and AI. Among the main engineering barriers:

- Signal stability: EEG and EMG signals are weak and easily corrupted by electrical noise from nearby machinery.

- Electrode miniaturization: Developing dry, maintenance-free sensors that remain comfortable for long shifts.

- Edge AI optimization: Running neural inference within tight power budgets (<1 W) on embedded processors.

- System interoperability: Seamless integration into multi-vendor industrial ecosystems without custom gateways.

- User adaptation: Training operators to produce consistent neural patterns without excessive cognitive strain.
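For the signal-stability challenge, one common software countermeasure against one part of the noise, power-line interference at 50 Hz or 60 Hz, is a notch filter. Below is a biquad notch using the coefficient formulas from Robert Bristow-Johnson's Audio EQ Cookbook; the Q value is an assumed starting point, and broadband machine noise still has to be handled by shielding and analog front-end design.

```python
import math


class NotchFilter:
    """Second-order IIR notch (biquad), coefficients from the RBJ Audio EQ Cookbook."""

    def __init__(self, fs_hz, notch_hz=50.0, q=30.0):
        w0 = 2.0 * math.pi * notch_hz / fs_hz
        alpha = math.sin(w0) / (2.0 * q)
        a0 = 1.0 + alpha
        # Feedforward (b0, b1, b2) and feedback (a1, a2), all normalized by a0.
        self.b = (1.0 / a0, -2.0 * math.cos(w0) / a0, 1.0 / a0)
        self.a = (-2.0 * math.cos(w0) / a0, (1.0 - alpha) / a0)
        self.z1 = self.z2 = 0.0

    def process(self, x):
        # Transposed direct form II: two state variables per biquad.
        b0, b1, b2 = self.b
        a1, a2 = self.a
        y = b0 * x + self.z1
        self.z1 = b1 * x - a1 * y + self.z2
        self.z2 = b2 * x - a2 * y
        return y
```

A higher Q gives a narrower notch that removes less neighboring signal but takes longer to settle; in regions with 60 Hz mains, `notch_hz=60.0` would be used instead.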

Despite these challenges, the field is progressing rapidly. Industrial prototypes already exist that combine embedded AI boards, low-noise amplifiers, and industrial communication modules — forming the foundation of future brain-driven automation systems.

Looking toward the next decade

By 2030, neuro-interface modules are expected to evolve from experimental hardware to standardized components in advanced factories.

We’ll likely see:

- Hybrid neuro-muscular interfaces that combine brain and motion signals for redundancy and accuracy;

- Embedded neuromorphic processors that mimic brain-like computation for real-time control;

- Full integration with digital twins — where the operator’s brain state feeds into simulation models for predictive control;

- Collaborative robotics ecosystems where human intent and machine autonomy blend seamlessly.

In essence, the human nervous system will become a live part of the control architecture — not an external supervisor, but a direct participant in the production loop.

AI Overview: Neuro-Interface Embedded Modules in Industrial Automation

Neuro-interface embedded modules connect human neural activity directly with industrial machines, using edge AI and embedded electronics for real-time intent decoding and control.

Key Applications: robotic co-workers, adaptive exoskeletons, cognitive workload monitoring, AR-assisted maintenance, brain-driven precision manufacturing.

Benefits: intuitive human-machine collaboration, reduced operator fatigue, enhanced safety and productivity, real-time adaptation to human intent.

Challenges: signal stability, safety certification, data privacy, hardware miniaturization, edge AI efficiency.

Outlook: by 2030, brain-machine interfaces are expected to evolve into standard embedded modules for automation, merging neural intelligence with industrial control.

Related Terms: brain-computer interface, EEG embedded module, human-machine symbiosis, neuroadaptive robotics, cognitive automation, edge AI neurotech.
