Development of Scalable High-Performance Server System Based on CXL Technology

Project in a Nutshell

At the request of a leading provider of high-performance computing and cloud infrastructure solutions, we developed a scalable server system concept based on CXL technology. The concept targets data transfer rates of up to 800 Gbps and a storage capacity of up to 8192 TB. If implemented, this system will allow our client to establish itself as an industry leader.

Client & Challenge

Our client is a leading provider of high-performance computing and cloud infrastructure solutions, serving companies across a variety of industries, including big data analytics, scientific research, and AI solutions. Its solutions help systems process massive amounts of data and tolerate high computational loads.

The client faced a growing demand for high-speed data processing that its existing infrastructure, based on traditional PCIe connections, could not meet. The main issues were:

- bottlenecks in PCIe connections slowed down data processing;

- high costs to maintain and scale existing infrastructure;

- limited flexibility and adaptability to support different computing architectures based on CPU, GPU, and FPGA;

- data growth and the need for scalability began to lead to downtime and increased costs.

As a result, the client approached Promwad with a request to develop a CXL-based server system that would solve these problems.

Compute Express Link (CXL) is an advanced technology that improves communication between computer components and enables high-speed and coherent memory access between processors, accelerators, memory buffers, and non-volatile memory. CXL offers high bandwidth and low latency, and CXL 2.0 switches improve system efficiency by allowing multiple devices to be connected to a single host. The technology reduces power consumption by optimising memory access and resource sharing. In the context of working with different types of memory, CXL provides several benefits:

Companies can use CXL technology to create new solutions and services:

Solution

1. Concept Development

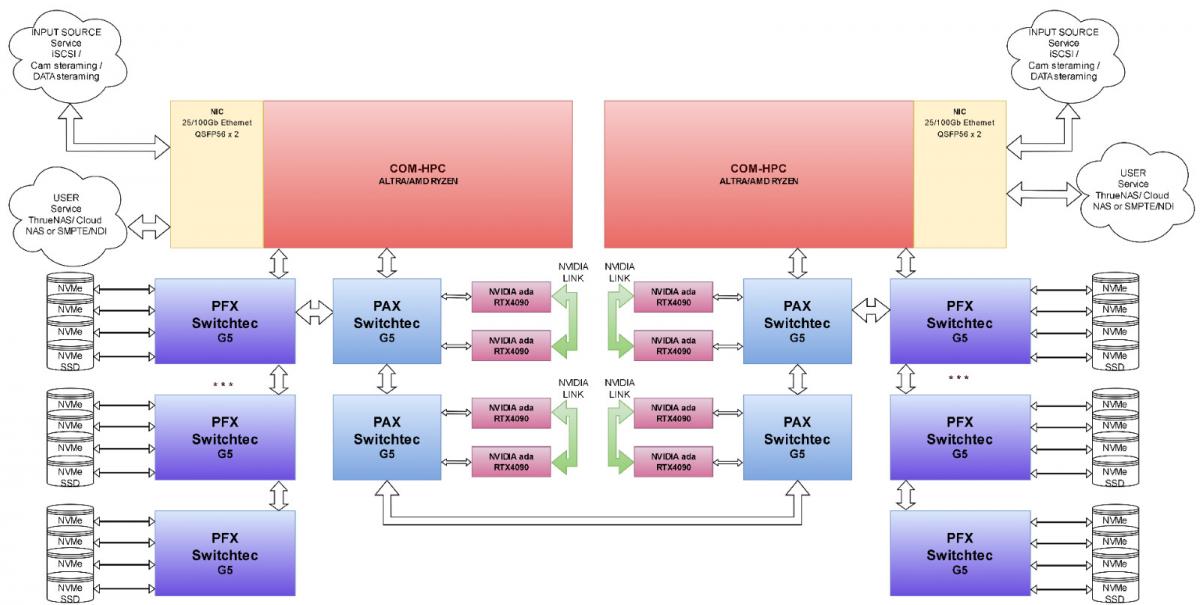

Promwad scrutinised the customer's request and proposed a solution based on COM-HPC, an open standard for embedded computing modules, and Switchtec™ Gen 5 switches with firmware-level control from Microchip.

The PFX and PAX Switchtec™ Gen 5 switches are designed to be versatile: they allow various system topologies to be built, and the way the system's resources are divided up can be adapted to each use case.

Scalable multi-host+PFX/PAX+PFX CXL switching developed at Promwad

2. Hardware Design

To design the multi-host system concept and fulfil the customer's requirements, the Promwad engineers selected the following components:

- A COM-HPC carrier board manages communication and data flow between different components of the system.

- A scalable Switchtec™-based backplane provides high-speed connectivity between multiple devices.

- The Nvidia Mellanox NIC PCIe card connects the system to high-speed networks and enables fast data transfer.

- Two Nvidia GPU cards (full-size PCIe format) are used to perform compute-intensive tasks such as modelling, artificial intelligence, and big data analytics in HPC systems.

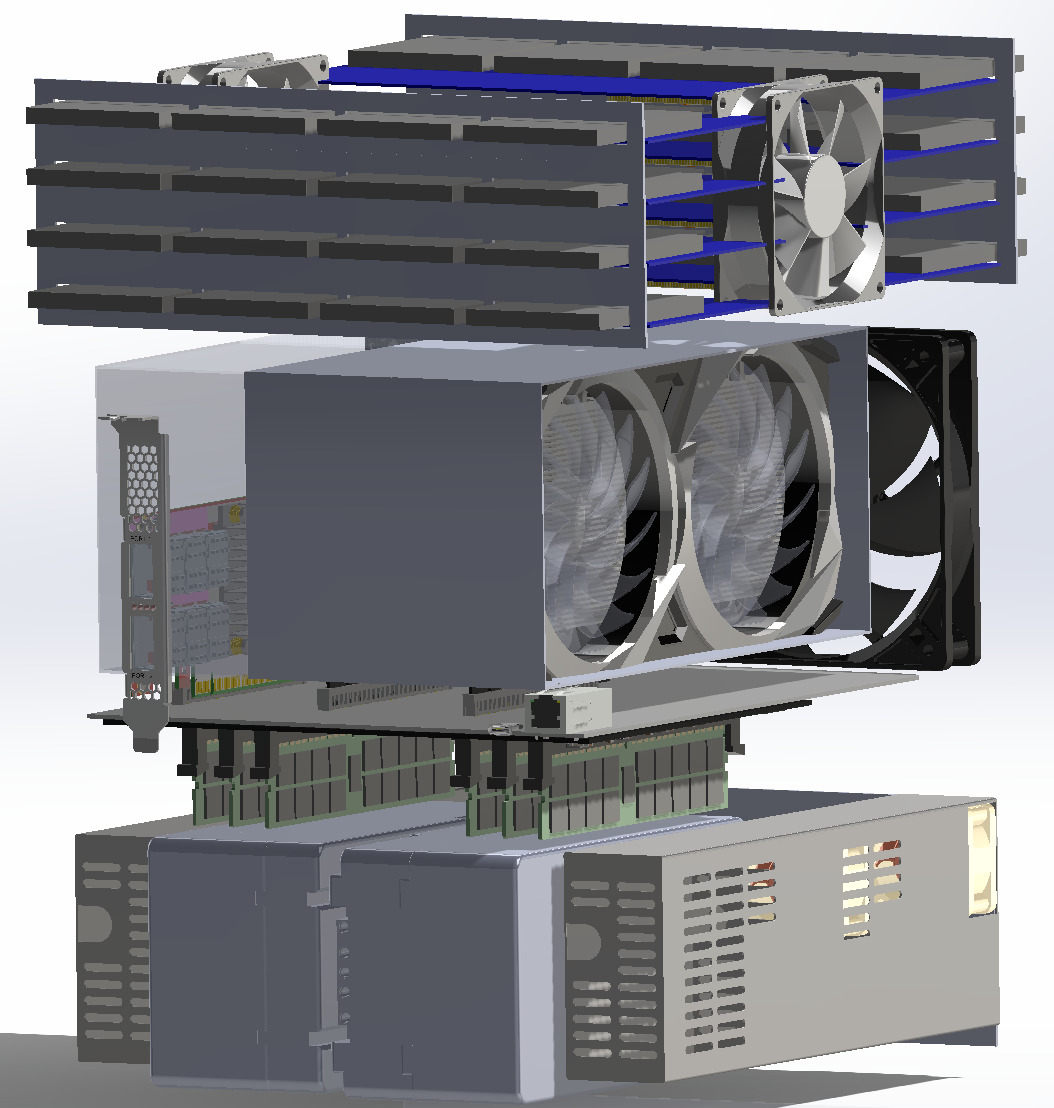

3D model of a fully functional system cluster for a multi-host system

3. Software Development

Our engineers also considered and incorporated the following software components into the concept:

- a file manager based on DPDK/SPDK and low-level firmware control for the Switchtec™ components;

- low-level firmware for power systems and system health control;

- web UI/UX;

- system control middleware and software;

- middleware layer for the file manager (capturing/recording);

- and other software components encapsulated in Docker and Kubernetes: server system, server user control interface, user data source subsystem control, user service (capturing/recording/transcoding/streaming), and backend.

4. System Specifications

The newly designed system has the following characteristics:

- Networking bandwidth:

  - 200 Gb/s for single-host architecture;

  - 400-800 Gb/s for multi-host architecture.

- Storage recording bandwidth:

  - 16 GB/s for single-host architecture;

  - 32 GB/s for multi-host architecture.

- Storage recording capacity:

  - 16 to 32 hot-pluggable NVMe drives, up to 1024 TB, for single-host architecture;

  - 32 to 256 hot-pluggable NVMe drives, up to 8192 TB, for multi-host architecture.
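As a rough sanity check on the figures above, the short sketch below converts the networking bandwidth from Gb/s to GB/s and derives the per-drive capacity implied by the maximum configurations. It is only an illustration: the dictionary keys and function names are made up for this example, and it assumes decimal units (8 bits per byte, no protocol overhead) and that the maximum capacity is reached with the maximum number of drives.

```python
# Figures copied from the specification list; the names are illustrative only.
SPECS = {
    "single-host": {
        "network_gbit_s": 200,    # networking bandwidth, Gb/s
        "record_gbyte_s": 16,     # storage recording bandwidth, GB/s
        "max_drives": 32,         # hot-pluggable NVMe drives
        "max_capacity_tb": 1024,  # storage recording capacity, TB
    },
    "multi-host": {
        "network_gbit_s": 800,    # upper end of the 400-800 Gb/s range
        "record_gbyte_s": 32,
        "max_drives": 256,
        "max_capacity_tb": 8192,
    },
}


def gbit_to_gbyte(gbit_per_s: float) -> float:
    """Convert Gb/s to GB/s, assuming 8 bits per byte and no overhead."""
    return gbit_per_s / 8


def implied_tb_per_drive(spec: dict) -> float:
    """Capacity per drive if the maximum capacity uses the maximum drive count."""
    return spec["max_capacity_tb"] / spec["max_drives"]


if __name__ == "__main__":
    for name, spec in SPECS.items():
        network_gbyte_s = gbit_to_gbyte(spec["network_gbit_s"])
        per_drive_tb = implied_tb_per_drive(spec)
        print(
            f"{name}: network {network_gbyte_s:.0f} GB/s, "
            f"recording {spec['record_gbyte_s']} GB/s, "
            f"about {per_drive_tb:.0f} TB per drive"
        )
```

Under these assumptions, both architectures imply the same per-drive capacity, which suggests the capacity figures scale with the number of drives rather than with drive size.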

The table below compares the key parameters of the different system configurations:

| Parameter | Half System | Advanced Half System | Full System |
| --- | --- | --- | --- |
| Networking bandwidth, Gb/s | 200 | 400 | 800 |
| Storage recording bandwidth, GB/s | 16 | 24 | 32 |
| Storage recording capacity, TB | 1024 | 4096 | 8192 |
| CPU threads (estimated virtual thread count) | 32 | 64 | 128 |
| GPU/CUDA threads (estimated virtual thread count) | 2048 | 3072 | 4096 |
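To put the configurations in perspective, the following sketch estimates how long each one could record at its full storage bandwidth before its maximum capacity is filled. It is a back-of-the-envelope illustration, not part of the delivered concept: the `Config` class and `hours_to_fill` function are hypothetical names, and the calculation assumes decimal units (1 TB = 1000 GB) and a sustained recording rate equal to the stated bandwidth.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Config:
    """One column of the comparison table (illustrative structure)."""
    name: str
    network_gbit_s: int   # networking bandwidth, Gb/s
    record_gbyte_s: int   # storage recording bandwidth, GB/s
    capacity_tb: int      # storage recording capacity, TB


# Values copied from the comparison table.
CONFIGS = [
    Config("Half System", 200, 16, 1024),
    Config("Advanced Half System", 400, 24, 4096),
    Config("Full System", 800, 32, 8192),
]


def hours_to_fill(cfg: Config) -> float:
    """Hours of continuous recording needed to fill the full capacity.

    Assumes 1 TB = 1000 GB and a sustained rate equal to the stated bandwidth.
    """
    seconds = cfg.capacity_tb * 1000 / cfg.record_gbyte_s
    return seconds / 3600


if __name__ == "__main__":
    for cfg in CONFIGS:
        print(f"{cfg.name}: about {hours_to_fill(cfg):.1f} hours to fill {cfg.capacity_tb} TB")
```

Because capacity grows faster than recording bandwidth from the Half System to the Full System (8x versus 2x), the larger configurations can sustain full-rate recording for longer before storage has to be offloaded or recycled.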

Business Value

As a result, our client now has a robust and scalable server system concept that can deliver up to 800 Gbps transfer speeds and 8192 TB of storage capacity.

Modernising its infrastructure with this system will allow the company to reach a new market position, retain current clients, and attract new ones thanks to the system's reliability and high data transfer speed.

More of What We Do for High-Performance Data Processing

- Enterprise NAS: a case study of developing a NAS storage system with DPDK / SPDK, improving data transfer speed and network efficiency.

- SPDK & DPDK: our experience in applying SPDK and DPDK technology to create complete solutions for storage and other networking applications.

- Promwad Embarks Ampere ARM: check out our comparison of Ampere's ARM processors with Intel chips for cloud computing and storage.