From Specification to Silicon: LLM-Aided Design Accelerates Hardware and Embedded Engineering

In 2025, hardware and embedded system design is entering a new phase, one in which large language models (LLMs) are no longer just assistants for documentation or code snippets but active co-designers across the flow. From writing RTL from natural language descriptions to generating testbenches and exploring microarchitecture trade-offs, LLMs are helping engineers shorten iteration cycles, reduce human error, and bring features to market faster.

For embedded platforms and specialized hardware, time-to-market is often the differentiator. A faster design cycle means earlier deployment, more design refinement, and a chance to capture market windows. Traditional design flows involve layers of manual effort—specification translation, module scaffolding, verification, and constraint tuning. LLMs can intervene at many of these layers, automating repetitive tasks, offering design suggestions, and integrating feedback from synthesis and simulation tools to refine outputs.

Below, I walk through how LLMs are being used today in hardware and embedded engineering, the parts of the design flow they influence, real examples from research and practice, key caveats, and strategies to adopt LLM-aided design without sacrificing quality or reliability.

Why LLMs Matter in Hardware & Embedded Design

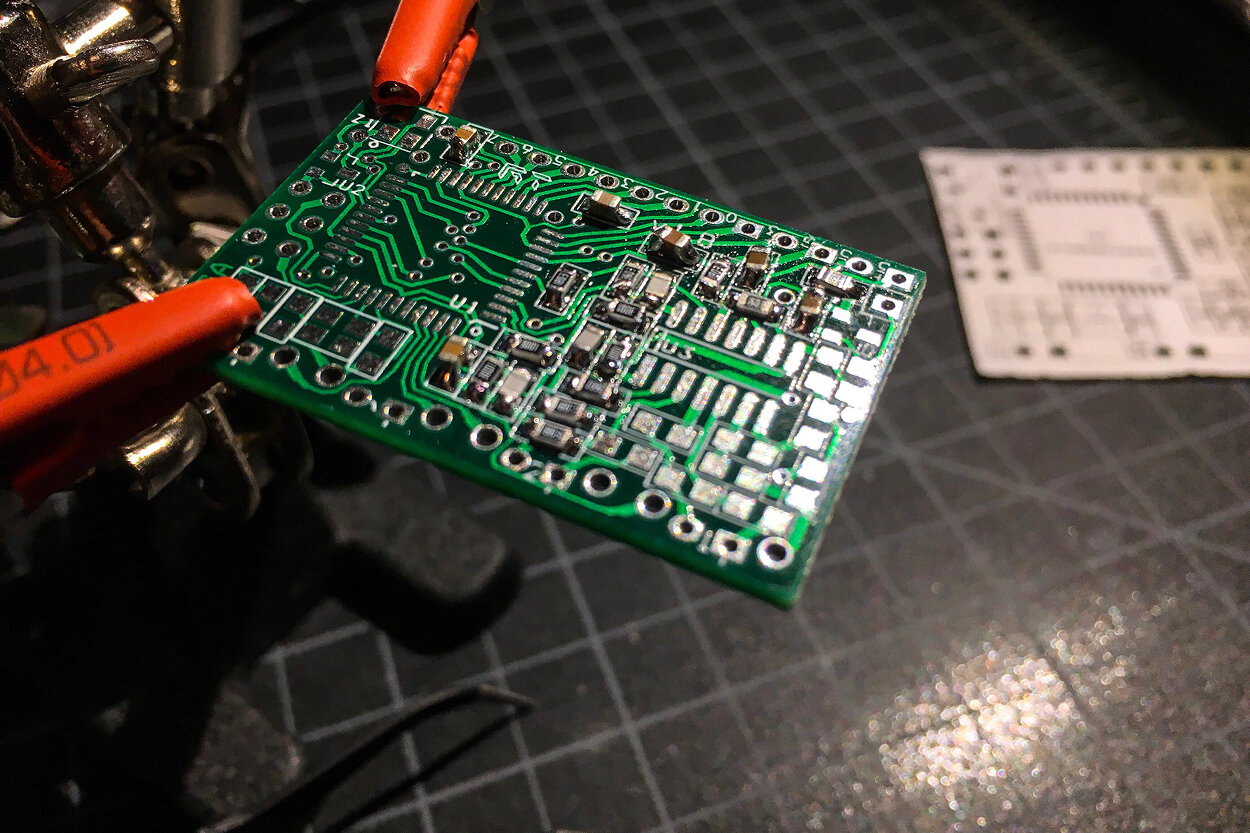

Hardware design is complex. Engineers must translate high-level requirements into precise RTL, balance area, power, and timing, and satisfy constraints across synthesis, placement, routing, and verification. Embedded systems add further constraints: memory, power, thermal limits, I/O, and integration with software stacks. Every manual step, error, or delay in this chain can push delivery out by weeks or months.

LLMs offer an opportunity to automate and accelerate parts of that chain. Unlike rule-based tools, they can ingest natural language specifications, contextual code, and even design history, and produce structured outputs—HDL, testbenches, assertions, constraints, or even alternative microarchitectures. They are particularly valuable in scaffolding, repair, suggestion, and verification roles.

Recent academic work, such as “LLM-Aided Efficient Hardware Design Automation” (arXiv), shows that LLMs can generate HDL modules, debug RTL, and assist with testbench generation. The LLMCompass framework (August Ning) supports design space exploration by estimating the performance, area, and cost of candidate hardware designs before full RTL is written. HLSPilot, another emerging tool, uses LLMs to translate C/C++ into HLS code, integrates design space exploration for pragma tuning, and targets hybrid CPU–FPGA systems (arXiv).

These tools suggest LLMs are useful not only as helpers but as integrated members of the design stack, offering suggestions, validation, and iterative evolution.

Where LLMs Help: Flow Stages & Use Cases

1. Specification & Module Scaffolding

One of the first friction points in hardware design is turning requirements into module outlines. LLMs can parse natural language or structured specs and produce module skeletons in Verilog or VHDL, complete with I/O ports, state machines, and stub logic. Engineers then fill in or refine the logic. This saves boilerplate time and reduces mismatches with the spec.
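To make the scaffolding target concrete, here is a minimal sketch of what a skeleton for a structured spec looks like, written as a deterministic Python renderer rather than an LLM call. The `Port` record and `scaffold_module` function are hypothetical; a team might use a renderer like this for purely structural specs, or as a reference to diff an LLM-generated skeleton against so that port names, directions, and widths match the spec exactly.

```python
from dataclasses import dataclass


@dataclass
class Port:
    """One entry of a hypothetical structured spec."""
    name: str
    direction: str  # "input" or "output"
    width: int = 1


def scaffold_module(name: str, ports: list[Port]) -> str:
    """Render an ANSI-style Verilog module skeleton with an empty body."""
    decls = []
    for p in ports:
        if p.direction not in ("input", "output"):
            raise ValueError(f"unsupported direction for {p.name}: {p.direction}")
        # Single-bit ports get no range; wider ports get [width-1:0].
        rng = f"[{p.width - 1}:0] " if p.width > 1 else ""
        decls.append(f"    {p.direction} wire {rng}{p.name}")
    body = ",\n".join(decls)
    return f"module {name} (\n{body}\n);\n    // TODO: implement logic\nendmodule\n"


if __name__ == "__main__":
    print(scaffold_module("fifo", [Port("clk", "input"), Port("din", "input", 8),
                                   Port("dout", "output", 8)]))
```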

2. RTL Generation & Repairs

LLMs can generate synthesizable RTL modules from prompts and, more importantly, repair or patch existing RTL based on error logs. For example, if synthesis or simulation fails with a specific error, the LLM can ingest the error and suggest fixes (e.g. missing signal assignments, mismatched widths, incorrect state machine transitions). This feedback loop between tools and model accelerates debugging.
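The tool-to-model half of that loop is mostly plumbing: pull structured errors out of the log and hand the model only the relevant source lines. A minimal sketch, assuming error lines of the form `file:line: error: message` (similar to what Icarus Verilog prints); other tools need their own patterns, and the prompt wording is purely illustrative.

```python
import re
from dataclasses import dataclass

# Assumed log format; Windows paths with drive letters would need a different pattern.
ERROR_RE = re.compile(r"^(?P<file>[^:\s]+):(?P<line>\d+): error: (?P<msg>.+)$")


@dataclass
class ToolError:
    file: str
    line: int  # 1-indexed, as reported by the tool
    message: str


def parse_errors(log: str) -> list[ToolError]:
    """Extract error records from a compiler or simulator log."""
    errors = []
    for raw in log.splitlines():
        m = ERROR_RE.match(raw.strip())
        if m:
            errors.append(ToolError(m["file"], int(m["line"]), m["msg"]))
    return errors


def build_repair_prompt(source: str, errors: list[ToolError], context: int = 2) -> str:
    """Build a repair prompt that quotes each error with a few numbered source lines."""
    lines = source.splitlines()
    parts = ["The following Verilog failed to compile. Fix only the reported errors."]
    for err in errors:
        lo = max(err.line - 1 - context, 0)       # first 0-based index to show
        hi = min(err.line + context, len(lines))  # exclusive end index
        snippet = "\n".join(f"{i + 1:4d}: {lines[i]}" for i in range(lo, hi))
        parts.append(f"Error at {err.file}:{err.line}: {err.message}\n{snippet}")
    return "\n\n".join(parts)
```

Keeping the prompt narrow (only the failing lines plus a little context) is a design choice that tends to discourage the model from rewriting working logic while it fixes the reported error.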

3. Testbench & Assertion Creation

Testing is critical, and writing testbenches, random stimulus, and assertions is time-consuming. LLMs can generate test harnesses, coverage goals, SystemVerilog Assertions (SVA), and directed stimuli. Because much of this code follows reusable templates, LLMs can help reduce the verification backlog.
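As an example of the templated assertions an LLM might be asked to produce (or that a reviewer can check its output against), here is a minimal sketch that renders SVA properties for valid/ready handshakes. The function name and signal names are hypothetical, and the encoded rule, that valid stays high and data stays stable until ready is seen, is an assumed AXI-style convention rather than a universal requirement.

```python
def handshake_assertions(clk: str, rst_n: str, pairs: list[tuple[str, str, str]]) -> list[str]:
    """Render SVA properties for valid/ready handshakes.

    Each pair is (valid, ready, data). Assumes an active-low reset and the
    AXI-style rule that an offered transfer must not be withdrawn or changed.
    """
    props = []
    for valid, ready, data in pairs:
        # Antecedent: a transfer is offered but not yet accepted this cycle.
        pending = f"({valid} && !{ready})"
        clocking = f"@(posedge {clk}) disable iff (!{rst_n})"
        # Next cycle, valid must still be asserted and data must be unchanged.
        props.append(f"{valid}_held: assert property ({clocking} {pending} |=> {valid});")
        props.append(f"{data}_stable: assert property ({clocking} {pending} |=> $stable({data}));")
    return props


if __name__ == "__main__":
    for line in handshake_assertions("clk", "rst_n", [("s_valid", "s_ready", "s_data")]):
        print(line)
```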

4. Constraint & Optimization Suggestions

Placement and routing, timing constraints, clock domain crossings, and pipeline balancing all require hand tuning. LLMs can analyze RTL and suggest pragmas, constraints, or pipeline buffering decisions, informed by design patterns and past data. These suggestions aim at meeting timing margins, but static timing analysis, not the model, determines whether they actually do.
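Constraint suggestions are easiest to review when they arrive in a fixed, checkable shape. The sketch below emits a basic SDC block (a clock plus input and output delays) from a target frequency; `create_clock`, `set_input_delay`, and `set_output_delay` are standard SDC commands, while the function name and the default 30% I/O budget are placeholder assumptions, not recommendations.

```python
def sdc_for_clock(clk_port: str, freq_mhz: float, inputs: list[str], outputs: list[str],
                  io_fraction: float = 0.3) -> str:
    """Emit basic SDC: one clock, plus I/O delays set to a fraction of the period.

    The io_fraction default is an illustrative budget only; real values come
    from the board or the surrounding design.
    """
    if freq_mhz <= 0:
        raise ValueError("frequency must be positive")
    if not 0.0 <= io_fraction < 1.0:
        raise ValueError("io_fraction must be in [0, 1)")
    period_ns = 1000.0 / freq_mhz  # MHz -> ns
    io_delay = period_ns * io_fraction
    lines = [f"create_clock -name {clk_port} -period {period_ns:.3f} [get_ports {clk_port}]"]
    for p in inputs:
        lines.append(f"set_input_delay -clock {clk_port} {io_delay:.3f} [get_ports {p}]")
    for p in outputs:
        lines.append(f"set_output_delay -clock {clk_port} {io_delay:.3f} [get_ports {p}]")
    return "\n".join(lines)


if __name__ == "__main__":
    print(sdc_for_clock("clk", 200.0, ["din"], ["dout"]))
```

A generator like this can also serve as a baseline: if an LLM proposes different numbers, the diff against the baseline is what a reviewer needs to justify before running timing analysis.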

5. Design Space Exploration & Trade-Off Advising

Before writing full RTL, engineers often sketch several microarchitectures (e.g. array sizes, pipeline depth, degree of parallelism). LLMs can propose and compare candidates, reason qualitatively about area, latency, and power, and help choose a direction before heavy investment. Pairing them with analytical performance and cost models, such as the LLMCompass framework (August Ning), grounds those comparisons in quantitative estimates.
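The screening step can be illustrated with a deliberately crude analytical model. The sketch below is not LLMCompass; it assumes a hypothetical output-stationary array of `rows x cols` processing elements computing an M×K by K×N matrix product, where each output tile takes K cycles plus `rows + cols` cycles of fill and drain, and uses the PE count as an area proxy. Candidates (which an LLM might propose) are then reduced to the Pareto front on cycles versus area.

```python
import math
from dataclasses import dataclass
from itertools import product


@dataclass(frozen=True)
class Candidate:
    rows: int
    cols: int
    cycles: int  # toy latency estimate
    area: int    # proxy: number of processing elements


def evaluate(rows: int, cols: int, m: int, n: int, k: int) -> Candidate:
    """Toy cost model (an assumption, not a measured or published model)."""
    tiles = math.ceil(m / rows) * math.ceil(n / cols)
    return Candidate(rows, cols, tiles * (k + rows + cols), rows * cols)


def pareto(cands: list[Candidate]) -> list[Candidate]:
    """Keep candidates not dominated on (cycles, area); lower is better for both."""
    front = []
    for c in cands:
        dominated = any(
            o.cycles <= c.cycles and o.area <= c.area
            and (o.cycles < c.cycles or o.area < c.area)
            for o in cands
        )
        if not dominated:
            front.append(c)
    return sorted(front, key=lambda c: c.area)


if __name__ == "__main__":
    sizes = [4, 8, 16]
    cands = [evaluate(r, c, m=64, n=8, k=64) for r, c in product(sizes, sizes)]
    for c in pareto(cands):
        print(c)
```

For this tall, narrow problem, wide arrays such as 4×16 are dominated because most of their columns sit idle, which is the kind of trade-off the qualitative discussion should surface before any RTL exists.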

6. HLS & C-to-Hardware Paths

Because the semantic gap from natural language to RTL is wide, some flows go through intermediate C/C++ and high-level synthesis (HLS). HLSPilot uses LLMs to generate C/C++ code amenable to hardware synthesis, inject pragmas, and iteratively refine critical kernels into optimized hardware blocks (arXiv).
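Pragma exploration in such a flow boils down to generating source variants and letting the HLS tool report latency and resources for each. Here is a minimal, generic sketch of the variant-generation step; it is not HLSPilot's implementation. The kernel, the `// PRAGMA_SLOT` marker, and the function names are hypothetical, and the pragma spellings follow AMD Vitis HLS conventions (`PIPELINE II=`, `UNROLL factor=`), so check your tool's documentation for which combinations it accepts.

```python
from itertools import product

# Hypothetical kernel with a marker where loop pragmas are inserted.
KERNEL = """void vadd(const int a[1024], const int b[1024], int c[1024]) {
  vadd_loop: for (int i = 0; i < 1024; i++) {
    // PRAGMA_SLOT
    c[i] = a[i] + b[i];
  }
}"""


def pragma_variants(iis=(1, 2), unroll_factors=(1, 2, 4)):
    """Yield ((ii, unroll_factor), pragma_lines) for every combination."""
    for ii, uf in product(iis, unroll_factors):
        lines = [f"#pragma HLS PIPELINE II={ii}"]
        if uf > 1:  # factor 1 means "no unrolling", so emit nothing
            lines.append(f"#pragma HLS UNROLL factor={uf}")
        yield (ii, uf), lines


def inject(source: str, pragma_lines: list[str], slot: str = "// PRAGMA_SLOT") -> str:
    """Replace the single slot marker with pragmas, preserving its indentation."""
    if source.count(slot) != 1:
        raise ValueError("expected exactly one pragma slot")
    idx = source.index(slot)
    line_start = source.rfind("\n", 0, idx) + 1
    indent = source[line_start:idx]
    return source.replace(slot, ("\n" + indent).join(pragma_lines))


if __name__ == "__main__":
    for key, lines in pragma_variants():
        print(key)
        print(inject(KERNEL, lines))
```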

7. Mixed RTL + Verification Integration

LLMs can help weave together RTL and verification flows—for example, generating assertions corresponding to design spec sections, or auto-updating testbenches when RTL changes. This integration reduces drift between logic and tests.
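One cheap, deterministic guard against drift is checking that the testbench still connects to the module's current port list whenever either side is regenerated. A minimal sketch, assuming ANSI-style module headers without parameters, no comments inside the port list, and named port connections (`.port(signal)`) in the testbench; the function names are hypothetical, and a production flow would use a real HDL parser instead of regular expressions.

```python
import re


def module_ports(rtl: str) -> tuple[str, set[str]]:
    """Return (module name, port names) from an ANSI-style header."""
    m = re.search(r"\bmodule\s+(\w+)\s*\((.*?)\)\s*;", rtl, re.S)
    if m is None:
        raise ValueError("no ANSI-style module header found")
    # The port name is the last identifier in each comma-separated declaration.
    ports = {re.findall(r"\w+", piece)[-1] for piece in m.group(2).split(",") if piece.strip()}
    return m.group(1), ports


def connected_ports(tb: str, module: str) -> set[str]:
    """Return port names used in named connections of the first instance of module."""
    m = re.search(rf"\b{re.escape(module)}\s+\w+\s*\((.*?)\)\s*;", tb, re.S)
    if m is None:
        raise ValueError(f"no instance of {module} found")
    return set(re.findall(r"\.(\w+)\s*\(", m.group(1)))


def port_drift(rtl: str, tb: str) -> dict[str, list[str]]:
    """Report ports the testbench leaves unconnected or references but do not exist."""
    name, ports = module_ports(rtl)
    used = connected_ports(tb, name)
    return {"unconnected": sorted(ports - used), "unknown": sorted(used - ports)}
```

A non-empty `unknown` list usually means the RTL was regenerated without updating the testbench; a non-empty `unconnected` list may be intentional but is worth a reviewer's glance.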

8. Documentation, Review, and Knowledge Transfer

Beyond code, LLMs assist in generating design documentation, spec-to-code traceability, and peer review feedback. They can spot inconsistencies or missing invariants and flag code segments with low coverage relative to spec.
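Spec-to-code traceability is easiest to audit when requirements carry stable IDs that generated RTL and assertions are asked to cite. The sketch below assumes a hypothetical `REQ-NNN` tagging convention and builds a simple trace matrix, then lists requirements with no implementing artifact and tags that do not exist in the spec; both lists are natural inputs for an LLM-assisted or human review.

```python
import re

# Hypothetical convention: requirement IDs look like REQ-001.
REQ_RE = re.compile(r"\bREQ-\d{3}\b")


def trace_matrix(spec: str, artifacts: dict[str, str]) -> dict[str, list[str]]:
    """Map each requirement ID in the spec to the artifact files that cite it."""
    reqs = sorted(set(REQ_RE.findall(spec)))
    tags = {name: set(REQ_RE.findall(text)) for name, text in artifacts.items()}
    return {r: sorted(n for n, t in tags.items() if r in t) for r in reqs}


def untraced(matrix: dict[str, list[str]]) -> list[str]:
    """Requirements that no artifact cites."""
    return [r for r, files in matrix.items() if not files]


def orphans(spec: str, artifacts: dict[str, str]) -> list[str]:
    """IDs cited in artifacts that the spec does not define."""
    known = set(REQ_RE.findall(spec))
    cited = set().union(*(REQ_RE.findall(t) for t in artifacts.values())) if artifacts else set()
    return sorted(cited - known)
```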

Real-World & Research Examples

- The “LLM-Aided Efficient Hardware Design Automation” paper explores using LLMs to generate HDL, debug RTL, and produce testbenches (arXiv).

- ChipGPT presents a framework that takes a natural language specification, auto-generates Verilog modules, and selects among candidate variants to optimize the result (arXiv).

- HLSPilot shows a practical pipeline in which LLMs convert sequential C/C++ into HLS-friendly code, optimize pragmas, and produce hardware-accelerated kernels (arXiv).

- The EmbedAgent benchmark framework simulates the roles of embedded system programmers, architects, and integrators to test LLM abilities on hardware design tasks, from component selection to platform integration (arXiv).

These cases demonstrate that LLM-aided design is already moving beyond toy demos into useful time-saving augmentation.

Benefits: Why Engineers Use LLM-Aided Design

- Faster iteration cycles: for well-patterned modules, scaffolding, repair, and test-writing steps that took hours can shrink to minutes.

- Reduced repetitive effort: frees engineers from boilerplate or error-prone tasks and lets them focus on core innovation.

- Lower barrier to entry: designers with less HDL experience can prototype logic faster, accelerating embedded team productivity.

- Consistency and traceability: prompts, model output, and feedback loops form a reproducible audit trail.

- Smarter suggestions from history: trained or fine-tuned LLMs can internalize domain knowledge, hints, and best practices, surfacing design choices that align with past successes.

As LLMs move deeper into hardware and embedded design flows, the critical question is no longer what they can generate, but what engineers are actually willing to rely on. A pragmatic look at trust boundaries for LLMs highlights why successful teams restrict models to scaffolding, verification assistance, and exploration, while keeping architectural decisions and final accountability firmly human-owned. Understanding these boundaries helps prevent over-automation and ensures that speed gains do not come at the cost of correctness, timing closure, or long-term maintainability.

Caveats, Risks & Quality Controls

LLMs are powerful, but not infallible—especially in hardware design where correctness, timing, and power matter. Some risks and necessary guardrails:

- “Hallucinations” and logical bugs: The model might generate syntactically correct but functionally incorrect logic. Every LLM-generated module must be verified with simulation, formal methods, or synthesis.

- Over-reliance and complacency: Engineers must not accept suggestions blindly. Human review, coverage-driven testing, and assertion-based checking remain essential.

- Scaling to full systems: LLMs struggle to generate large hierarchical SoC designs out of the box; they work best as helpers in modular design, not as full-SoC autopilots.

- Prompt engineering complexity: Crafting precise prompts, feedback loops, and context windows is nontrivial and requires tuning.

- Versioning and drift: As design constraints, processes, and tools evolve, the model and the context it is given must be updated; a stale model may propose code that no longer fits current libraries or flows.

- Integration with toolchains: LLM outputs must integrate seamlessly with synthesis, timing analysis, place-and-route tools, and version control. Mismatches in naming, library assumptions, or constraints can break the flow.

How to Adopt LLM-Aided Design in Practice

- Start small, pick a use case

Choose a module or component (interface, FIFO, controller) where repeatable patterns exist. Use prompts to scaffold, then validate.

- Use a loop of generation → compile → feedback → refine

Embrace an interactive process. The model suggests, you synthesize or simulate, you feed errors back and ask for repair.

- Build a prompt library & design patterns

Curate prompt templates, domain context, naming conventions, and code styles so outputs remain consistent.

- Integrate with verification early

Automatically generate assertions or testbenches alongside RTL, and require HDL to pass tests before merging.

- Version model and design artifacts

Track which prompt, model version, and context led to a generated module—so you can reproduce or revert.

- Monitor quality metrics

Track how many generated modules survive unedited, how many require repair, and their coverage and area/timing quality. Use these metrics to adjust prompts or guide fine-tuning.

- Hybridize with human-in-the-loop

Always treat the LLM as a collaborator, not a replacement; assign review, refactoring, and final responsibility to engineers.

- Gradually broaden scope

Once module-level workflows stabilize, extend their use to verification, constraint tuning, and architectural exploration.
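Several of these practices (the generation, compile, feedback, refine loop; versioning prompts and model versions; monitoring how often output survives) fit into one small harness. The sketch below is a hedged illustration: `generate` and `check` are hypothetical callables standing in for the model API and for a compile or simulation step, and the record fields and prompt text are assumptions rather than any particular tool's schema.

```python
import hashlib
from dataclasses import dataclass, field
from typing import Callable, Optional


@dataclass
class Attempt:
    prompt_sha256: str  # lets you reproduce or audit exactly what was asked
    errors: list[str]   # tool errors for this attempt; empty means it passed


@dataclass
class RunRecord:
    model_version: str
    attempts: list[Attempt] = field(default_factory=list)
    accepted: Optional[str] = None  # final RTL, if any attempt passed


def refine_loop(spec: str,
                generate: Callable[[str], str],     # hypothetical: prompt -> RTL text
                check: Callable[[str], list[str]],  # hypothetical: RTL -> error messages
                model_version: str,
                max_iters: int = 4) -> RunRecord:
    """Generate, check, feed errors back, and record provenance for each attempt."""
    record = RunRecord(model_version)
    prompt = f"Write synthesizable Verilog for:\n{spec}"
    for _ in range(max_iters):
        rtl = generate(prompt)
        errors = check(rtl)
        record.attempts.append(Attempt(hashlib.sha256(prompt.encode()).hexdigest(), errors))
        if not errors:
            record.accepted = rtl
            break
        # Feed the tool's complaints back and ask for a targeted repair.
        prompt = (f"Write synthesizable Verilog for:\n{spec}\n\nPrevious attempt:\n{rtl}\n\n"
                  "Fix these errors:\n" + "\n".join(errors))
    return record


def first_pass_rate(records: list[RunRecord]) -> float:
    """Fraction of runs accepted on the first attempt (one possible quality metric)."""
    if not records:
        return 0.0
    ok = sum(1 for r in records if r.accepted is not None and len(r.attempts) == 1)
    return ok / len(records)
```

Human review still sits after `accepted` is set: passing `check` only means the tools raised no errors, not that the logic matches the spec.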

What This Means for Embedded and Hardware Engineering

LLM-aided design is not a magic bullet, but it is a powerful accelerator. Especially in embedded and hardware domains, where time, complexity, and iteration cost dominate, adding an AI co-designer helps reduce friction across the stack—from module scaffolding to verification to constraint tuning.

When a company can prototype a new hardware feature or subsystem in days instead of weeks, it gains agility. More design variants can be explored. Embedded teams can experiment more aggressively. Legacy codebases can be refactored faster. Even small hardware teams can compete with larger ones by scaling productivity.

For the industry, this signals a shift: design tools and EDA flows will evolve toward “AI-native” architectures. LLM backends, feedback integrations, prompt-based interfaces, verification-aware generation, and co-designed tool chains will become expectations—not experiments.

If your organization builds hardware, FPGA, or embedded platforms, adopting LLM-aided design is no longer optional—it is a strategic enabler. But do it safely: start modular, validate aggressively, and treat the model as a partner, not a replacement.

AI Overview: LLM-Aided Hardware & Embedded Design

LLM-Aided Design — Overview (2025)

LLM-aided design uses large language models as co-designers in hardware and embedded development—generating RTL, testbenches, constraints, and exploring architecture—thus accelerating iteration and reducing time-to-market.

Key Applications:

- Natural language to RTL conversion and scaffolding

- Testbench/Assertion generation and verification support

- Design space exploration and optimization suggestions

- C-to-HLS translation and mixed CPU–accelerator flows

Benefits:

- Faster design cycles and reduced manual boilerplate

- Higher productivity for teams with limited resources

- Consistency, with embedded design knowledge captured in prompts and templates

Challenges:

- Ensuring correctness, managing hallucinations, and prompt stability

- Integrating generated artifacts into tool flows and version control

- Scaling to complex systems and keeping the model current as processes and tools drift

Outlook:

- Short term: module-level adoption and verification-augmented pipelines

- Mid term: deeper integration into EDA tools and co-design environments

- Long term: autonomous AI-assisted design environments where human engineers oversee, refine, and direct rather than hand-code

Related Terms: hardware co-design, RTL generation, design automation, embedded engineering, HLS, verification, prompt engineering, model repair.
