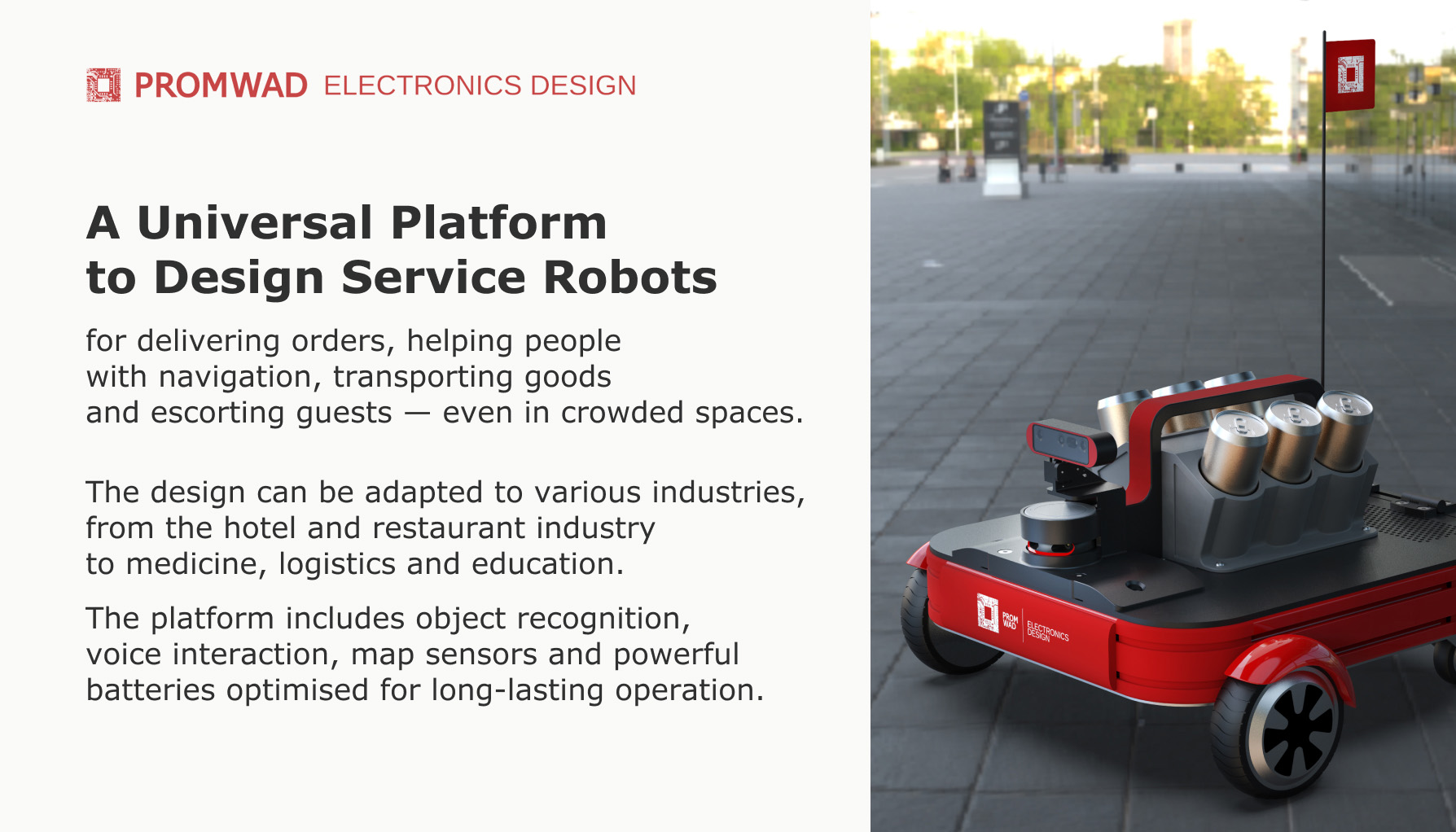

Autonomous Mobile Robotic Kit: Service Robots from Concept to Application

Project in a Nutshell: The Promwad team developed an engineering kit to design custom AI-powered service robots for autonomous parcel delivery and escorting guests. This design can be applied in various industries, from hotels and restaurants to medicine, logistics and education.

This adaptable platform is based on the Qualcomm Dragonwing™ RB3 Gen 2 Dev Kit and Hilscher NXHX 90-MC Dev Board to ensure safe navigation even in crowded places. Neural networks for object recognition are integrated into the system: they allow the robot to accurately distinguish people from other obstacles.

The platform includes voice interaction, map sensors and powerful batteries optimised for long-lasting operation.

Challenge

Promwad set a goal to create a mobile robot to serve drinks to visitors at tech events and showcase the capabilities of our universal platform for custom service robot design.

Although the project is not intended for commercial use, our goal was to create an engineering kit with navigation and control systems and a compact, easily assembled enclosure.

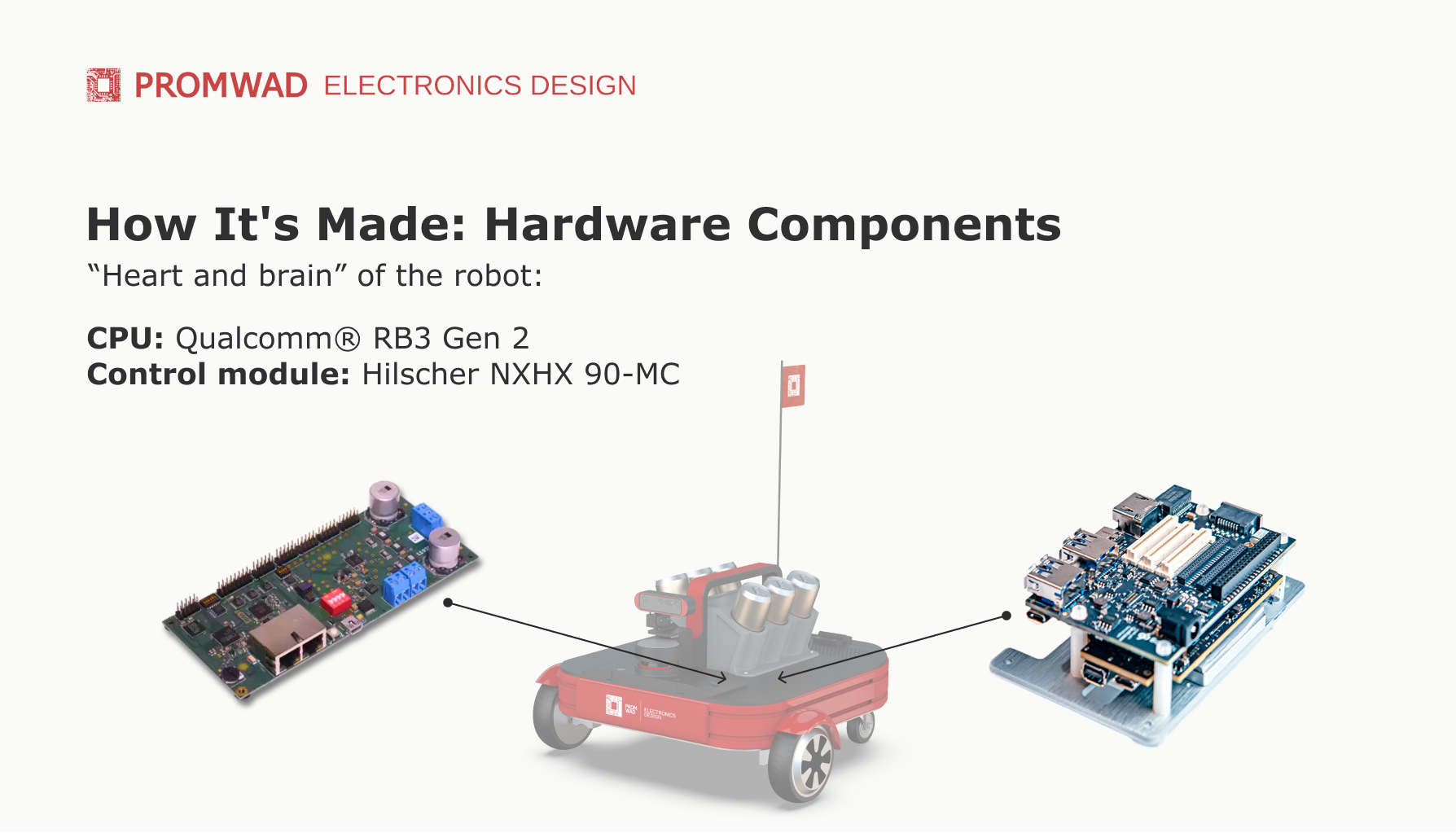

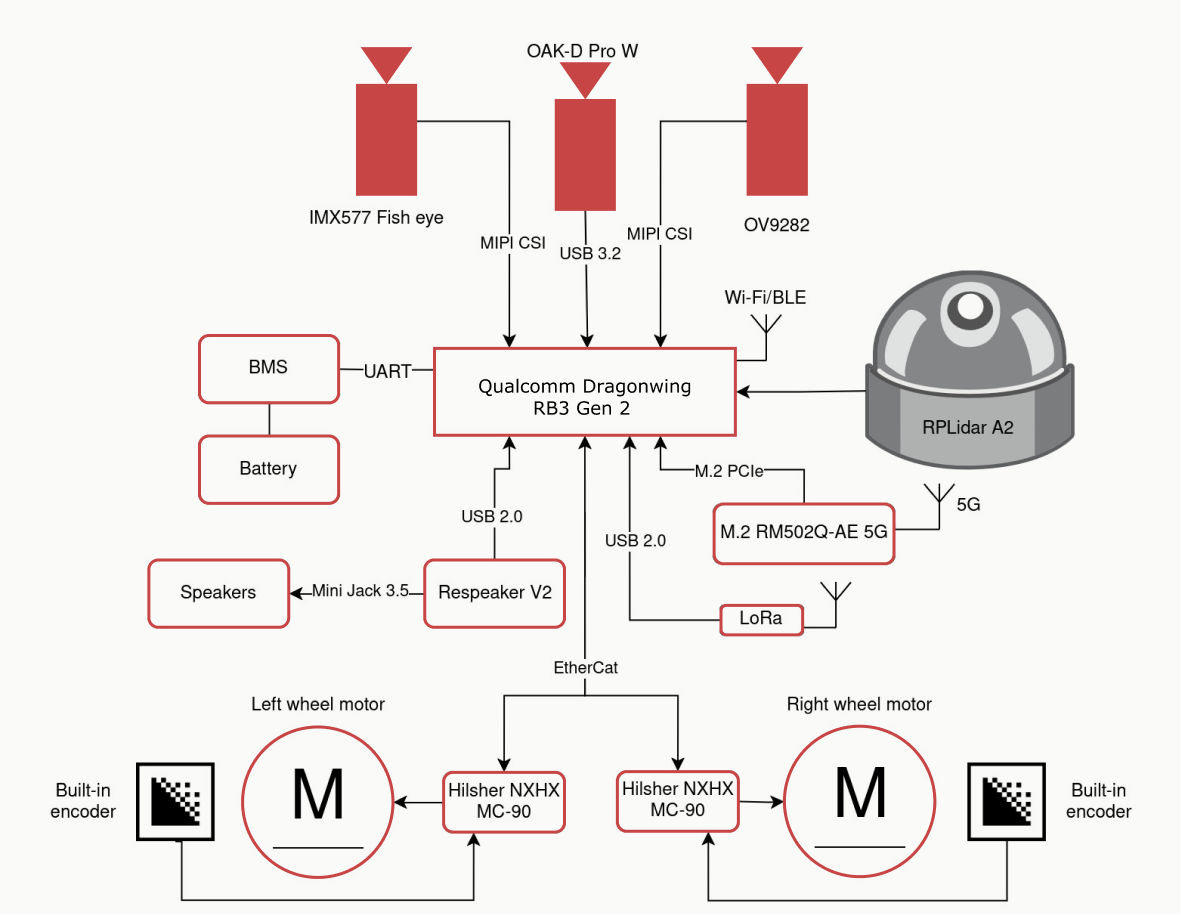

1. Hardware Design

The platform's hardware architecture integrates various sensor, communication and control components. The central computing unit of the system is the Qualcomm Dragonwing™ RB3 Gen 2 Dev Kit, which integrates all components.

Hardware architecture block diagram: the Qualcomm Dragonwing™ RB3 Gen 2 Dev Kit and its integrated components

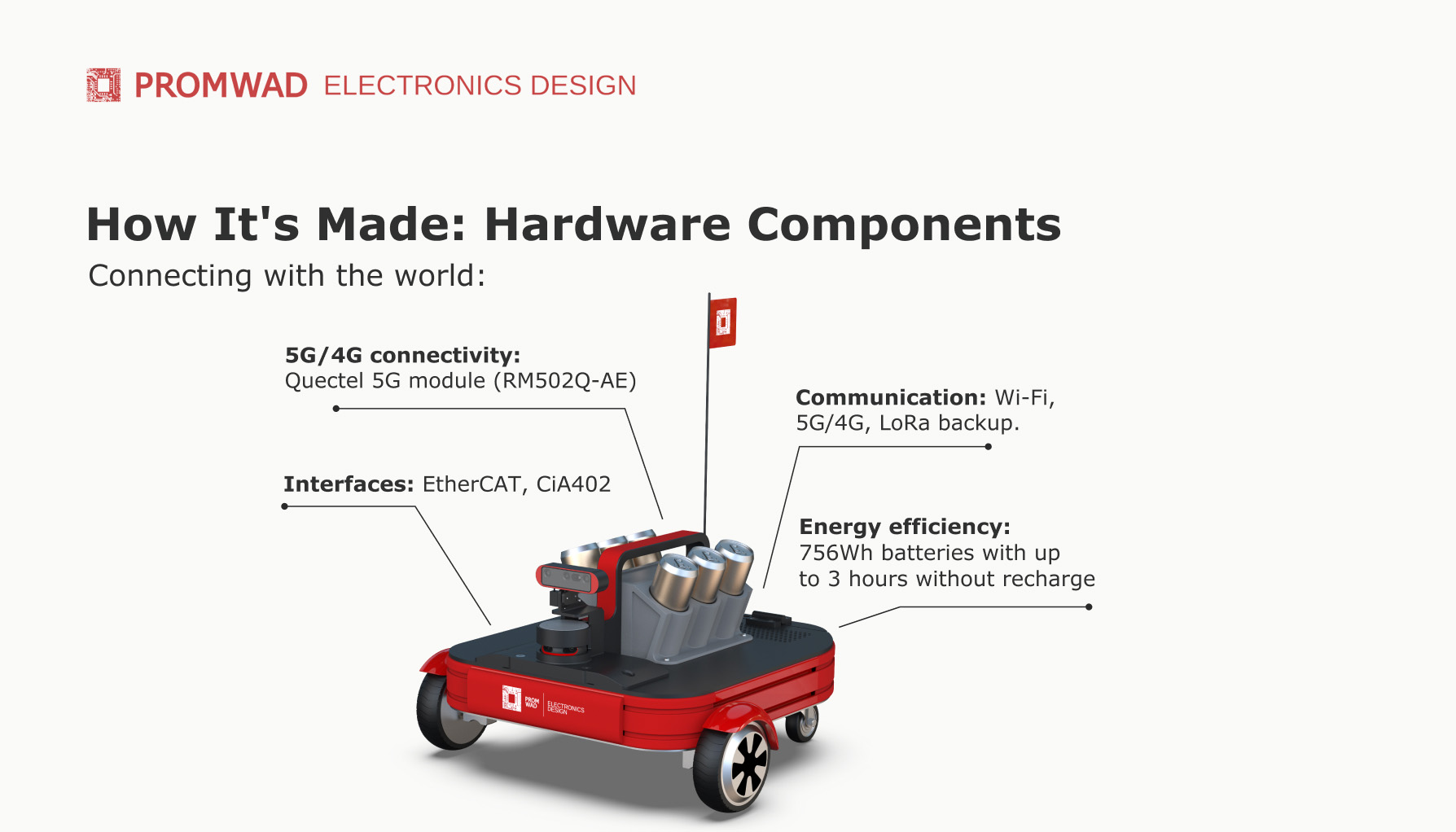

The Qualcomm® RB3 platform provides wireless platform connectivity over multiple channels:

- Wi-Fi: basic indoor connectivity.

- 5G/4G: supported by the RM502Q-AE 5G modem over an M.2 PCIe connection, used when Wi-Fi coverage is not available.

- LoRa: redundant channel for telemetry and basic control, providing communication in low coverage areas.
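The fallback order described in the list above can be sketched as a simple priority lookup. This is a minimal illustration only: the channel names, the `select_channel` function and the `link_up` dictionary are hypothetical and are not taken from the kit's software.

```python
from typing import Dict, Optional

# Priority order taken from the list above: Wi-Fi first, cellular when Wi-Fi
# is unavailable, LoRa as the redundant low-bandwidth channel.
# The channel names themselves are illustrative assumptions.
CHANNEL_PRIORITY = ("wifi", "cellular", "lora")


def select_channel(link_up: Dict[str, bool]) -> Optional[str]:
    """Return the highest-priority channel whose link is up, or None."""
    for name in CHANNEL_PRIORITY:
        if link_up.get(name, False):
            return name
    return None
```

A caller would refresh `link_up` from its own link monitoring and switch transports whenever the returned name changes.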

Navigation with Lidar Mapping & Cameras for 360-View

The RPLidar A2 is the primary sensor for localisation and mapping. It provides 360-degree laser scanning to detect obstacles and create 2D maps of the environment, which is essential for accurate localisation and map updating. On the robot, however, the lidar's field of view does not cover approximately 90 degrees behind the platform. We compensated for this limitation with computer vision algorithms using a rear-mounted IMX577 fisheye camera.
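To make the blind sector concrete, the sketch below checks whether a given bearing falls into the rear region that the lidar does not cover. It assumes the sector is centred on the robot's rear axis and that bearings are measured from straight ahead, counter-clockwise positive; both conventions, and the function name, are assumptions made for illustration.

```python
import math

# Approximate width of the rear sector not covered by the lidar (from the text).
BLIND_SECTOR_DEG = 90.0


def in_rear_blind_sector(bearing_rad: float,
                         sector_deg: float = BLIND_SECTOR_DEG) -> bool:
    """True if a bearing (0 = straight ahead) lies inside the rear sector.

    Assumes the sector is symmetric about the direction straight behind
    the robot (bearing = pi).
    """
    # Angular distance between the bearing and straight back, wrapped to [0, pi].
    delta = bearing_rad - math.pi
    diff = abs(math.atan2(math.sin(delta), math.cos(delta)))
    return diff <= math.radians(sector_deg) / 2.0
```

Detections in this sector would have to come from the rear camera rather than from the laser scan.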

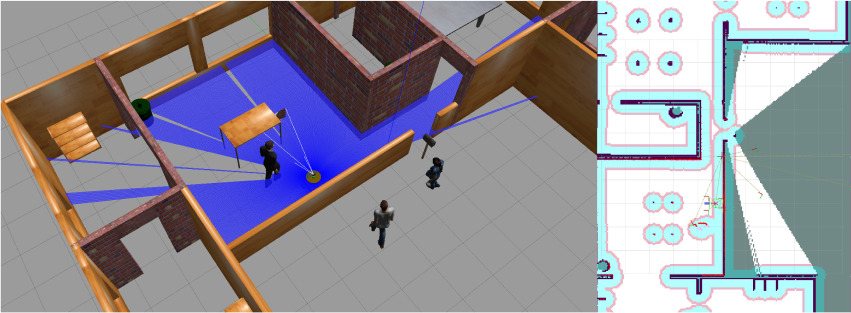

Robot simulation with a simulated human and the robot's navigation map at the same moment, with one person in the robot's line of sight

Additionally, for navigation accuracy, the platform is equipped with the OAK-D Pro W and OV9282 cameras. The front-mounted OAK-D Pro W depth camera supports spatial orientation and navigation by providing point cloud data and detecting people for social navigation tasks. The OV9282 camera will be used for ceiling navigation.

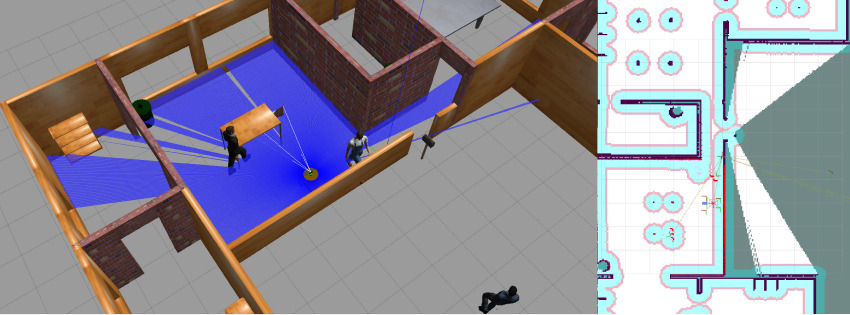

Robot simulation and navigation map with two people in the robot's line of sight

Sound Processing & Voice Control

For sound processing, we integrated the Respeaker V2 module with ODAS (Open Embedded Audition System). This solution provides localisation of the sound source and isolation of specific speech from the background noise. Various audio messages and signals are broadcast through the speakers.
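As an illustration of what sound source localisation yields, the sketch below converts a direction vector pointing at a sound source into azimuth and elevation angles. It assumes the localiser reports a direction as Cartesian components (x, y, z) in the microphone array frame, with x pointing forward and z pointing up; the axis convention and the function name are assumptions, not details confirmed by the project.

```python
import math
from typing import Tuple


def direction_to_angles(x: float, y: float, z: float) -> Tuple[float, float]:
    """Convert a direction vector to (azimuth, elevation) in degrees.

    Azimuth is measured in the x-y plane from the x axis; elevation is
    measured from the x-y plane towards the z axis.
    """
    azimuth = math.degrees(math.atan2(y, x))
    elevation = math.degrees(math.atan2(z, math.hypot(x, y)))
    return azimuth, elevation
```

The azimuth could then be used, for example, to turn the robot towards the person speaking.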

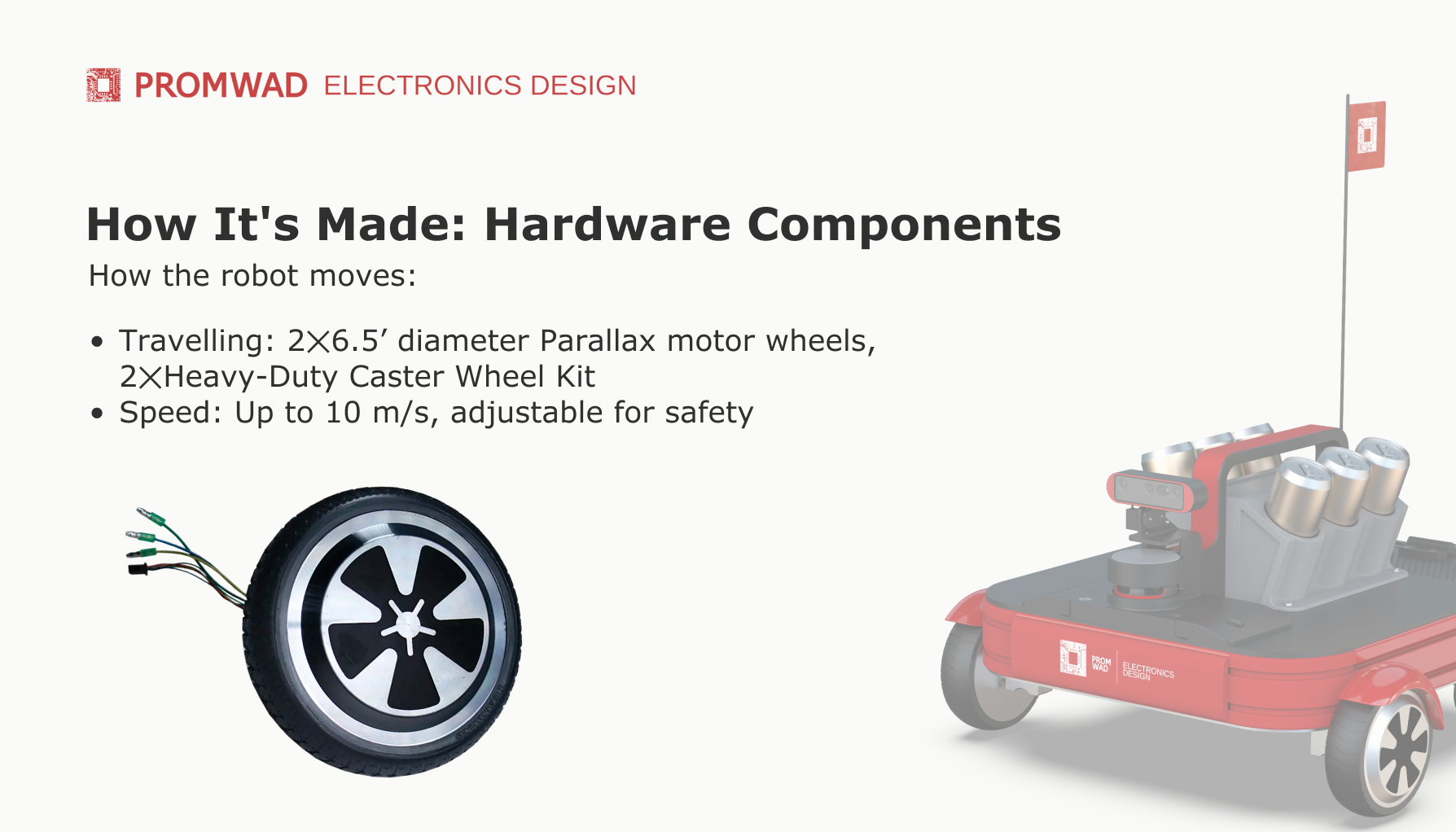

Travelling

The platform has two powerful 6.5-inch diameter Parallax motor wheels with built-in encoders, rated at 250 W each. They provide stability, manoeuvrability and fast response to commands. Two passive wheels from the Heavy-Duty Caster Wheel Kit are used to maintain balance and minimise power consumption.

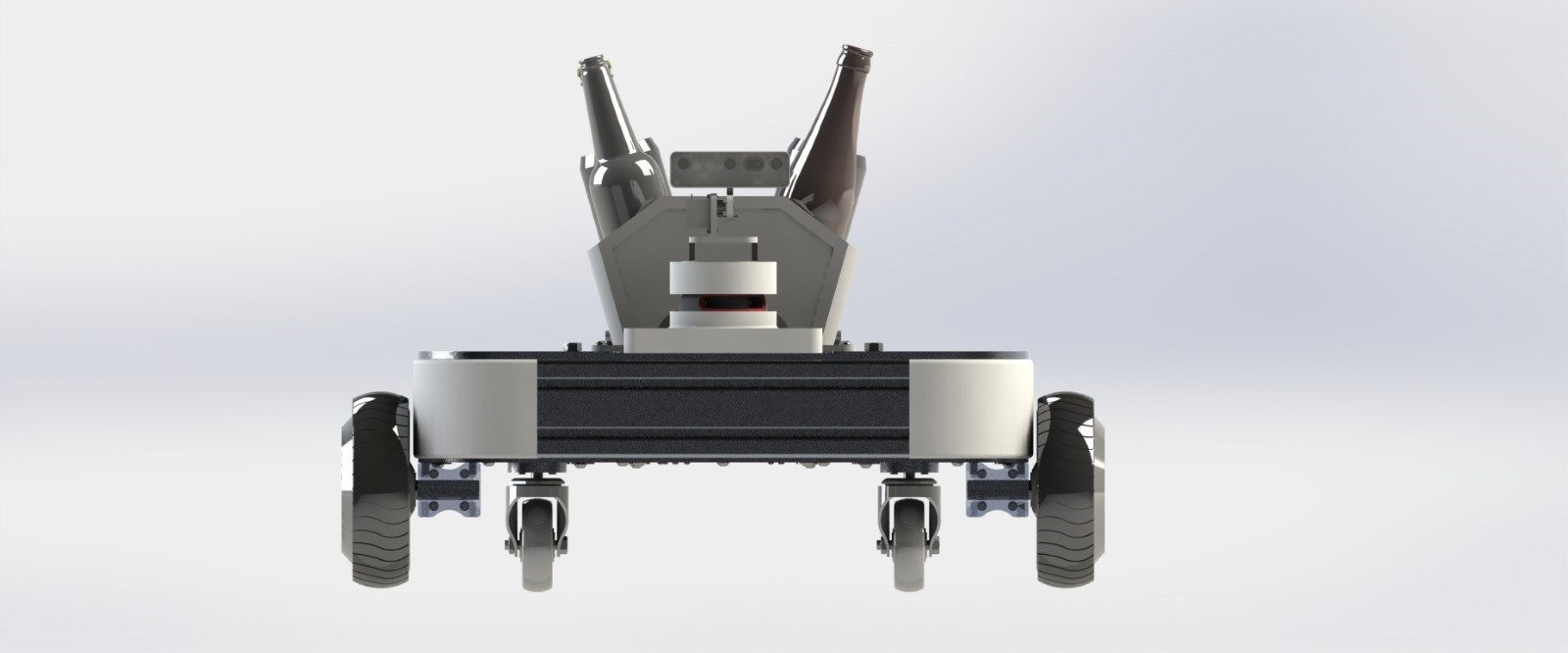

The platform has two 6.5-inch motor wheels for manoeuvrability and two passive wheels for balance and energy efficiency

The maximum travelling speed is 10 m/s. The platform can reach even higher values, but the speed will be deliberately limited by software to meet the safety standards of public events.

The motors are controlled by the Hilscher NXHX 90-MC, which utilises the EtherCAT interface for fast and reliable data transfer and the CiA402 standard for drive control. The module is configured via an ESI file, allowing flexible customisation. This architecture simplifies system integration and provides low latency, high throughput and synchronisation of all components. The control profile supports three main modes:

- positioning;

- speed mode;

- torque control.
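In CiA 402, the active mode is selected through the "Modes of operation" object (index 0x6060). The sketch below lists the standard mode codes that correspond to the three modes above. The text does not say whether the kit uses the profile or the cyclic synchronous variants, so both are shown, and the `mode_for` helper and its mode names are hypothetical.

```python
from enum import IntEnum

# CiA 402 object that selects the drive's operating mode.
MODES_OF_OPERATION_INDEX = 0x6060


class Cia402Mode(IntEnum):
    """Standard CiA 402 'Modes of operation' codes."""
    PROFILE_POSITION = 1
    PROFILE_VELOCITY = 3
    PROFILE_TORQUE = 4
    CYCLIC_SYNC_POSITION = 8
    CYCLIC_SYNC_VELOCITY = 9
    CYCLIC_SYNC_TORQUE = 10


# Hypothetical mapping from the mode names used in the text to
# (profile variant, cyclic synchronous variant).
_MODES = {
    "positioning": (Cia402Mode.PROFILE_POSITION, Cia402Mode.CYCLIC_SYNC_POSITION),
    "speed": (Cia402Mode.PROFILE_VELOCITY, Cia402Mode.CYCLIC_SYNC_VELOCITY),
    "torque": (Cia402Mode.PROFILE_TORQUE, Cia402Mode.CYCLIC_SYNC_TORQUE),
}


def mode_for(name: str, cyclic: bool = False) -> Cia402Mode:
    """Return the mode code to write to object 0x6060 for a named mode."""
    profile, cyclic_sync = _MODES[name]
    return cyclic_sync if cyclic else profile
```

For example, `mode_for("speed")` returns the profile velocity code, which an EtherCAT master would write to the drive before enabling it.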

Battery & Power Management

The power management subsystem includes a 350 Wh 10S3P battery with a UART-connected battery management system. The battery provides 1.5 hours of operation at full power. In real-world applications, this time can be extended to three hours. A full charge takes 4.2 hours.
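The figures above imply some rough power budgets, which the following back-of-the-envelope sketch works out. The nominal cell voltage of 3.6 V is an assumption (a typical value for Li-ion cells, not stated in the text), and the charging figure ignores losses.

```python
CAPACITY_WH = 350.0       # battery energy, from the text
CELLS_IN_SERIES = 10      # the "10S" in 10S3P
NOMINAL_CELL_V = 3.6      # assumption: typical Li-ion nominal cell voltage


def average_power_w(energy_wh: float, hours: float) -> float:
    """Average power that drains (or fills) energy_wh in the given time."""
    return energy_wh / hours


full_power_w = average_power_w(CAPACITY_WH, 1.5)     # about 233 W at full power
typical_power_w = average_power_w(CAPACITY_WH, 3.0)  # about 117 W over three hours
charge_power_w = average_power_w(CAPACITY_WH, 4.2)   # about 83 W, losses ignored

pack_voltage_v = CELLS_IN_SERIES * NOMINAL_CELL_V    # about 36 V under the assumption
capacity_ah = CAPACITY_WH / pack_voltage_v           # about 9.7 Ah
```

In other words, extending the runtime to three hours corresponds to an average draw of roughly half the full-power figure.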

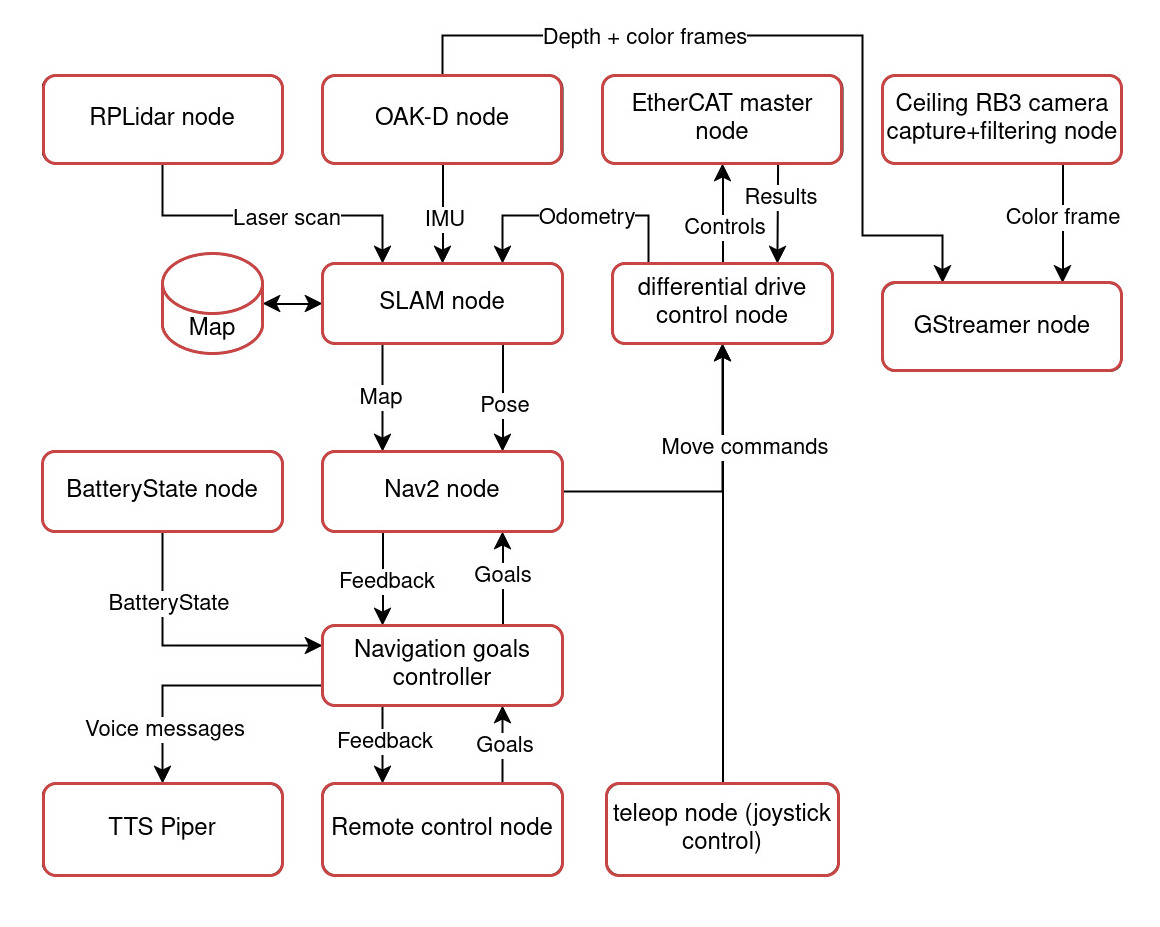

2. Software Development

The engineering kit is equipped with all levels of software required for full operation. It has all the essential modules for specific tasks, including:

- Remote control node.

- Sensor nodes for lidar and cameras.

- EtherCAT master node for controlling EtherCAT networks. We used the QCS6490 SoC on the RB3 Gen 2 to run the EtherCAT master stack with the iCube library for ROS2. The library is based on the IgH EtherCAT Master driver by Etherlab and enables its integration into the ros2_control stack.

- BatteryState node for monitoring the robot's battery status.

- SLAM node for simultaneous localisation and mapping.

- Nav2 node for path planning and platform navigation.

- Differential drive control node, which receives commands from Nav2 or Teleop and converts them into commands for the motors, allowing the robot to move along predetermined paths.

- Navigation goals controller for managing the platform's navigation goals.

- Teleop node for manual operation with a joystick.

- GStreamer for transmitting video data from cameras.

- Text-to-Speech (TTS) Piper for generating speech from text messages.

- Gazebo for the physical simulation of the robot and its interaction with the environment.

- HuNavSim for simulating human behaviour, providing a more realistic environment for testing navigation algorithms.

Block diagram of ROS2 project nodes

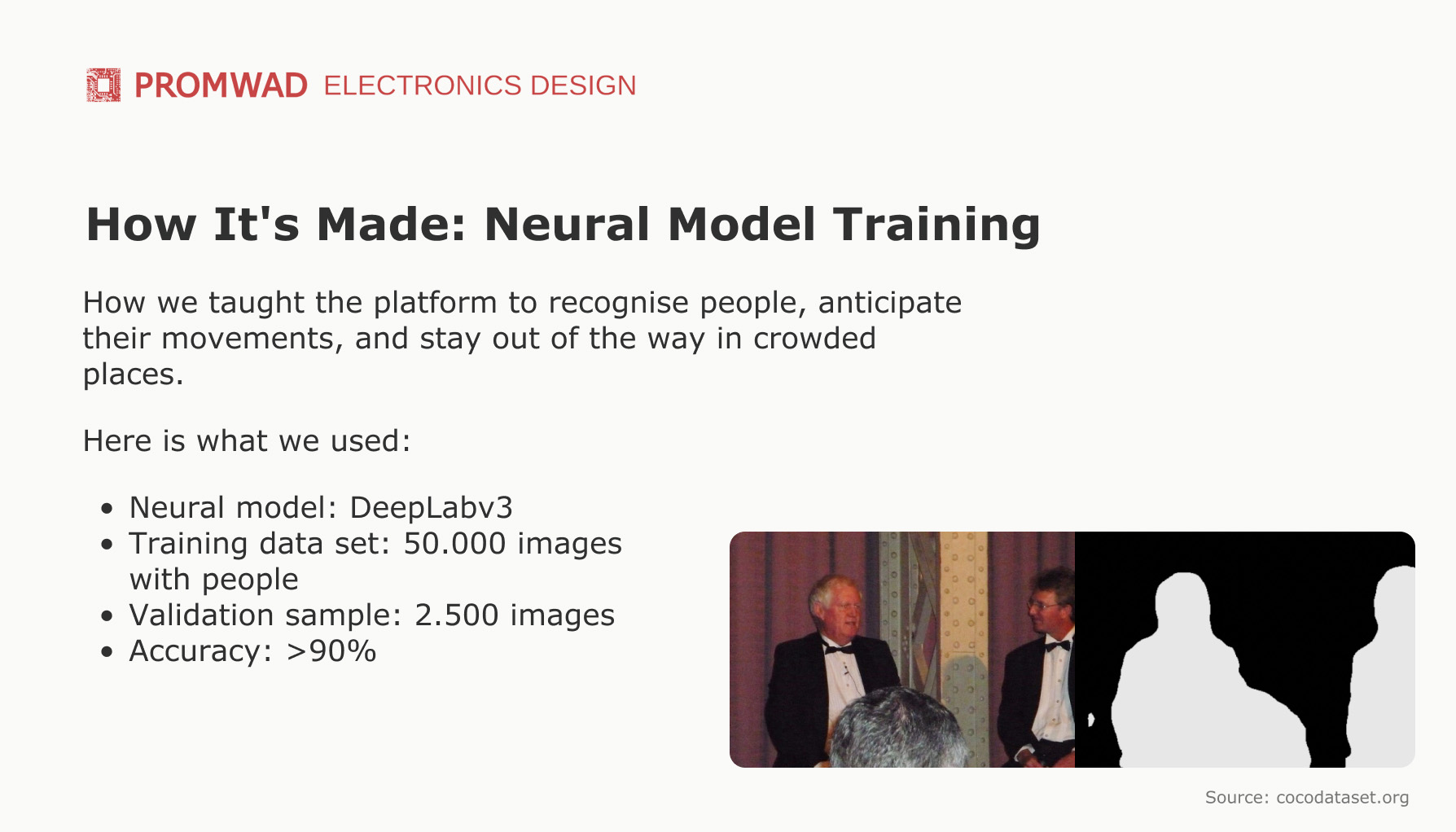
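The conversion performed by the differential drive control node can be illustrated with the standard differential-drive kinematics below. This is a minimal sketch rather than the project's implementation: the wheel radius follows from the 6.5-inch wheels mentioned in the hardware section, while the track width and the software speed limit are hypothetical values chosen for the example.

```python
from typing import Tuple

WHEEL_RADIUS_M = 6.5 * 0.0254 / 2.0  # 6.5-inch wheel diameter, converted to metres
TRACK_WIDTH_M = 0.40                 # hypothetical distance between the drive wheels
MAX_LINEAR_SPEED_MPS = 1.0           # hypothetical software speed limit


def twist_to_wheel_speeds(linear_mps: float,
                          angular_radps: float) -> Tuple[float, float]:
    """Convert a body velocity command to (left, right) wheel speeds in rad/s."""
    # Clamp the forward speed to the software limit before converting.
    v = max(-MAX_LINEAR_SPEED_MPS, min(MAX_LINEAR_SPEED_MPS, linear_mps))
    # Each wheel's rim speed is the body speed plus or minus the turning term.
    v_left = v - angular_radps * TRACK_WIDTH_M / 2.0
    v_right = v + angular_radps * TRACK_WIDTH_M / 2.0
    return v_left / WHEEL_RADIUS_M, v_right / WHEEL_RADIUS_M
```

With a positive angular velocity (counter-clockwise turn), the right wheel spins faster than the left; the resulting wheel speeds are what a velocity-mode drive would receive as targets.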

3. Neural Model Training

Since the platform is designed to develop robots for operation in crowded environments, accurate obstacle recognition and motion prediction are essential for safe and precise navigation.

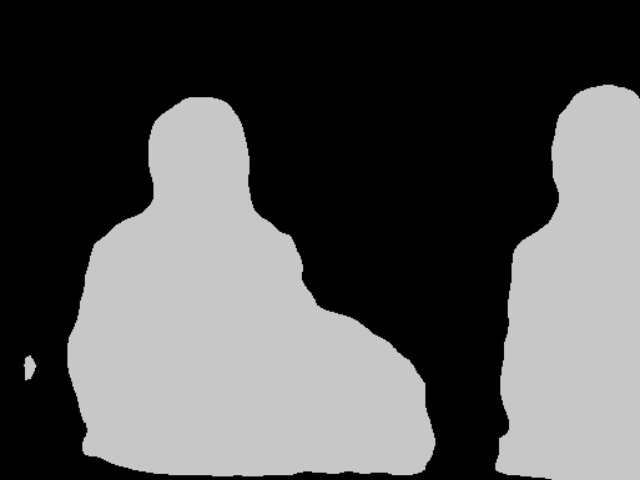

Lidar data cannot be the only source of navigation information, as the presence and movement of people create significant errors in the calculations. To solve this problem, we developed a technique that detects people and excludes the lidar points related to them. We applied semantic segmentation, a computer vision technique in which each pixel of an image is classified as belonging to a specific class (“human” in our case), and used a binary mask to separate humans from other objects.

The image on the left is the original photo, and the one on the right is the so-called segmentation mask, where all pixels belonging to the “human” class are grey to separate them from the background. During training, the model repeatedly looks at such pairs (original + mask) and adjusts its parameters so that each time it determines more accurately which pixels belong to people.

Image segmentation using DeepLabv3 neural model. Source: cocodataset.org
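The idea of excluding lidar points that belong to people can be sketched as follows. This is a simplified illustration and not the project's method: it assumes an ideal forward-facing pinhole camera aligned with the lidar, maps each scan bearing to an image column, and drops the point if that column contains any "human" pixel in the binary mask. All function names and the camera model are assumptions.

```python
import math
from typing import List, Optional, Sequence, Tuple


def bearing_to_column(bearing_rad: float, image_width: int,
                      hfov_rad: float) -> Optional[int]:
    """Map a bearing (0 = optical axis, positive to the left) to an image column.

    Returns None when the bearing is outside the camera's horizontal field of view.
    """
    if abs(bearing_rad) >= hfov_rad / 2.0:
        return None
    fx = (image_width / 2.0) / math.tan(hfov_rad / 2.0)  # focal length in pixels
    u = image_width / 2.0 - fx * math.tan(bearing_rad)
    return min(image_width - 1, max(0, int(u)))


def drop_human_points(scan: Sequence[Tuple[float, float]],
                      mask: Sequence[Sequence[int]],
                      hfov_rad: float) -> List[Tuple[float, float]]:
    """Keep (bearing, range) points that do not fall on a 'human' column of the mask."""
    width = len(mask[0])
    # A 2D lidar carries no height information, so a column counts as "human"
    # if any pixel in it is set in the binary mask.
    human_columns = [any(row[c] for row in mask) for c in range(width)]
    kept = []
    for bearing, rng in scan:
        col = bearing_to_column(bearing, width, hfov_rad)
        if col is not None and human_columns[col]:
            continue
        kept.append((bearing, rng))
    return kept
```

Points outside the camera's field of view are kept unchanged here, since the mask carries no information about them.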

For image segmentation, we chose the DeepLabv3 model, which is based on the MobileNet architecture. Its key feature is dilated convolutions, which increase the receptive field of the model for accurate segmentation.

We trained the model on the portion of the COCO image dataset that contains people. The resulting training set contained more than 50 thousand images, and the validation set more than 2.5 thousand images.

We used the Intersection over Union (IoU) metric to evaluate the quality, which shows how much the predicted and annotated masks overlap and match. On validation, the model achieved an IoU score of over 90%.
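For reference, the IoU of two binary masks is the number of pixels set in both masks divided by the number of pixels set in at least one of them. The sketch below computes it for masks given as nested lists of 0/1 values; returning 1.0 when both masks are empty is a convention chosen for this example.

```python
from typing import Sequence


def iou(pred: Sequence[Sequence[int]], target: Sequence[Sequence[int]]) -> float:
    """Intersection over Union of two binary masks of the same shape."""
    intersection = 0
    union = 0
    for pred_row, target_row in zip(pred, target):
        for p, t in zip(pred_row, target_row):
            if p and t:
                intersection += 1
            if p or t:
                union += 1
    # Convention for this sketch: two empty masks match perfectly.
    return intersection / union if union else 1.0
```

For instance, if the predicted and annotated masks share two pixels and together cover four, the IoU is 0.5.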

The weights were quantised after training to optimise the model for the Qualcomm Dragonwing™ RB3 Gen 2 Dev Kit. This reduced the memory footprint and improved model performance, ensuring stable and accurate segmentation under real-world conditions.
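To show why quantisation reduces the memory footprint, the sketch below applies symmetric per-tensor int8 quantisation to a list of floating-point weights. It is a generic illustration of the technique; the text does not describe the actual quantisation scheme or toolchain used on the device, so the scheme and function names here are assumptions.

```python
from typing import List, Sequence, Tuple


def quantise_int8(weights: Sequence[float]) -> Tuple[List[int], float]:
    """Symmetric per-tensor quantisation of float weights to the range [-127, 127]."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    quantised = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantised, scale


def dequantise(quantised: Sequence[int], scale: float) -> List[float]:
    """Recover approximate float weights from int8 values and the scale."""
    return [q * scale for q in quantised]
```

Each weight is then stored in one byte instead of four (for 32-bit floats), at the cost of a rounding error of at most half the scale per weight.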

4. Enclosure and Mechanical Design

The platform's frame is made of a sturdy aluminium profile with laser-cut and 3D-printed parts, making it easy to assemble and eliminating the need for specialised tooling.

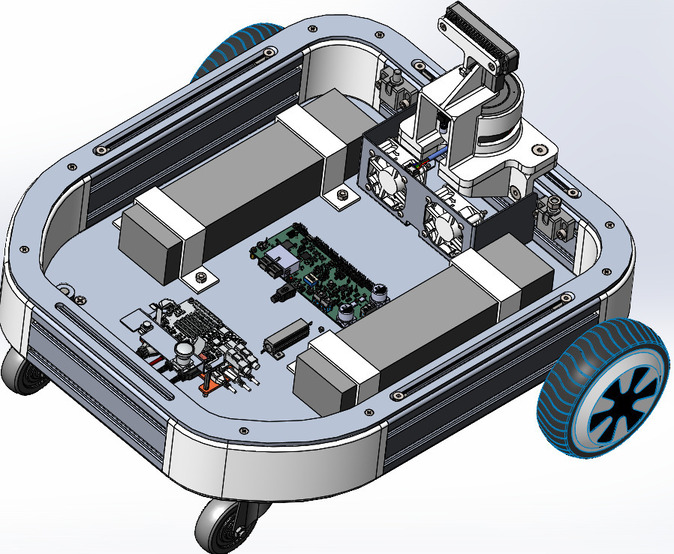

The base of the robotic kit body with key control components

The base of the platform’s enclosure contains the control elements:

- a machine vision module;

- a lidar;

- a microphone;

- batteries;

- fans;

- the Qualcomm Dragonwing™ RB3 Gen 2 Dev Kit;

- the Hilscher NXHX 90-MC development kit.

Business Value

The engineering robotics kit enables our clients to enter the service robotics market efficiently. With this off-the-shelf solution, they can reduce development time and costs while customising their design to meet the specific needs of various industries, such as hospitality, warehousing and healthcare.

The platform’s sophisticated navigation, obstacle avoidance and human awareness features make it ideal for environments requiring safe and autonomous service.

More of What We Do for Robotics

- Robotics Engineering: explore our robotics development services for a variety of applications.

- Nvidia Robotics Platform with Industrial Protocols: a case study on the development of a first-of-its-kind platform with support for popular industrial networking protocols.

- SRCI with PROFINET: check out our study of the SRCI interface for robot control using PLCs from different manufacturers.