Adaptive Memory Hierarchies: Combining SRAM, MRAM, and ReRAM for Smarter Edge Systems

In the rapidly evolving world of edge and embedded computing, memory is the new bottleneck. As AI inference, real-time analytics, and data-rich sensors migrate closer to the edge, devices must process and store information faster than ever — yet within tight power and cost constraints.

For decades, designers have relied on SRAM and DRAM as primary fast-access memory and Flash for non-volatile storage. But this traditional hierarchy is breaking down. Data-intensive workloads like AI inference, sensor fusion, and neuromorphic computing require adaptive memory systems that can balance speed, endurance, and retention dynamically.

The emerging solution: hybrid memory hierarchies combining SRAM, MRAM, and ReRAM, each optimized for a specific layer of the data stack. Together, they form a new architecture where memory adapts to workload demands — a crucial innovation for edge AI and ultra-low-power embedded devices.

The edge memory problem

Edge devices are expected to deliver near cloud-level performance under strict energy budgets. They can’t afford the standby power of DRAM refresh cycles, nor can they tolerate Flash’s slow write speeds.

The main challenges are:

- Latency: AI inference requires nanosecond-level memory access.

- Energy efficiency: DRAM consumes significant power for refresh and data movement.

- Endurance: Flash typically wears out after roughly 10⁴ to 10⁵ program/erase cycles, which is unacceptable for continuous data logging.

- Scalability: Edge SoCs need high-density, low-cost solutions without external memory modules.

This combination of constraints has led researchers to rethink memory not as a static hierarchy but as a dynamic ecosystem that can reconfigure itself based on real-time workloads.
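To make the endurance constraint concrete, here is a back-of-envelope sketch in Python. The function name `days_until_wearout`, the one-write-per-second logging rate, and the assumption of ideal wear-leveling are illustrative choices rather than datasheet values; the endurance numbers are order-of-magnitude figures quoted in this article.

```python
SECONDS_PER_DAY = 24 * 60 * 60


def days_until_wearout(endurance_cycles, writes_per_second, cells_sharing_load=1):
    """Days until the written cells reach their endurance limit.

    Assumes writes are spread evenly over `cells_sharing_load` locations
    (ideal wear-leveling); real devices fall short of this.
    """
    if writes_per_second <= 0:
        raise ValueError("writes_per_second must be positive")
    total_writes = endurance_cycles * cells_sharing_load
    return total_writes / writes_per_second / SECONDS_PER_DAY


# Assumption: a logger rewriting the same location once per second.
flash_days = days_until_wearout(1e4, writes_per_second=1)   # Flash, ~10^4 cycles
reram_days = days_until_wearout(1e6, writes_per_second=1)   # ReRAM, low end of range
mram_days = days_until_wearout(1e15, writes_per_second=1)   # MRAM, upper figure

for name, days in [("Flash", flash_days), ("ReRAM", reram_days), ("MRAM", mram_days)]:
    print(f"{name}: {days:.3g} days")
```

Under these assumptions, a single Flash location rewritten once per second reaches 10⁴ cycles in under three hours, which is why continuous logging needs either aggressive wear-leveling or a higher-endurance memory.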

The new triad: SRAM, MRAM, and ReRAM

Let’s break down the strengths and weaknesses of the three leading technologies and how they complement one another.

1. SRAM (Static RAM)

- Speed: The fastest form of embedded memory — used for caches and register files.

- Power: High leakage; volatile (data is lost when power is removed).

- Use case: AI inference buffers, compute caches, and low-latency scratchpads.

SRAM remains indispensable for near-processor memory. However, as process nodes shrink, its static leakage becomes a major concern in battery-powered systems.

2. MRAM (Magnetoresistive RAM)

- Speed: Read latency approaches SRAM in some designs; writes are slower but improving.

- Power: Non-volatile; no refresh cycles.

- Endurance: High — up to 10¹⁵ cycles.

- Use case: persistent caches, AI weight storage, secure boot memory, and instant-on applications.

MRAM offers a bridge between volatile and non-volatile domains. It retains data through power loss and supports frequent updates, making it ideal for mission-critical embedded systems and AI models that need persistent state.

3. ReRAM (Resistive RAM)

- Speed: Moderate, depends on implementation (typically slower than MRAM).

- Power: Extremely low, non-volatile.

- Endurance: 10⁶–10⁹ cycles.

- Use case: inference weight storage, in-memory computing arrays, and neuromorphic crossbars.

ReRAM’s ability to represent analog conductance levels allows it to act as both storage and compute medium, merging memory with processing — a foundation for in-memory AI accelerators.
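The idea of using conductance as a compute medium can be sketched in a few lines of Python. This is an idealized model, not a description of any vendor's array: each cell's conductance stores a weight, input voltages drive the rows, and each column current is the sum of voltage times conductance (Ohm's law plus Kirchhoff's current law). The function name `crossbar_mvm` and the example values are hypothetical, and wire resistance, noise, and drift are ignored.

```python
def crossbar_mvm(conductances, voltages):
    """Ideal crossbar read: column current I_j = sum_i V_i * G[i][j].

    conductances: rows x cols matrix of cell conductances (arbitrary units)
    voltages: one input voltage per row (arbitrary units)
    """
    rows = len(conductances)
    if rows != len(voltages):
        raise ValueError("need one voltage per crossbar row")
    cols = len(conductances[0])
    return [
        sum(voltages[i] * conductances[i][j] for i in range(rows))
        for j in range(cols)
    ]


# Hypothetical 2x3 array: the conductances play the role of stored weights.
G = [[1.0, 2.0, 0.0],
     [0.5, 1.0, 3.0]]
V = [2.0, 4.0]

currents = crossbar_mvm(G, V)
print(currents)  # [4.0, 8.0, 12.0]
```

The whole matrix-vector product happens in one read step in the physical array, which is why the weights never have to travel to a separate processor.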

Why combine them?

No single memory technology can meet the demands of modern embedded systems. SRAM offers speed but not retention; MRAM offers persistence but with higher write power; ReRAM offers density but not throughput.

By combining all three, designers can build adaptive hierarchies where:

- SRAM handles fast access and temporary caching.

- MRAM manages mid-term retention and frequent writes.

- ReRAM provides long-term, energy-efficient storage or AI weight memory.

In effect, the memory system becomes workload-aware, choosing the right memory for the right task.

For instance, an edge AI sensor analyzing sound patterns can:

- Capture data in SRAM for immediate inference.

- Store intermediate weights and results in MRAM.

- Log historical context or model snapshots in ReRAM.

This flow minimizes energy use and maximizes endurance — all within a compact SoC footprint.
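The placement logic in this example can be sketched as a simple rule in Python. The `Buffer` fields, the `FREQUENT_WRITE_HZ` threshold, and the `choose_tier` function are assumptions made for illustration; a real controller would weigh more factors, such as capacity and latency targets.

```python
from dataclasses import dataclass
from enum import Enum


class Tier(Enum):
    SRAM = "SRAM"
    MRAM = "MRAM"
    RERAM = "ReRAM"


@dataclass
class Buffer:
    name: str
    must_survive_power_loss: bool
    writes_per_second: float


# Hypothetical threshold separating "frequent" from "occasional" writes.
FREQUENT_WRITE_HZ = 1.0


def choose_tier(buf):
    """Pick a memory tier from retention and write-rate needs."""
    if not buf.must_survive_power_loss:
        return Tier.SRAM      # fast, volatile scratch data
    if buf.writes_per_second >= FREQUENT_WRITE_HZ:
        return Tier.MRAM      # persistent and tolerant of frequent writes
    return Tier.RERAM         # persistent, rarely rewritten


buffers = [
    Buffer("audio_frames", must_survive_power_loss=False, writes_per_second=16000),
    Buffer("intermediate_results", must_survive_power_loss=True, writes_per_second=50),
    Buffer("model_snapshot", must_survive_power_loss=True, writes_per_second=0.001),
]
placement = {b.name: choose_tier(b) for b in buffers}
for name, tier in placement.items():
    print(f"{name} -> {tier.value}")
```

The rule checks volatility first because data that need not survive power loss gains nothing from a non-volatile tier, while the write-rate test keeps heavy write traffic away from the lower-endurance ReRAM.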

The rise of adaptive memory controllers

At the heart of these architectures lies the adaptive memory controller, a smart management layer that dynamically allocates workloads based on latency, endurance, and power constraints.

These controllers can:

- Adjust memory allocation based on AI model activity (e.g., keep MRAM active during inference and shift data to ReRAM when idle).

- Employ predictive caching to anticipate hot data blocks.

- Use AI-assisted policies to monitor wear levels and redistribute writes intelligently.

- Enable data tiering, similar to SSD-level caching — but inside a single SoC.
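As one illustration of redistributing writes, the Python sketch below uses a plain greedy rule (always write to the least-worn available block) rather than an AI-assisted policy. The `WearLeveler` class, its fields, and the four-block region are hypothetical and do not reflect any specific controller design.

```python
class WearLeveler:
    """Maps logical blocks to physical blocks, steering each write
    to the least-worn block that holds no other logical data."""

    def __init__(self, physical_blocks):
        self.wear = [0] * physical_blocks   # write count per physical block
        self.mapping = {}                   # logical block -> physical block
        self.store = {}                     # physical block -> payload

    def write(self, logical, payload):
        old = self.mapping.get(logical)
        # Blocks that hold other logical blocks' data are off limits.
        busy = {p for lb, p in self.mapping.items() if lb != logical}
        candidates = [p for p in range(len(self.wear)) if p not in busy]
        if not candidates:
            raise RuntimeError("no free physical block")
        target = min(candidates, key=lambda p: self.wear[p])
        if old is not None and old != target:
            del self.store[old]             # old copy is now stale
        self.store[target] = payload
        self.mapping[logical] = target
        self.wear[target] += 1
        return target

    def read(self, logical):
        return self.store[self.mapping[logical]]


leveler = WearLeveler(physical_blocks=4)
for sample in range(8):
    leveler.write(logical=0, payload=sample)

print(leveler.wear)      # [2, 2, 2, 2]
print(leveler.read(0))   # 7
```

Eight writes to one logical block leave each of the four physical blocks with two writes, instead of a single block absorbing all eight.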

Such systems are already emerging in research and early silicon from companies like TSMC, Everspin, Crossbar, and Weebit Nano, which are experimenting with mixed MRAM/ReRAM memory stacks for embedded AI.

Power and performance impact

The gains of hybrid memory architectures can be dramatic:

- Up to 80% reduction in idle energy by eliminating DRAM refreshes.

- 3× increase in write endurance through intelligent wear-leveling.

- 50% smaller system footprint versus discrete Flash + SRAM configurations.

- Instant-on recovery after power loss thanks to MRAM’s non-volatility.

In AI inference use cases, combining SRAM and ReRAM allows in-memory matrix multiplication with minimal data movement — cutting energy consumption by more than 90% compared to DRAM-based approaches.

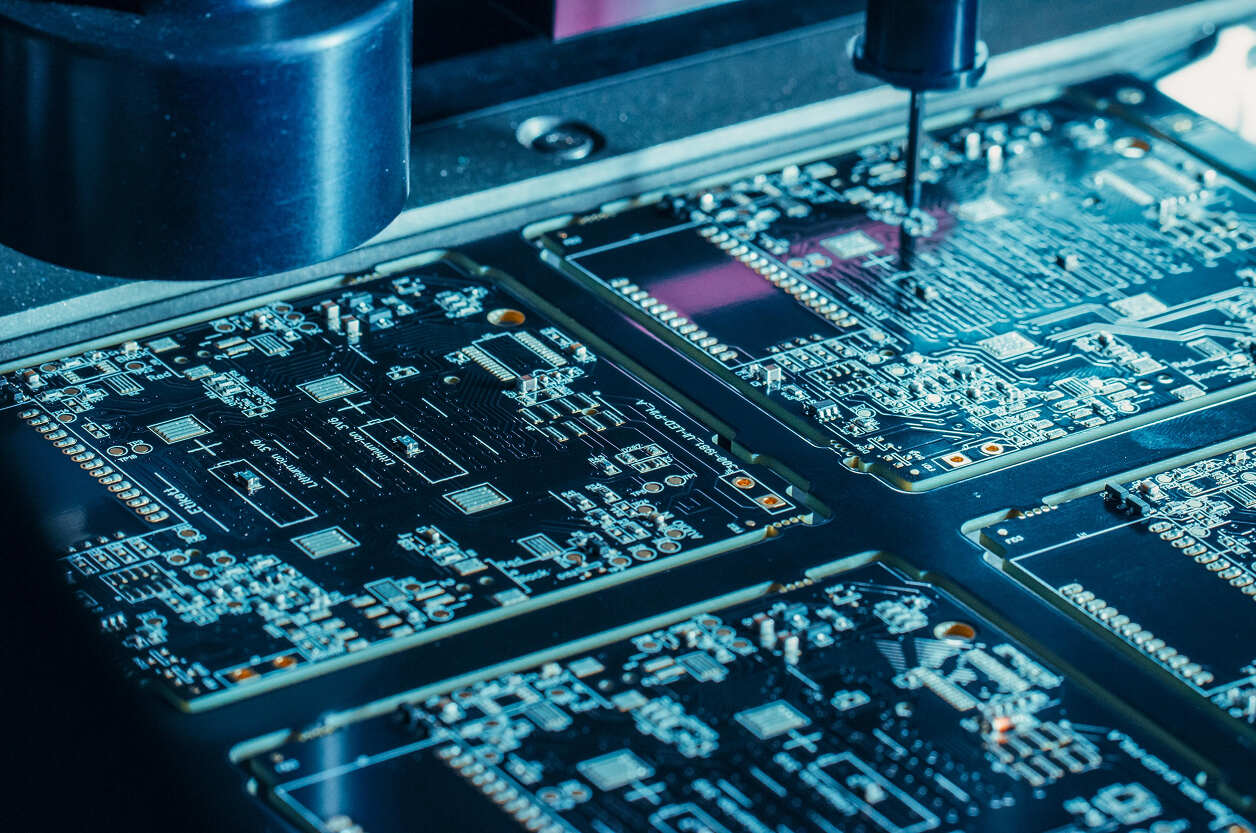

Real-world examples and prototypes

- Renesas and TSMC hybrid MCU: integrates embedded MRAM with SRAM for IoT and automotive applications, enabling instant wake-up and data persistence.

- Everspin MRAM + ReRAM demonstrator: combines non-volatile memories in layered stacks for mixed read/write workloads.

- IBM in-memory ReRAM arrays: used for analog neural network inference directly inside the memory cell matrix.

- CEA-Leti adaptive controllers: dynamically shift between MRAM and SRAM to optimize for temperature, load, and voltage conditions.

These systems show that heterogeneous memory integration is no longer theoretical: it is already shaping the next generation of embedded AI SoCs.

Applications across industries

- Automotive: adaptive memory reduces latency for driver monitoring and instant boot in ECUs.

- Industrial IoT: edge controllers maintain state through power interruptions.

- Healthcare wearables: store biometric trends locally without draining power.

- Consumer electronics: enable energy-efficient always-on experiences (voice, gesture, proximity).

- Aerospace and defense: non-volatile MRAM ensures data survival under radiation or power loss.

In all these applications, the combination of SRAM, MRAM, and ReRAM provides the right balance between speed, reliability, and efficiency.

Design challenges

Integrating multiple memory technologies in one SoC introduces complexity:

- Process compatibility: MRAM and ReRAM often require different fabrication steps.

- Thermal stability: ReRAM’s resistive states can drift with temperature.

- Write asymmetry: ReRAM and MRAM differ in write cost and endurance, so the controller must balance wear across them.

- Controller overhead: adaptive management logic adds design and verification cost.

Still, foundries are addressing these challenges with 3D stacking, BEOL integration, and unified design kits, making hybrid memories viable even at 22 nm and below.

Outlook: memory as a living system

By 2030, adaptive memory hierarchies will redefine how embedded systems think about storage. Instead of static layers (cache → RAM → Flash), future devices will treat memory as a fluid resource — dynamically morphing based on context, power state, and AI workload.

As MRAM and ReRAM mature, their integration with traditional SRAM will enable smart, self-optimizing memory fabrics that balance performance and persistence on the fly.

This isn’t just about new memory types — it’s about memory intelligence. The same AI models running on chips will help manage their own data flows, closing the loop between compute and storage.

The result: smarter, faster, and greener embedded systems, ready for the next decade of edge innovation.

AI Overview: Adaptive Memory Hierarchies

Adaptive Memory Hierarchies — Overview (2025)

Combining SRAM, MRAM, and ReRAM enables dynamic, power-efficient memory architectures tailored for edge AI and embedded systems. These hybrid hierarchies merge speed, persistence, and endurance to replace static DRAM-Flash designs.

- Key Applications: edge AI processors, automotive ECUs, industrial controllers, wearables, and low-power SoCs.

- Benefits: reduced power consumption, instant-on functionality, long data retention, and in-memory computing capability.

- Challenges: fabrication complexity, controller design, and endurance balancing.

- Outlook: by 2030, adaptive memory fabrics will become the foundation of energy-aware computing, where memory and logic cooperate for autonomous optimization.

- Related Terms: MRAM, ReRAM, in-memory computing, hybrid SoC design, non-volatile memory integration.
