Seeing the World Differently: How FPGA and Event-Based Vision Are Transforming Robotics

The Challenge of Real-Time Vision

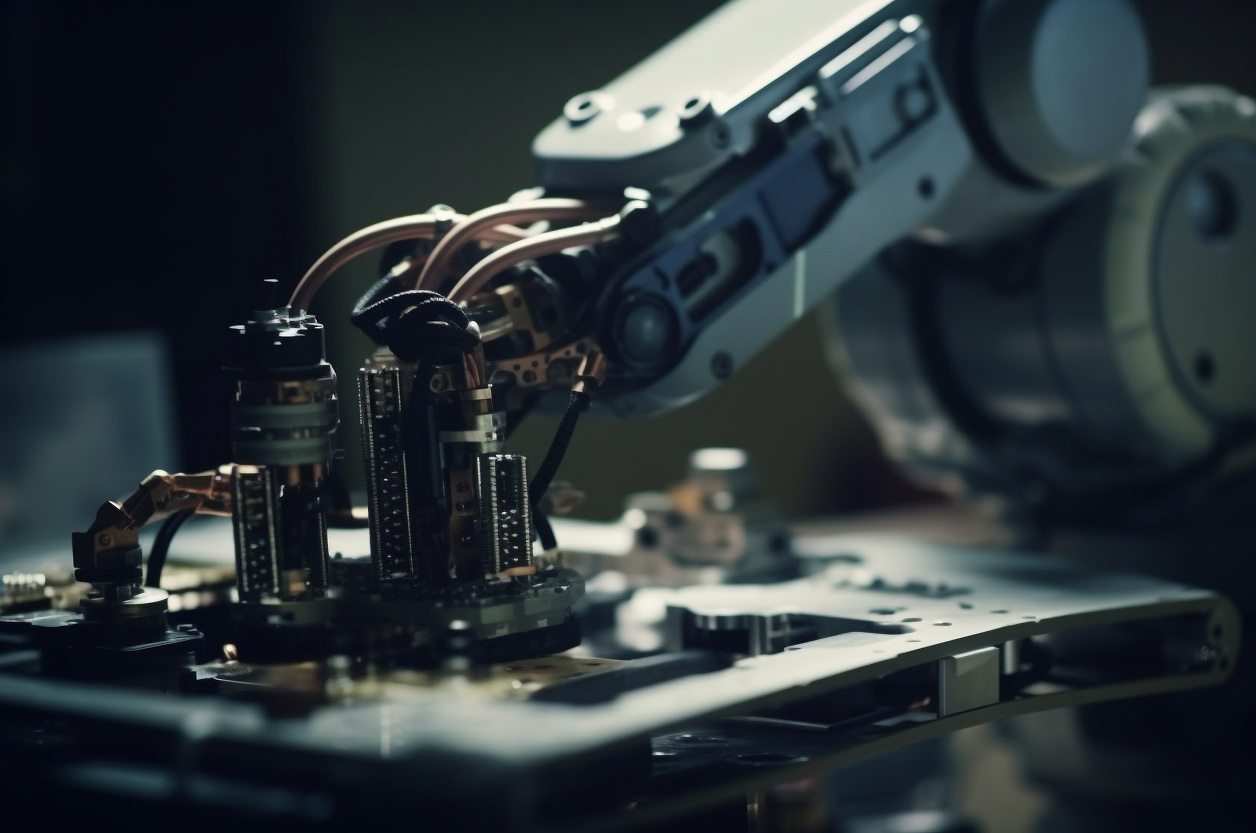

Modern robots can capture far more visual detail than the human eye, but they also have to react much faster.

Whether it’s a factory arm sorting parts or a drone navigating obstacles, every millisecond counts.

Conventional vision systems rely on frame-based cameras that capture entire images dozens of times per second. While effective, this approach generates massive data streams, most of which are redundant. A static background or steady light still produces full frames, wasting bandwidth and power.

The result? High latency, heavy computation, and a constant struggle to process data in real time.

To overcome these limitations, the industry is turning to a radically different approach — event-based vision powered by FPGAs.

From Frames to Events

Unlike traditional cameras that record frames at fixed intervals, event-based cameras capture changes — or “events” — in light intensity at the pixel level.

Each pixel operates independently and reports an event only when the brightness it sees changes by more than a set threshold, typically because something moved or the lighting changed.

Instead of 60 frames per second, you get a continuous, asynchronous stream of relevant visual information — much closer to how the human retina works.
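The per-pixel behavior can be made concrete with a minimal Python sketch that emulates an event sensor from a sequence of ordinary intensity frames. It assumes the common contrast-threshold model: a pixel fires when its log intensity has moved by at least a fixed threshold since its last event. The function name `frames_to_events`, the `Event` tuple, and the default threshold of 0.2 are illustrative assumptions, not any vendor's API. A real sensor is asynchronous and timestamps each event individually, whereas this emulation stamps every event in a frame step with that frame's time.

```python
import math
from typing import List, NamedTuple, Sequence


class Event(NamedTuple):
    x: int
    y: int
    t_us: int      # timestamp in microseconds
    polarity: int  # +1 = brighter, -1 = darker


def frames_to_events(frames: Sequence[Sequence[Sequence[float]]],
                     timestamps_us: Sequence[int],
                     threshold: float = 0.2,
                     eps: float = 1e-3) -> List[Event]:
    """Emulate an event sensor (hypothetical helper, contrast-threshold model).

    Each pixel stores a reference log intensity. When the current log
    intensity differs from it by at least `threshold`, the pixel emits one
    event per threshold crossing and moves its reference accordingly.
    Static pixels never cross the threshold, so they produce no events.
    """
    height, width = len(frames[0]), len(frames[0][0])
    ref = [[math.log(frames[0][y][x] + eps) for x in range(width)]
           for y in range(height)]
    events: List[Event] = []
    for frame, t in zip(frames[1:], timestamps_us[1:]):
        for y in range(height):
            for x in range(width):
                diff = math.log(frame[y][x] + eps) - ref[y][x]
                while abs(diff) >= threshold:
                    pol = 1 if diff > 0 else -1
                    events.append(Event(x, y, t, pol))
                    ref[y][x] += pol * threshold
                    diff -= pol * threshold
    return events


# A 1x3 strip: a bright spot moves from pixel 0 to pixel 1; pixel 2 stays dark.
frames = [[[1.0, 0.1, 0.1]],
          [[0.1, 1.0, 0.1]]]
events = frames_to_events(frames, timestamps_us=[0, 1000])
```

Only the two pixels whose brightness changed produce events (negative at pixel 0, positive at pixel 1), and a pair of identical frames yields an empty list.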

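Because only pixels that change produce data, the event model drastically reduces data volume whenever most of the scene is static. Events are also timestamped with microsecond resolution, so systems can react in microseconds rather than milliseconds, a game-changer for robotics, drones, and industrial automation.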
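A rough back-of-the-envelope comparison shows where the data savings come from and where they stop. The sketch assumes an uncompressed 8-bit VGA frame camera and 8 bytes per event. Real event formats differ between vendors and often pack events more tightly, so treat the numbers as illustrative. The event rates used (100 thousand and 10 million events per second) are hypothetical stand-ins for a quiet scene and a very busy one.

```python
def frame_bandwidth_bytes_per_s(width: int, height: int, fps: int,
                                bytes_per_pixel: int = 1) -> int:
    """Frame camera: every pixel is transmitted in every frame."""
    return width * height * bytes_per_pixel * fps


def event_bandwidth_bytes_per_s(event_rate_hz: int,
                                bytes_per_event: int = 8) -> int:
    """Event camera: only pixels that changed produce data.

    bytes_per_event = 8 is an assumed packing of x, y, timestamp, polarity.
    """
    return event_rate_hz * bytes_per_event


frame_bw = frame_bandwidth_bytes_per_s(640, 480, 60)   # 18,432,000 B/s
quiet_bw = event_bandwidth_bytes_per_s(100_000)        # 800,000 B/s
busy_bw = event_bandwidth_bytes_per_s(10_000_000)      # 80,000,000 B/s
print(f"frames: {frame_bw / 1e6:.1f} MB/s, quiet scene: {quiet_bw / 1e6:.1f} MB/s, "
      f"busy scene: {busy_bw / 1e6:.1f} MB/s")
```

In the quiet case the event stream is over 20 times smaller than the frame stream. A scene full of motion or flicker, however, can exceed the frame stream, which is one reason on-chip event filtering matters.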

Why FPGA is the Perfect Match

Event-based vision systems demand massive parallel processing, ultra-low latency, and energy efficiency — qualities that CPUs and GPUs often struggle to deliver simultaneously.

FPGAs (Field-Programmable Gate Arrays) are ideal for this task. They process multiple data streams in parallel, can be customized at the hardware level, and achieve real-time performance with minimal delay.

Key advantages include:

– Deterministic latency: predictable, consistent performance.

– Parallelism: thousands of simultaneous operations across logic blocks.

– Flexibility: reconfigurable logic for evolving algorithms and sensors.

– Energy efficiency: often lower power consumption than GPUs for equivalent throughput on streaming workloads.

In robotics, where timing and reliability are critical, these traits make FPGAs a natural hardware foundation for next-generation vision systems.

Inside the FPGA Vision Pipeline

An FPGA-based vision system typically consists of several processing layers:

– Sensor interface: handles raw event data from the neuromorphic camera.

– Event filtering: removes noise and aggregates signals from multiple pixels.

– Feature extraction: identifies edges, motion vectors, or spatial-temporal patterns.

– AI or rule-based logic: interprets events to trigger robotic actions.

– Output control: sends commands to motors, actuators, or higher-level controllers.
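One common approach for the event-filtering stage is a background-activity filter. Real motion tends to trigger neighboring pixels close together in time, while sensor noise produces isolated events. The Python sketch below is a software model of such a filter under that assumption. `background_activity_filter` and the 1 ms default window are illustrative choices, not a specific product's implementation. On an FPGA, the per-pixel timestamp map would typically sit in on-chip block RAM, and the eight neighbor checks would run in parallel rather than in a loop.

```python
from typing import Iterable, Iterator, List, NamedTuple, Optional


class Event(NamedTuple):
    x: int
    y: int
    t_us: int
    polarity: int


def background_activity_filter(events: Iterable[Event], width: int, height: int,
                               dt_us: int = 1000) -> Iterator[Event]:
    """Pass an event only if one of its 8 neighbors fired within the last dt_us."""
    # Last event timestamp per pixel (None = never fired).
    last: List[List[Optional[int]]] = [[None] * width for _ in range(height)]
    for ev in events:
        supported = False
        for dy in (-1, 0, 1):
            for dx in (-1, 0, 1):
                if dx == 0 and dy == 0:
                    continue  # the pixel itself does not count as support
                nx, ny = ev.x + dx, ev.y + dy
                if 0 <= nx < width and 0 <= ny < height:
                    t_prev = last[ny][nx]
                    if t_prev is not None and ev.t_us - t_prev <= dt_us:
                        supported = True
        last[ev.y][ev.x] = ev.t_us  # record this event for future neighbors
        if supported:
            yield ev


evs = [Event(5, 5, 0, 1), Event(6, 5, 100, 1), Event(0, 0, 50_000, -1)]
kept = list(background_activity_filter(evs, width=10, height=10))
```

Here only the second event survives: its neighbor at (5, 5) fired 100 µs earlier, while the first event and the isolated event at (0, 0) have no recent neighbors.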

Each stage operates concurrently, with data flowing directly through dedicated hardware paths.

This hardware-level parallelism can deliver microsecond-scale response times, which are essential for applications such as high-speed robot control and autonomous navigation.
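The latency figure follows from how pipelined hardware is timed. An event's latency is the total pipeline depth in clock cycles divided by the clock frequency. Throughput is set by how often a new event can enter the pipeline (the initiation interval, II). The stage depths and the 200 MHz clock below are hypothetical numbers chosen for illustration. Actual values depend on the design and device, and the figure excludes sensor readout and actuator response.

```python
from typing import Sequence


def pipeline_latency_ns(stage_cycles: Sequence[int], clock_mhz: float) -> float:
    """Latency of one event through the pipeline: total cycles / clock."""
    return sum(stage_cycles) * 1000.0 / clock_mhz


def pipeline_throughput_meps(clock_mhz: float, initiation_interval: int = 1) -> float:
    """Millions of events per second when a new event enters every II cycles."""
    return clock_mhz / initiation_interval


# Hypothetical depths (in cycles) for: sensor interface, filtering,
# feature extraction, decision logic, output control.
stages = [4, 6, 12, 20, 4]
latency = pipeline_latency_ns(stages, clock_mhz=200.0)   # 46 cycles -> 230.0 ns
throughput = pipeline_throughput_meps(200.0)             # 200.0 M events/s
```

Because the stages run concurrently, throughput depends on the II rather than on the total depth. Making a stage deeper adds latency but does not slow the stream.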

The Rise of Neuromorphic Sensors

Event-based cameras, also known as neuromorphic sensors, mimic biological eyes.

Instead of producing uniform frames, they encode intensity changes as spikes — similar to how neurons fire in response to stimuli.

This approach is not only fast but also efficient.

For static scenes, event activity drops almost to zero, conserving energy and bandwidth.

For dynamic environments, activity spikes in proportion to motion — ensuring high responsiveness exactly when it’s needed.

When paired with FPGA logic, these sensors can achieve end-to-end processing times below 1 millisecond. That is far faster than human reaction time, which is on the order of 200 milliseconds.

Why It Matters for Robotics

Robots operate in unpredictable environments. Their ability to perceive and act quickly determines both performance and safety.

Event-based vision combined with FPGA processing provides several key advantages:

– Instant reaction to motion: no waiting for the next frame.

– Low power consumption: crucial for drones and mobile robots.

– Robustness to difficult lighting: pixels respond to relative (logarithmic) brightness changes, giving a very high dynamic range.

– Compact and scalable design: fewer data bottlenecks in embedded systems.
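The "no waiting for the next frame" point can be quantified. If a change happens at a random moment, a frame camera cannot report it until its next exposure. That adds half a frame period on average and a full period in the worst case. This simple model ignores exposure and readout time, and the helper name is hypothetical.

```python
from typing import Tuple


def frame_sampling_delay_ms(fps: float) -> Tuple[float, float]:
    """Extra delay before a frame camera can observe a change.

    Assumes the change occurs at a uniformly random time within a frame
    period. Returns (average_delay_ms, worst_case_delay_ms).
    """
    period_ms = 1000.0 / fps
    return period_ms / 2, period_ms


for fps in (30, 60, 1000):
    avg, worst = frame_sampling_delay_ms(fps)
    print(f"{fps:>5} fps: average {avg:.2f} ms, worst case {worst:.2f} ms")
```

At 30 fps, this sampling delay alone averages about 17 ms. Event cameras typically timestamp events with microsecond resolution, so this component of the delay largely disappears.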

Imagine a robotic arm that detects a falling object midair, or a drone that avoids a fast-moving obstacle in real time. These scenarios require vision that responds within a millisecond or less, which is exactly what event-based FPGA systems are designed to deliver.

From Research Labs to Factory Floors

Once limited to academic prototypes, event-based cameras are now making their way into production.

In industrial automation, they detect defects on high-speed conveyor lines, measure micro-vibrations in rotating machinery, and track motion without blur.

In autonomous vehicles, they improve perception in low-light or high-contrast environments.

In drones, they enable ultra-fast navigation and stabilization without overloading onboard processors.

The shift is clear: what began as a niche neuromorphic concept is becoming an established engineering option for real-time robotics perception.

Hybrid Systems: Combining Events and Frames

Despite their advantages, event-based cameras don’t fully replace frame-based systems yet. Instead, the two are increasingly combined into hybrid vision architectures.

FPGAs play a crucial role here as well, handling sensor fusion in real time:

– Integrating events with frame data for spatial context.

– Running object recognition models on top of low-latency event streams.

– Balancing the strengths of both modalities — temporal resolution and image clarity.

This fusion provides a holistic view of the environment, enhancing reliability for robots that need both precision and speed.
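A basic building block of event/frame fusion is temporal alignment: grouping the events that arrived between two frame timestamps so they can be paired with the frame. The sketch below builds one signed event-count image per frame interval. It assumes both streams share a clock and a pixel grid, as in sensors that output frames and events from the same pixel array, and `events_per_frame` is a hypothetical helper name. Separate cameras would also need spatial calibration.

```python
from bisect import bisect_right
from typing import List, NamedTuple, Sequence


class Event(NamedTuple):
    x: int
    y: int
    t_us: int
    polarity: int


def events_per_frame(events: Sequence[Event], frame_times_us: Sequence[int],
                     width: int, height: int) -> List[List[List[int]]]:
    """Return one signed event-count image per interval [t_k, t_k+1).

    frame_times_us must be sorted. Events before the first frame, or at/after
    the last frame timestamp, fall outside every interval and are dropped.
    """
    n_intervals = len(frame_times_us) - 1
    images = [[[0] * width for _ in range(height)] for _ in range(n_intervals)]
    for ev in events:
        # Index of the last frame timestamp <= ev.t_us.
        k = bisect_right(frame_times_us, ev.t_us) - 1
        if 0 <= k < n_intervals:
            images[k][ev.y][ev.x] += ev.polarity
    return images


evs = [Event(0, 0, 500, 1), Event(0, 0, 700, 1),
       Event(1, 0, 1500, -1), Event(1, 0, 2500, 1)]
imgs = events_per_frame(evs, frame_times_us=[0, 1000, 2000], width=2, height=1)
```

One simple fusion option is to stack each count image with its frame as extra input channels for a recognition model. This combines the frame's spatial detail with the motion captured between frames.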

Software Stack and Toolchains

Developing FPGA-based vision pipelines requires specialized design flows.

High-Level Synthesis (HLS) tools now allow engineers to describe algorithms in C or C++ (and, in some newer flows, Python) and compile them into optimized hardware logic.

Programming models and libraries such as OpenCL, the AMD Vitis Vision library, and other OpenCV-style FPGA modules make it easier to integrate computer vision tasks, such as optical flow or motion detection, directly into programmable logic.

Combined with AI frameworks (TensorFlow Lite, PyTorch Edge), these tools bridge the gap between machine learning and embedded design — making it possible to build adaptive vision systems at scale.

Challenges in FPGA Vision Development

Despite rapid progress, implementing FPGA-based event vision systems still presents challenges:

– Complexity of development: hardware description requires specialized expertise.

– Data sparsity: event streams differ from conventional image formats, often demanding new data representations or AI architectures.

– Debugging difficulty: real-time data at microsecond scale is hard to visualize and analyze.

– Cost and power trade-offs: high-end FPGAs provide performance but may exceed small system budgets.
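One common way to handle sparsity is to convert the event stream into a dense representation that conventional models can consume. The sketch below computes an exponentially decaying time surface, in which each pixel's value reflects how recently it fired. The function name and the 50 ms default decay constant are illustrative assumptions. Other options include event-count frames, voxel grids, or spiking neural networks that consume events directly.

```python
import math
from typing import List, NamedTuple, Optional, Sequence


class Event(NamedTuple):
    x: int
    y: int
    t_us: int
    polarity: int


def time_surface(events: Sequence[Event], width: int, height: int,
                 t_now_us: int, tau_us: float = 50_000.0) -> List[List[float]]:
    """Dense 2D map: 1.0 where a pixel fired at t_now, decaying toward 0.0
    the longer a pixel has been silent. Pixels that never fired are 0.0."""
    last: List[List[Optional[int]]] = [[None] * width for _ in range(height)]
    for ev in events:
        if ev.t_us <= t_now_us:  # ignore events from the "future"
            prev = last[ev.y][ev.x]
            if prev is None or ev.t_us > prev:
                last[ev.y][ev.x] = ev.t_us
    return [[0.0 if t is None else math.exp(-(t_now_us - t) / tau_us)
             for t in row] for row in last]


evs = [Event(1, 0, 0, 1), Event(0, 0, 1000, -1)]
surface = time_surface(evs, width=3, height=1, t_now_us=1000, tau_us=1000.0)
```

The pixel that just fired scores 1.0, the pixel that fired one decay constant ago scores about 0.37, and the silent pixel stays at 0.0. The result is an ordinary 2D array that a conventional network can take as input.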

Overcoming these challenges requires collaboration between hardware, AI, and embedded software teams — exactly the kind of cross-domain integration Promwad specializes in.

Case Example: Real-Time Robotic Inspection

Imagine a high-speed assembly line where traditional cameras miss small defects due to motion blur.

An event camera paired with FPGA processing can detect subtle vibrations or misalignments while handling event rates that reach millions of events per second.

Each event is processed directly on-chip, classified as normal or abnormal, and immediately triggers a mechanical adjustment.

No external server, no network round trip, no lost data.

The result: faster inspection, higher yield, and reduced waste — all powered by embedded AI and FPGA acceleration.
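The source does not specify how each event is classified, so the following is only a hypothetical stand-in. It uses a sliding-window rule that flags an event as abnormal when too many events arrive in a region of interest (ROI) within a short window, as might happen when a part vibrates or slips. `rate_anomaly_detector`, the ROI, and the thresholds are all illustrative. A production system might use a trained model instead, and the abnormal flag would drive the mechanical adjustment.

```python
from collections import deque
from typing import Deque, Iterable, Iterator, NamedTuple, Tuple


class Event(NamedTuple):
    x: int
    y: int
    t_us: int
    polarity: int


def rate_anomaly_detector(events: Iterable[Event],
                          roi: Tuple[int, int, int, int],
                          window_us: int,
                          max_events: int) -> Iterator[Tuple[int, bool]]:
    """Yield (timestamp, is_abnormal) for every event inside the ROI.

    roi is (x0, y0, x1, y1) with exclusive upper bounds. Events must arrive
    in timestamp order. An event is abnormal when more than max_events ROI
    events (including itself) fall within the last window_us.
    """
    x0, y0, x1, y1 = roi
    recent: Deque[int] = deque()
    for ev in events:
        if not (x0 <= ev.x < x1 and y0 <= ev.y < y1):
            continue  # outside the inspected region
        recent.append(ev.t_us)
        # Drop timestamps that have slid out of the window.
        while recent and ev.t_us - recent[0] >= window_us:
            recent.popleft()
        yield ev.t_us, len(recent) > max_events


evs = [Event(1, 1, 0, 1), Event(2, 1, 100, 1), Event(3, 1, 200, -1),
       Event(50, 50, 250, 1), Event(4, 1, 300, 1), Event(5, 1, 5000, 1)]
flags = list(rate_anomaly_detector(evs, roi=(0, 0, 10, 10),
                                   window_us=1000, max_events=2))
```

The burst of ROI events at 200 µs and 300 µs is flagged, the event outside the ROI is ignored, and the isolated event at 5000 µs is classified as normal again.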

The Power of Local Intelligence

The big picture goes beyond cameras.

FPGA-powered perception is part of a broader trend — bringing intelligence to the edge.

As robots become more autonomous, central processing gives way to distributed cognition. Each sensor, actuator, and vision module gains its own “micro brain,” capable of understanding and reacting locally.

This modular intelligence makes robotic systems more robust, scalable, and adaptable to complex tasks.

What’s Next: Vision That Learns and Adapts

The next generation of event-based vision will integrate adaptive learning directly on hardware.

FPGAs with built-in AI engines will be able to update inference models on the fly, adapting to changing environments without cloud retraining.

Neuromorphic sensors will gain dynamic sensitivity control, mimicking the human eye’s ability to focus on relevant movement.

These advancements will enable robots that not only see but understand — interpreting depth, speed, and intent in real time.

Why It Matters for Industry

Industries are under pressure to automate faster, smarter, and safer.

FPGA-powered vision enables that shift by combining speed, precision, and energy efficiency.

From robotic welding to autonomous drones, from smart inspection to warehouse automation — event-based perception opens a new frontier for machines that act with near-human reflexes but superhuman consistency.

AI Overview

Key Applications: real-time robotics perception, industrial inspection, drone navigation, autonomous vehicles, and high-speed manufacturing systems.

Benefits: ultra-low latency, efficient data processing, energy savings, resilience to motion blur, and scalable embedded implementation.

Challenges: complex FPGA design, data sparsity in event formats, sensor calibration, and model portability.

Outlook: FPGA-powered event-based vision is redefining robotic perception — merging neuromorphic sensing with hardware acceleration for machines that see and think in real time.

Related Terms: embedded vision, neuromorphic camera, FPGA acceleration, edge AI, sensor fusion, low-latency processing.
