Embedded Systems Trends 2026: Chiplets, RISC-V Expansion, and Edge Generative AI

Embedded systems move fast, but 2026 brings a different kind of transition — not just faster silicon or new toolchains, but a shift in how devices are architected and how intelligence is deployed at the edge. The themes emerging across automotive, industrial automation, IoT, and consumer electronics are consistent: modular hardware, open architectures, and smarter devices that can run advanced models locally without depending on the cloud.

Below we explore the trends that will define the next generation of embedded systems — and the practical engineering realities behind them.

Many of the architectural shifts visible in 2026 build directly on trends that were already taking shape a year earlier. In 2025, embedded development focused on bringing AI into edge devices, strengthening IoT security, reducing power consumption, expanding edge computing, and exploring RISC-V as an alternative architecture. A snapshot of that phase appears in an overview of the top embedded systems trends for 2025, which captures an industry moving from incremental optimization toward more intelligent, connected devices. In 2026, those trends mature into platform-level architectural decisions: modular silicon, open instruction sets, and locally deployed generative AI.

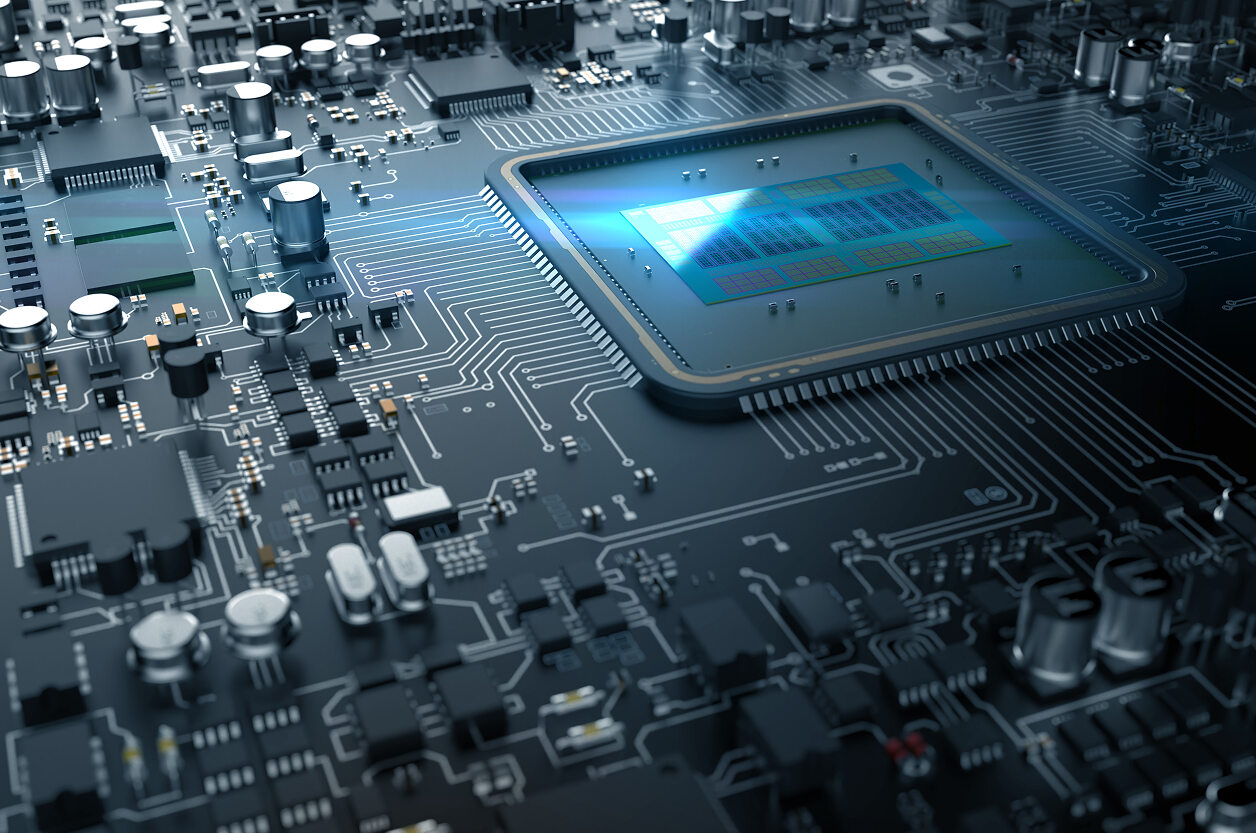

Chiplet-Based Embedded Architectures Become Mainstream

Chiplet architectures are finally moving from high-end server silicon into embedded systems. This shift is driven by the growing need to mix compute blocks — MCU cores, DSP units, NPUs, connectivity modules — without waiting for monolithic SoCs to catch up.

Instead of one large piece of silicon, engineers work with modular compute tiles that can evolve independently.

This is what makes chiplets relevant for embedded hardware in 2026: teams can scale performance and features while keeping cost, power, and supply chain planning predictable.

What makes chiplets practical for embedded systems?

- they reduce time-to-market because individual chiplets can be upgraded without redesigning the full SoC

- they support mixing low-power control cores with AI accelerators or DSP tiles

- they offer better thermal control in compact enclosures

- they introduce clearer migration paths when planning product families

Chiplets solve a real problem: device complexity is increasing, but redesign cycles cannot stretch into years. Modular silicon lets teams grow capabilities without resetting the entire hardware stack.

Long-tail questions teams ask in 2026

These already appear in technical searches and engineering discussions:

- how do chiplets impact embedded real-time scheduling?

- does chiplet interconnect latency break hard real-time guarantees?

- which embedded vendors are releasing chiplet-ready SoCs?

These questions indicate the transition is no longer conceptual — engineers are actively evaluating chiplet-based platforms for upcoming devices.
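
One way to approach the interconnect-latency question is classic fixed-priority response-time analysis. The sketch below is a simplified model, assuming each cross-chiplet access adds a fixed worst-case latency that can be folded into the task's WCET. The task set, the `remote_accesses` counts, and the latency values are hypothetical numbers chosen for illustration, not measurements of any real interconnect.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Task:
    name: str
    wcet_us: int          # worst-case execution time with local memory only
    period_us: int        # period; the deadline is assumed equal to the period
    remote_accesses: int  # hypothetical worst-case cross-chiplet accesses per job


def inflated_wcet(task: Task, link_latency_us: int) -> int:
    # Simplifying assumption: every remote access stalls the core for the full
    # worst-case interconnect latency, so it simply adds to the WCET.
    return task.wcet_us + task.remote_accesses * link_latency_us


def response_time(tasks: list[Task], index: int, link_latency_us: int) -> int | None:
    """Fixed-priority response-time analysis for tasks[index].

    `tasks` must be ordered from highest to lowest priority. Returns the
    worst-case response time in microseconds, or None on a deadline miss.
    """
    task = tasks[index]
    c_i = inflated_wcet(task, link_latency_us)
    r = c_i
    while True:
        # Interference from each higher-priority task j: ceil(r / T_j) * C_j.
        interference = sum(
            -(-r // hp.period_us) * inflated_wcet(hp, link_latency_us)
            for hp in tasks[:index]
        )
        r_next = c_i + interference
        if r_next > task.period_us:
            return None  # deadline miss: the task set is not schedulable
        if r_next == r:
            return r     # fixed point reached
        r = r_next


# Hypothetical task set in rate-monotonic order (shortest period first).
TASKS = [
    Task("control_loop", wcet_us=100, period_us=400, remote_accesses=2),
    Task("sensor_fusion", wcet_us=200, period_us=600, remote_accesses=4),
    Task("telemetry", wcet_us=300, period_us=1200, remote_accesses=5),
]

if __name__ == "__main__":
    for latency in (0, 10, 25):
        results = [response_time(TASKS, i, latency) for i in range(len(TASKS))]
        print(f"latency={latency} us -> {results}")
```

With these made-up numbers, a 10 µs worst-case penalty still leaves the lowest-priority task schedulable (1190 µs against a 1200 µs deadline). At 25 µs, it misses its deadline. The point is the method, not the values. A real analysis would use measured or vendor-specified worst-case interconnect latency and may need a more detailed contention model.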

RISC-V Expansion in 2026: From Curiosity to Core Architecture

RISC-V adoption accelerates sharply in 2026. The difference from earlier years is the maturity: we now see real, production-grade chips powering industrial controllers, consumer devices, and early automotive modules.

Vendor roadmaps reveal two strong directions:

- ultra-low-power RISC-V MCUs optimized for IoT and sensor nodes

- mid-range and high-performance designs with vector extensions for DSP and AI

This is the first time RISC-V offers a credible alternative to Arm not only in basic MCUs but across large parts of embedded portfolios.

Why RISC-V is gaining momentum now

- open ISA reduces long-term licensing exposure

- vendor-neutral toolchains make migration less risky

- rapid ecosystem growth improves RTOS, debugging, and ML support

- vector instruction extensions make RISC-V attractive for real-time AI workloads

For companies planning 7–10-year product lifecycles, such as those in automotive, industrial, and medical markets, this freedom from single-vendor licensing and roadmap risk is critical.

Common decision-making questions in 2026

- how to evaluate RISC-V vs Arm for long-lifecycle embedded products?

- is RISC-V ready for safety standards such as ISO 26262?

- what do vector extensions enable in AI/digital signal processing applications?

These reflect real engineering decisions around future-proofing architectures.
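
Much of what the vector extension offers DSP and AI code comes from its vector-length-agnostic programming model. A loop asks the hardware how many elements it may process (`vsetvl`), handles that many, and repeats. The Python below only models that strip-mining pattern for a dot product, which is the core of FIR filters and neural-network layers. Here, `vsetvl` is a hypothetical helper that returns `min(remaining, vlmax)`, which is always a legal result under RVV 1.0, and `vlmax` stands in for whatever the hardware provides.

```python
from __future__ import annotations


def vsetvl(remaining: int, vlmax: int) -> int:
    # Model of the vsetvl instruction: min(AVL, VLMAX) is always a legal result
    # under RVV 1.0, although real hardware may choose another legal value.
    return min(remaining, vlmax)


def dot_strip_mined(a: list[float], b: list[float], vlmax: int) -> float:
    """Dot product written as a vector-length-agnostic strip-mined loop."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    acc = 0.0
    i = 0
    while i < len(a):
        vl = vsetvl(len(a) - i, vlmax)
        # One "vector step": element-wise multiply across vl lanes, then reduce.
        acc += sum(x * y for x, y in zip(a[i:i + vl], b[i:i + vl]))
        i += vl
    return acc
```

Because the loop never hard-codes the vector width, the same code runs unchanged on cores with narrow or wide vector registers. In C, this pattern is usually written with the RVV intrinsics (the `__riscv_vsetvl_*` family) or left to the compiler's auto-vectorizer.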

Edge Generative AI Arrives on Embedded Hardware

The biggest functional shift in 2026 is edge generative AI. Device manufacturers no longer treat generative models as cloud-only functionality. Small, optimized models now run directly on NPUs, DSPs, hybrid SoCs, and even high-end MCUs.

This unlocks new capabilities: devices can interpret, generate, transform, and enhance data locally without relying on a network connection.
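
One of the most common optimizations behind these small models is quantization: weights are stored as 8-bit (or narrower) integers plus a scale factor instead of 32-bit floats. The following is a minimal sketch of symmetric per-tensor int8 quantization. Real toolchains typically use per-channel scales, calibration data, and integer-only kernels, so treat this as an illustration of the idea rather than any specific framework's scheme.

```python
from __future__ import annotations


def quantize_int8(weights: list[float]) -> tuple[list[int], float]:
    """Symmetric per-tensor int8 quantization: w is approximated by q * scale."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    # Map the largest magnitude to 127 so the range stays symmetric around zero.
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q: list[int], scale: float) -> list[float]:
    """Recover approximate float weights from int8 codes."""
    return [v * scale for v in q]
```

Going from 32-bit floats to 8-bit integers cuts weight storage by 4×, and 4-bit schemes halve it again. This can decide whether a model fits in on-chip memory at all.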

What edge generative AI enables in embedded devices

- speech enhancement and noise cleanup directly on MCUs or NPUs

- generative reconstruction for industrial sensors (e.g., filling gaps in noisy vibration signals)

- advanced anomaly detection models running in real time

- adaptive UI elements generated locally based on usage patterns

- low-light video enhancement directly inside smart cameras

Generative AI moves from the cloud to the field, reshaping how products behave in offline or low-bandwidth environments.
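
For the sensor-reconstruction item, a useful point of reference is the simplest "generative" approach: a classical autoregressive model. It fits coefficients on the intact parts of a signal, then generates the missing samples one step at a time. The sketch below uses a least-squares AR(2) fit in plain Python. It is a statistical stand-in, not a neural generative model, and a production system would use a model trained on real vibration data. It does, however, show the generate-to-fill structure that such models share.

```python
from __future__ import annotations

import math


def fit_ar2(x: list[float | None]) -> tuple[float, float]:
    """Least-squares fit of x[t] ~ a1*x[t-1] + a2*x[t-2] over fully known windows."""
    s11 = s12 = s22 = b1 = b2 = 0.0
    for t in range(2, len(x)):
        x0, x1, x2 = x[t], x[t - 1], x[t - 2]
        if x0 is None or x1 is None or x2 is None:
            continue  # skip any window that touches a gap
        s11 += x1 * x1
        s12 += x1 * x2
        s22 += x2 * x2
        b1 += x0 * x1
        b2 += x0 * x2
    # Solve the 2x2 normal equations with Cramer's rule.
    det = s11 * s22 - s12 * s12
    if abs(det) < 1e-12:
        raise ValueError("not enough independent samples to fit AR(2)")
    a1 = (b1 * s22 - b2 * s12) / det
    a2 = (s11 * b2 - s12 * b1) / det
    return a1, a2


def fill_gaps(x: list[float | None]) -> list[float]:
    """Replace None samples by generating them from the fitted AR(2) model."""
    a1, a2 = fit_ar2(x)
    out = list(x)
    for t, value in enumerate(out):
        if value is None:
            if t < 2:
                raise ValueError("a gap needs two known samples before it")
            # Earlier gap samples are already filled, so generation runs forward.
            out[t] = a1 * out[t - 1] + a2 * out[t - 2]
    return out


if __name__ == "__main__":
    w = 2 * math.pi * 0.05
    clean = [math.sin(w * t) for t in range(200)]
    damaged = [None if 100 <= t < 110 else v for t, v in enumerate(clean)]
    restored = fill_gaps(damaged)
    print(max(abs(r - c) for r, c in zip(restored, clean)))
```

A pure sinusoid satisfies an exact AR(2) recurrence, so the demo reconstructs it almost perfectly. Real vibration data with several resonances and noise needs a higher-order or learned model.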

Typical search questions around the trend

- how to deploy generative AI models on low-power embedded hardware?

- which NPUs support transformer-based inference at the edge?

- what is the minimum memory footprint for edge generative AI?

These are the questions product teams must answer as they plan new devices for 2026–2027.
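
A first-order answer to the memory-footprint question is simple arithmetic. Weight storage is the parameter count times bits per weight, and a transformer's key/value cache grows with layer count, context length, and key/value width. The functions below are a back-of-the-envelope sketch under those assumptions. They ignore activations, runtime buffers, and code size, and the model dimensions in the example are hypothetical.

```python
def weight_bytes(params: int, bits_per_weight: int) -> int:
    """Storage for the model weights at a given quantization width."""
    return params * bits_per_weight // 8


def kv_cache_bytes(n_layers: int, context_len: int, kv_dim: int,
                   bytes_per_value: int) -> int:
    # Factor 2: one key vector and one value vector per token per layer.
    return 2 * n_layers * context_len * kv_dim * bytes_per_value


def footprint_bytes(params: int, bits_per_weight: int, n_layers: int,
                    context_len: int, kv_dim: int, bytes_per_value: int) -> int:
    """Weights plus KV cache only; activations and runtime overhead excluded."""
    return (weight_bytes(params, bits_per_weight)
            + kv_cache_bytes(n_layers, context_len, kv_dim, bytes_per_value))


if __name__ == "__main__":
    # Hypothetical small model: 25M parameters, 4-bit weights, 6 layers,
    # 256-token context, 384-wide keys/values stored as int8.
    total = footprint_bytes(25_000_000, 4, 6, 256, 384, 1)
    print(total, f"{total / 2**20:.2f} MiB")
```

For this hypothetical configuration, the estimate is 13,679,648 bytes, roughly 13 MiB, of which the 4-bit weights account for 12.5 MB. At 16-bit weights, the same model would need 50 MB for weights alone.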

Hybrid Architectures: AI Acceleration Meets Real-Time Control

With chiplets and AI accelerators becoming common, embedded products increasingly rely on hybrid system designs:

- a real-time MCU or RTOS kernel handles timing, safety, and device control

- a Linux or POSIX subsystem runs AI models, connectivity, and multimedia

- NPUs and DSPs handle inference, compression, and sensor processing

- secure elements manage encryption, identities, and access control

- power domains adjust independently to limit consumption

This architecture is becoming the standard model for devices that require real-time determinism combined with AI-driven enhancement — from robotics and smart cameras to automotive HMIs and industrial gateways.

Developers designing these systems face new practical questions:

- how to guarantee timing predictability in mixed AI + RTOS architectures?

- how to isolate AI workloads without affecting control loops?

- how to ensure power efficiency across heterogeneous compute units?

These considerations shape the design process across multiple industries.
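
For the isolation question, a common pattern is to ensure the control loop never waits on the AI side. Inference results are published into a single-slot "latest value" mailbox, and the control loop consumes whatever is there, falling back to a safe default when the result is too old. The Python below models only that contract. On real hardware, the mailbox would live in shared memory or an inter-processor mailbox peripheral with proper atomicity. The class name, `max_age_ms`, and the fallback value are hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Result:
    value: float
    timestamp_ms: int


class LatestValueMailbox:
    """Single-slot mailbox: the AI side overwrites, the control side never blocks."""

    def __init__(self) -> None:
        self._slot: Result | None = None

    def publish(self, value: float, now_ms: int) -> None:
        # Overwrite instead of queueing, so results never pile up behind
        # a control loop that reads less often than inference produces.
        self._slot = Result(value, now_ms)

    def read_fresh(self, now_ms: int, max_age_ms: int, fallback: float) -> float:
        # Constant-time, non-blocking read suitable for a periodic control task.
        slot = self._slot
        if slot is None or now_ms - slot.timestamp_ms > max_age_ms:
            return fallback
        return slot.value
```

A FIFO queue would let results accumulate when the consumer falls behind, so the control loop could end up acting on stale data. Overwriting and checking age bounds both the cost of each read and the age of any AI input the control loop uses.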

Power Efficiency as a Design Priority — Not a Constraint

As AI workloads grow, power budgets remain strict. Devices in 2026 rely on a combination of:

- low-power AI modes

- event-driven wake-up logic

- chiplet-level power gating

- specialized accelerators tuned for transformer inference

- sensor-fusion pipelines optimized for partial computation

The industry’s direction is clear: more intelligence, but without compromising runtime or thermal stability.
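
The effect of event-driven wake-up and aggressive power gating is easiest to see in the standard duty-cycle average, where average power is the time-weighted mix of active and sleep power. The numbers in the example are hypothetical. They were chosen only to show how strongly the active burst length dominates battery life.

```python
def average_power_mw(active_mw: float, active_ms: float,
                     sleep_mw: float, period_ms: float) -> float:
    """Time-weighted average power for one wake/sleep cycle."""
    if not 0 <= active_ms <= period_ms:
        raise ValueError("active time must fit inside the period")
    sleep_ms = period_ms - active_ms
    return (active_mw * active_ms + sleep_mw * sleep_ms) / period_ms


def battery_life_hours(capacity_mah: float, voltage_v: float, avg_mw: float) -> float:
    # Ideal estimate (mWh / mW = h); ignores regulator losses,
    # self-discharge, and temperature effects.
    return capacity_mah * voltage_v / avg_mw


if __name__ == "__main__":
    # Hypothetical profile: a 120 mW inference burst lasting 50 ms once per
    # second, with 0.05 mW in deep sleep, on a 1000 mAh, 3.7 V battery.
    avg = average_power_mw(120, 50, 0.05, 1000)
    print(f"{avg:.4f} mW, {battery_life_hours(1000, 3.7, avg):.0f} h")
```

In this profile, the 50 ms active burst accounts for about 99% of the energy. Shortening or skipping inference runs therefore pays off far more than shaving sleep current.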

Looking Ahead: What 2027–2028 May Bring

Based on current roadmaps, several trends are already forming:

- chiplet marketplaces enabling configurable SoCs for embedded manufacturers

- automotive-ready RISC-V families supporting functional safety from the start

- sub-50 mW transformer models optimized for wearables

- tighter unification between MCU firmware, NPU runtimes, and ML compilers

- embedded systems shifting toward “AI-priority” rather than “AI-optional” designs

These moves reflect a broader shift: embedded systems are becoming platform-level ecosystems, not standalone boards or microcontrollers.

AI Overview

Embedded systems in 2026 evolve around three major pillars: chiplet-based architectures that make hardware more modular, a maturing RISC-V ecosystem moving into automotive and industrial markets, and the emergence of edge generative AI capable of running directly on compact SoCs and NPUs. These shifts redefine how developers design devices, enabling more flexible hardware planning, stronger long-term lifecycle management, and more intelligent on-device processing. The direction for 2026 is clear: embedded devices become smarter, more modular, and far more capable at the edge.
