The Future of AV-over-IP: Key Technologies Driving the Industry Forward

Head of Video Streaming Unit at Promwad

The transition from traditional AV connections to Ethernet and IP-based networking is revolutionising the multimedia and broadcast industry. This shift unlocks greater scalability, flexibility, and remote management, helping media systems adapt to modern production demands.

Though established standards like HDMI, DisplayPort, and SDI remain essential for endpoints, AV-over-IP solutions (ST 2110, IPMX, NDI and Dante) are gaining traction — driving interoperability, efficiency, and cost savings.

Key advantages include:

- Cost reduction. Cabling and installation expenses can drop by up to 50% (Jupiter Research).

- Remote workflows. Cloud-compatible production becomes seamless.

- Future readiness. Open standards ensure long-term adaptability.

However, with competing protocols (ST 2110, IPMX, NDI, Dante), choosing the right technology requires careful evaluation. This guide explores critical standards, practical tradeoffs, and implementation strategies to align your AV infrastructure with business objectives.

The Evolution of AV Standards

From Baseband AV to IP-Based Media Transport

Despite the rise of AV-over-IP, traditional baseband AV connections are still widely used, particularly at endpoints. HDMI and DisplayPort continue to evolve, supporting higher resolutions and advanced formats:

- HDMI 2.1 enables 8K UHD video and remains a dominant standard in consumer electronics.

- DisplayPort 1.4 & 2.1 offer high-resolution support, commonly used in desktop monitors and computing setups.

- SDI (Serial Digital Interface) remains the standard in broadcasting, providing reliable point-to-point connections for cameras, monitors, and other equipment. The different generations of SDI are 3G-SDI (bandwidth of 2.97 Gbps, 1080p video at 60 fps), 6G-SDI (bandwidth up to 5.94 Gbps, 4K video at 30 fps) and 12G-SDI (data rates up to 11.88 Gbps, supporting 4K video at 60 fps).

However, as production workflows become more complex and shift into the cloud, these traditional standards struggle to meet the demands of scalability, flexibility, and remote operation, paving the way for AV-over-IP solutions.

Ethernet: The Backbone of AV-over-IP

Ethernet has emerged as the preferred transport for professional AV applications, offering:

- Scalability – Supports various data rates, accommodating different video quality requirements.

- Flexibility – Allows multiple audio and video streams over a single network.

- Standardisation – Ensures interoperability between different vendors and systems.

To meet diverse AV transport needs, designers have multiple implementation options:

1. Integrated Ethernet ports

Many modern processors and system-on-chip (SoC) solutions come with built-in Ethernet, reducing complexity. 10G, 25G, and 100G Ethernet are high-speed network standards designed for data-intensive applications such as video production, broadcasting, and cloud-based media workflows. Choosing the proper Ethernet standard depends on video resolution, bit depth, chroma subsampling, and real-time processing needs in modern media infrastructures.

10G Ethernet (10 Gbps) is commonly used in professional video production but has limitations for uncompressed 4K workflows. It supports uncompressed 1080p60 10-bit 4:2:2 video (~3 Gbps), but uncompressed 4K60 (~12 Gbps) exceeds its capacity, requiring compression or dual-link connections.

25G Ethernet (25 Gbps) provides higher bandwidth, allowing uncompressed 4K60 10-bit 4:2:2 video (~12 Gbps) to be transmitted reliably with headroom for metadata and additional streams. It is also suitable for 8K30 10-bit 4:2:2 (~24 Gbps) workflows.

100G Ethernet (100 Gbps) is essential for uncompressed 8K60 video (~48 Gbps for 10-bit 4:2:2, ~96 Gbps for 12-bit 4:4:4), offering ample bandwidth for multi-stream workflows, high-frame-rate video, and real-time uncompressed processing in high-end broadcasting and post-production environments.
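As a rough cross-check of the link sizing above, the sketch below estimates the active-picture bit rate of an uncompressed stream from resolution, frame rate, bit depth, and chroma subsampling. It counts picture samples only, so its results come out lower than the rounded figures quoted in the text, which presumably allow for blanking or transport overhead. The function names and the 90% usable-capacity threshold are illustrative assumptions, not part of any standard.

```python
def active_video_gbps(width, height, fps, bit_depth, chroma="4:2:2"):
    """Active-picture bit rate in Gbps (no blanking, no packet overhead)."""
    # Average samples per pixel: one luma sample plus the chroma share.
    samples_per_pixel = {"4:2:0": 1.5, "4:2:2": 2.0, "4:4:4": 3.0}[chroma]
    bits_per_second = width * height * fps * bit_depth * samples_per_pixel
    return bits_per_second / 1e9


def smallest_link(gbps, links=(10, 25, 100), headroom=0.9):
    """Return the first link speed (Gbps) that fits the stream, else None.

    `headroom` is an assumed usable fraction of the nominal link rate.
    """
    for link in links:
        if gbps <= link * headroom:
            return link
    return None


formats = {
    "1080p60 10-bit 4:2:2": (1920, 1080, 60, 10),
    "4K60 10-bit 4:2:2": (3840, 2160, 60, 10),
    "8K30 10-bit 4:2:2": (7680, 4320, 30, 10),
    "8K60 10-bit 4:2:2": (7680, 4320, 60, 10),
}
for name, args in formats.items():
    rate = active_video_gbps(*args)
    print(f"{name}: {rate:.2f} Gbps active video, fits {smallest_link(rate)}G")
```

Even on this more optimistic active-video basis, a single 4K60 stream does not fit a 10G link once headroom is reserved, which matches the guidance above.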

2. Programmable Logic (PL) Integration

Adaptive SoCs and FPGAs can handle custom configurations and high data rates. When implementing Ethernet-based AV transport, key considerations include network bandwidth, latency control (QoS), and optimal network topology to prevent congestion. These are addressed by the IEEE 802.1Q (VLAN & QoS), IEEE 802.1BA (AVB – Audio Video Bridging), and SMPTE ST 2110 (Professional Media over IP) standards.

AMD FPGAs are reconfigurable semiconductor devices that enable custom hardware acceleration across multiple industries.

Their application areas include broadcast and pro AV, thanks to ultra-low-latency 4K video processing, IP media gateways (ST 2110/IPMX), and compression (HEVC).

Advantages:

- High performance – 7nm/5nm Versal ACAPs combine FPGA logic with AI Engines and scalar processors.

- Nanosecond-level response for real-time systems.

- 30-50% lower power vs. GPUs for targeted acceleration.

- In-field reconfiguration for future-proof designs.

NDI: Simplifying Professional AV Workflows

NDI® (Network Device Interface) is a widely used AV-over-IP protocol designed for live production, streaming, and broadcast workflows. It streamlines IP-based video transport by automating device discovery and simplifying connectivity.

Optimising Bandwidth with NDI

NDI offers multiple formats to balance bandwidth efficiency and video quality:

- NDI High Bandwidth – Uses SpeedHQ (SHQ) for broadcast-quality video.

- NDI|HX – Uses H.264/H.265 compression, reducing bandwidth usage by 90% compared to SHQ.

- NDI|HX3 – A hybrid solution offering near-SHQ quality at 80 Mbps.

These formats allow multiple high-quality streams to operate efficiently over a 1 Gbps network with low latency.
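To make the bandwidth trade-off concrete, here is a minimal sketch that estimates how many streams fit on a 1 Gbps link. The 80 Mbps figure and the 90% reduction come from the list above; the 150 Mbps High Bandwidth bitrate and the 75% usable-capacity share are assumptions chosen only for illustration, since real bitrates depend on resolution and frame rate.

```python
def streams_per_link(stream_mbps, link_mbps=1000, usable_percent=75):
    """Number of whole streams that fit in the usable share of a link."""
    usable_mbps = link_mbps * usable_percent // 100
    return int(usable_mbps // stream_mbps)


shq_mbps = 150                          # assumed High Bandwidth bitrate
hx_mbps = shq_mbps * (100 - 90) / 100   # 90% lower than SHQ, per the list above
hybrid_mbps = 80                        # figure quoted for the hybrid format

for name, mbps in [("High Bandwidth", shq_mbps), ("HX", hx_mbps), ("Hybrid", hybrid_mbps)]:
    print(f"{name}: {mbps:g} Mbps per stream, {streams_per_link(mbps)} streams on 1 Gbps")
```

Under these assumptions, the compressed formats raise the stream count on the same link from a handful to several dozen.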

Beyond Video: NDI’s Additional Features

NDI doesn’t just carry audio and video; it supports bi-directional data transfer, enabling:

- Tally indicators to show which cameras are live.

- PTZ (pan-tilt-zoom) controls for remote camera operation.

- Audio return channels for real-time communication.

With NDI tools and software integrations (such as Adobe Premiere and After Effects), this protocol has become a go-to solution for professionals seeking a scalable, software-based AV-over-IP workflow. Many professional PTZ camera brands, including Panasonic, Sony, BirdDog, PTZOptics, and NewTek, provide native NDI support or models with NDI|HX (compressed NDI) capabilities.

ST 2110 & IPMX: The New Standards for Broadcast and Pro AV

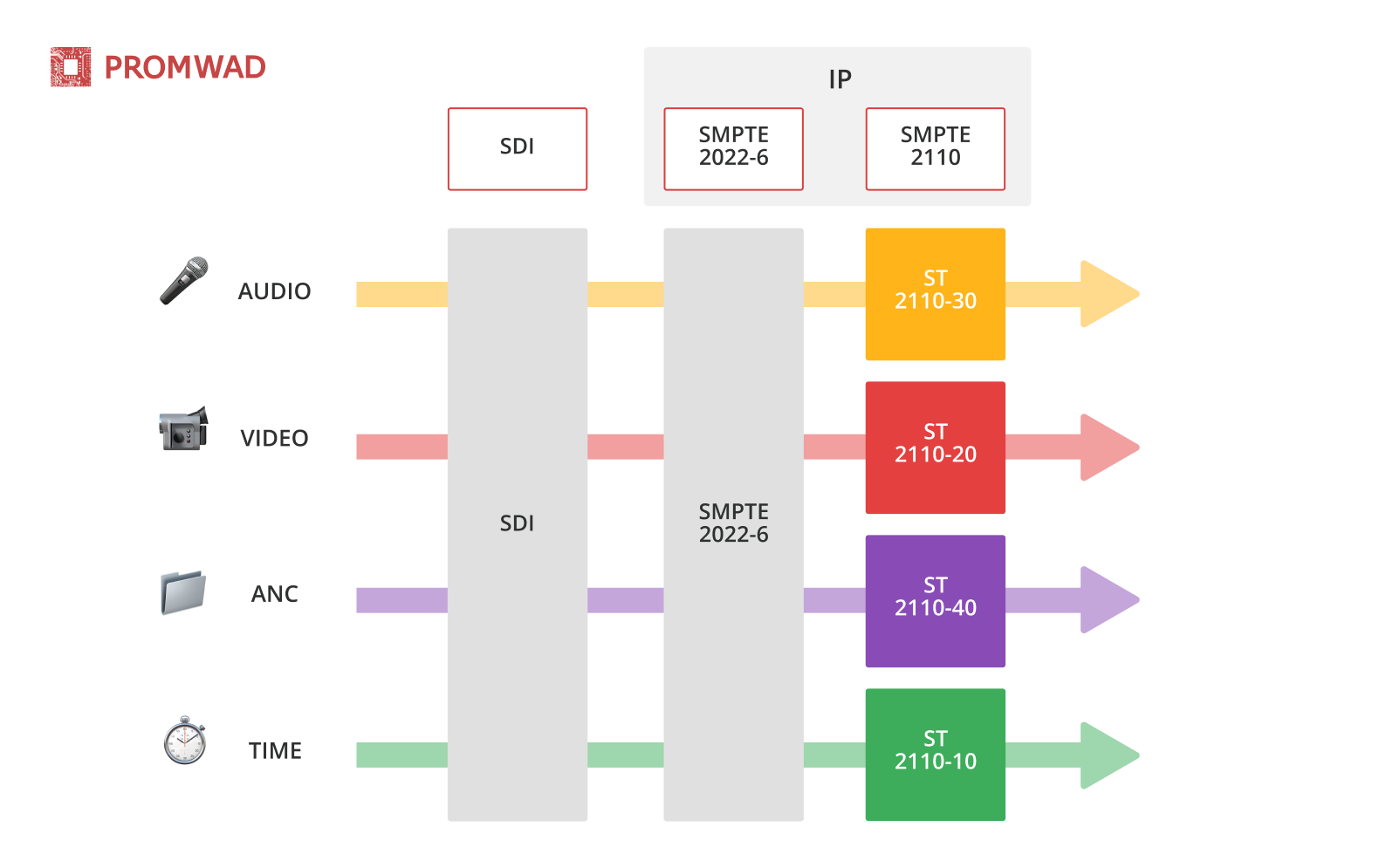

ST 2110: Transforming Broadcast Media Transport

The SMPTE ST 2110 standard is now the industry benchmark for broadcast AV-over-IP solutions. It enables the independent transmission of audio, video, and metadata over a network, ensuring:

- Greater flexibility in routing and signal processing.

- Precision timing with IEEE 1588 PTP (Precision Time Protocol).

- Scalability for future-proof media production workflows.

System management is facilitated by Networked Media Open Specifications (NMOS).

Key parts of the SMPTE ST 2110 standard and their roles

- SMPTE ST 2110-10 – system timing & synchronisation

Defines how different streams (video, audio, metadata) synchronise over an IP network. PTP (Precision Time Protocol, IEEE 1588-2008) ensures frame-accurate synchronisation.

- SMPTE ST 2110-20 – uncompressed video transport

Specifies the transport of high-bitrate uncompressed video over IP networks. Supports 4:2:2 and 4:4:4 chroma subsampling, with resolutions up to UHD/4K and beyond. It uses the Real-time Transport Protocol (RTP) for data encapsulation.

Comparison between SMPTE 2110, SDI, and SMPTE 2022-6

- SMPTE ST 2110-21 – traffic shaping & buffering for video

Establishes packet timing and buffering rules to prevent jitter and congestion in IP networks. Defines sender timing types (narrow, narrow linear, and wide) that bound how bursty a video stream may be, so that receivers can size their buffers accordingly.

- SMPTE ST 2110-22 – compressed video transport (optional)

Allows compressed video formats (e.g., JPEG XS) to reduce bandwidth while maintaining low latency. Useful for high-resolution workflows that require lower network load.

- SMPTE ST 2110-30 – audio transport

Defines how PCM-encoded audio (AES67-compatible) is transmitted as separate streams. Enables ultra-low latency, synchronised multi-channel audio transport.

- SMPTE ST 2110-31 – AES3 transparent audio transport

Supports non-PCM audio formats, such as Dolby E, over IP networks. Enables transmission of metadata-rich audio for professional broadcasting.

- SMPTE ST 2110-40 – ancillary data transport

Covers the transport of metadata, captions, subtitles, and timecode over separate streams. Ensures compatibility with traditional SDI workflows by supporting SMPTE ST 291 metadata.
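Since every ST 2110 essence stream is carried in RTP, a small sketch of the RTP fixed header may help illustrate what a sender emits per packet. It packs and parses only the 12-byte header defined in RFC 3550 and derives a wrapping 32-bit timestamp from a media-clock time at 90 kHz, the clock rate commonly used for video. The ST 2110-20 payload header (line numbers, offsets, extended sequence number) is deliberately not modelled, and the payload type 96 and SSRC value are arbitrary illustrative choices.

```python
import struct

RTP_VERSION = 2
VIDEO_CLOCK_HZ = 90_000  # RTP clock rate commonly used for video


def pack_rtp_header(payload_type, sequence, timestamp, ssrc, marker=False):
    """Pack the 12-byte fixed RTP header (no CSRC list, no extension)."""
    byte0 = RTP_VERSION << 6  # V=2, P=0, X=0, CC=0
    byte1 = (int(marker) << 7) | (payload_type & 0x7F)
    return struct.pack("!BBHII", byte0, byte1, sequence & 0xFFFF,
                       timestamp & 0xFFFFFFFF, ssrc & 0xFFFFFFFF)


def unpack_rtp_header(data):
    """Parse the fixed RTP header from the first 12 bytes of a packet."""
    byte0, byte1, sequence, timestamp, ssrc = struct.unpack("!BBHII", data[:12])
    return {
        "version": byte0 >> 6,
        "marker": bool(byte1 >> 7),
        "payload_type": byte1 & 0x7F,
        "sequence": sequence,
        "timestamp": timestamp,
        "ssrc": ssrc,
    }


def rtp_timestamp(seconds, clock_hz=VIDEO_CLOCK_HZ):
    """Map a media-clock time in seconds to a wrapping 32-bit RTP timestamp."""
    return int(seconds * clock_hz) % 2**32


header = pack_rtp_header(96, sequence=1, timestamp=rtp_timestamp(1.0),
                         ssrc=0x1234, marker=True)
print(header.hex())
print(unpack_rtp_header(header))
```

In a real system the `seconds` value would come from the PTP-locked media clock rather than from a constant, so that all senders stamp packets against the same timebase.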

This standard is widely supported by major industry associations such as AIMS, SMPTE, EBU, AMWA, and VSF, ensuring broad interoperability and adoption in broadcast facilities.

IPMX: Bringing ST 2110 to Pro AV

IPMX (Internet Protocol Media Experience) is an open standard developed to address the specific needs of the professional AV industry, building upon the foundation of SMPTE ST 2110, which is primarily designed for broadcast environments. While both standards aim to facilitate media transport over IP networks, they differ in several key areas to accommodate the distinct requirements of their respective industries.

Key differences between IPMX and SMPTE ST 2110:

- IPMX is an extension of ST 2110 tailored for the professional AV industry, addressing the specific needs of environments like corporate spaces, educational institutions, and live events.

- IPMX offers optional PTP synchronisation, accommodating both synchronous and asynchronous sources, which benefits diverse AV environments.

- IPMX is designed with enhanced interoperability, leveraging open standards and profiles to ensure seamless integration across various AV equipment and applications.

- IPMX incorporates comprehensive control and management features, including NMOS-based device discovery and connection management, stream compatibility, and support for HDMI Info Frames, facilitating easier system integration and operation.

- IPMX introduces the Privacy Encryption Protocol (PEP), which supports HDCP-compliant key exchange for secure content delivery and addresses the need for content protection in AV environments.

In summary, while SMPTE ST 2110 serves as a robust standard for broadcast media transport, IPMX extends its capabilities to meet the specific needs of the professional AV industry, incorporating features like compression support, HDMI compatibility, and flexible synchronisation options to facilitate seamless integration across diverse AV environments.

IPMX Standard Components Breakdown

| Component | Role in IPMX | Key features | Use case |
| --- | --- | --- | --- |
| ST 2110 | Media transport foundation | Uncompressed/compressed video streams; precision timing (PTP); interoperability with AES67-compliant audio devices; ancillary data support | 4K OB vans, IP-based studios |
| NMOS | Device management & control | Device discovery, registration, and connection management; open APIs for system configuration and control | Automated routing, multi-vendor systems |
| HDCP, PEP | Security & content protection | Secure transmission of protected content over IP networks; secure multicast and unicast media distribution; HDCP 2.3 compliance | Live events, corporate boardroom setup |
| HDMI | Consumer device integration | Integration with consumer-grade equipment; HDMI 2.0/2.1 support | Conference or educational rooms, video walls |
| USB & GPIO support | Control interfaces | Extended control capabilities | Live event production |

Case Studies

NMOS integration in general purpose system with Web API

A video capture and playback device is an example of a development that could benefit from NMOS-based data exchange. Source: AVer Media

We developed a Web API-based system that needed to support SMPTE ST 2110-compliant control through NMOS. To build the NMOS node for our system, our engineers utilised nmos-cpp, an open-source library licensed under Apache 2.0. Then, we implemented platform-specific code for authorisation and integration with our Web API.

When retrieving a list of active streams, the process involves the following steps:

- The user application sends a request to the NMOS Node running on the device.

- The NMOS API processes the request, fetching the relevant data from the Web API.

- The response travels back along the same path to the user application.

This method enables seamless integration of NMOS into existing infrastructures, facilitating efficient communication between devices and NMOS-based systems.
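The client side of the request flow above can be sketched as follows. This is not the nmos-cpp code used in the project (that library is C++); it is a minimal illustration, assuming the device exposes the IS-04 Node API under the usual `/x-nmos/node/<version>/senders` path and that each sender resource carries a `subscription.active` flag. The host address, API version, and sample response body are hypothetical.

```python
import json


def node_senders_url(host, port=80, api_version="v1.3"):
    """URL of the IS-04 Node API senders collection on a device."""
    return f"http://{host}:{port}/x-nmos/node/{api_version}/senders"


def active_senders(senders_json):
    """Return (id, label) pairs for senders whose subscription is active."""
    senders = json.loads(senders_json)
    return [
        (s["id"], s.get("label", ""))
        for s in senders
        if (s.get("subscription") or {}).get("active", False)
    ]


# Hypothetical response body, trimmed to the fields used here.
sample_response = json.dumps([
    {"id": "a1", "label": "Camera 1",
     "subscription": {"receiver_id": None, "active": True}},
    {"id": "b2", "label": "Camera 2",
     "subscription": {"receiver_id": None, "active": False}},
])

print(node_senders_url("192.0.2.10"))
print(active_senders(sample_response))
```

In a deployment, the response body would be fetched over HTTP from the NMOS Node, which in turn obtains the data from the device's own Web API as described in the steps above.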

Designing software for a PRO camera within an NMOS-enabled networked media system

A professional NMOS-enabled camera must support several key features and standards to ensure full interoperability within an NMOS ecosystem.

We ensured support for the following:

- NMOS standards: IS-04 (discovery), IS-05 (connection management), and IS-07 (event/tally).

- Remote camera control for resolution, exposure, framerate, sensor parameters, etc.

- Secure communication with authentication and authorisation.

- RESTful Web API, compliant with NMOS for device management.

- PTP time synchronisation for accurate stream timing.

Virtual audio mixer


We created a cross-platform Qt-based application that is compatible with macOS, Windows, and Linux.

What our solution does:

- Mixes 6 inputs to 4 outputs across 4 buses for versatile audio source mixing. IP calls, radio, music, and video can be mixed and sent to various audio outputs.

- Supports 8 mono microphones, 2 stereo hardware channels, and 12 stereo virtual channels simultaneously.

- Compatible with pro audio DAWs and multiple audio interfaces: MME, WaveRT, WASAPI, DirectX, KS, and ASIO.

- Enables connections to musical instruments and supports VST/VST3 plugins.
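The 6-input to 4-output routing described above is, at its core, a gain matrix applied to each frame of samples. The sketch below shows that idea in plain Python under simplifying assumptions: one sample per input per frame, linear gains, and hard clipping to the range -1.0 to 1.0. The gain values and function names are illustrative and do not reflect the actual application.

```python
def clip(sample, limit=1.0):
    """Hard-limit a sample to the range [-limit, limit]."""
    return max(-limit, min(limit, sample))


def mix(inputs, gains):
    """Mix one frame: `inputs` holds N samples, `gains` holds M rows of N gains."""
    return [clip(sum(g * x for g, x in zip(row, inputs))) for row in gains]


# Illustrative routing: 6 inputs (columns) to 4 output buses (rows).
gains = [
    [1.0, 1.0, 0.0, 0.0, 0.0, 0.0],  # bus 1: inputs 1 and 2
    [0.0, 0.0, 1.0, 1.0, 0.0, 0.0],  # bus 2: inputs 3 and 4
    [0.0, 0.0, 0.0, 0.0, 0.5, 0.5],  # bus 3: inputs 5 and 6 at half gain
    [0.5, 0.0, 0.0, 0.0, 0.0, 0.5],  # bus 4: inputs 1 and 6 at half gain
]
frame = [0.25, 0.25, 0.5, 0.0, 1.0, 0.0]
print(mix(frame, gains))
```

A production mixer would process blocks of samples with vectorised arithmetic and smoother limiting, but the routing logic stays the same.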

A platform for OTT streaming

We offer off-the-shelf server software tailored for operators and broadcasters who aggregate video streams on their servers and distribute them to end users.

What our solution does:

- Receives and transcodes video streams from various sources.

- Creates adaptive bitrate profiles for seamless playback across devices.

- Serves subscribers via TV, set-top boxes, mobile apps, and web platforms.

- Monitors all transmitting and receiving devices in real-time.

- Manages device settings and configurations centrally.

- Handles user authentication, session control, and access rights.

- Enables monetisation through banner ads, subscription and pay-per-view models, commercial partnerships and sponsored content.

Additionally, the system supports regional content delivery, allowing operators to receive and manage streams from CCTV cameras and other sources across distributed locations. This ensures flexible deployment in both urban and remote environments.
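As an illustration of the adaptive bitrate profiles mentioned in the feature list, the sketch below builds a master playlist that advertises several renditions of one stream to the player. It assumes HLS as the delivery format, which the text does not specify, and the profile values and URIs are hypothetical.

```python
def master_playlist(profiles):
    """Build an HLS master playlist listing renditions from lowest to highest bitrate."""
    lines = ["#EXTM3U"]
    for p in sorted(profiles, key=lambda p: p["bandwidth"]):
        lines.append(
            f'#EXT-X-STREAM-INF:BANDWIDTH={p["bandwidth"]},'
            f'RESOLUTION={p["width"]}x{p["height"]}'
        )
        lines.append(p["uri"])
    return "\n".join(lines) + "\n"


# Hypothetical bitrate ladder; bandwidth is in bits per second.
profiles = [
    {"bandwidth": 5_000_000, "width": 1920, "height": 1080, "uri": "1080p/index.m3u8"},
    {"bandwidth": 800_000, "width": 640, "height": 360, "uri": "360p/index.m3u8"},
    {"bandwidth": 2_500_000, "width": 1280, "height": 720, "uri": "720p/index.m3u8"},
]
print(master_playlist(profiles))
```

The player picks a rendition from this list based on measured throughput and switches between them as network conditions change.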

Application for PRO camera


We designed a Linux Qt-based application to manage essential camera settings directly from an embedded display. The application offers a user-friendly interface that allows operators to adjust key parameters such as exposure, white balance, focus, and zoom in real-time. Built for embedded Linux platforms, it ensures efficient performance and seamless integration with the camera hardware. This solution simplifies on-site camera control, reducing the need for external devices and improving workflow efficiency.

Additionally, we developed a web application that runs on an HTTP server within the host device. The web application has a unified interface, ensuring a seamless and consistent user experience. Users can access the web application online once they connect a host device to the Internet. The software enables the control of multiple cameras, including all settings, and facilitates the loading and saving of predefined configurations.

AI-powered content analysis and filtering

We created an advanced neural model based on YOLOv5 and YOLOv8 to recognise distinct actions in video streams, such as smoking, mobile phone use, and mask-wearing.

- The model was trained on more than 12K labelled images and fine-tuned to reduce processing latency tenfold.

Our solution facilitates targeted advertising filtration and enables video censorship and content categorisation.
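Downstream of the detector, filtering and categorisation can be as simple as thresholding per-frame detections against a watch list. The sketch below shows that post-processing step only; the label names, the `(label, confidence)` tuple format, and the 0.5 threshold are assumptions for illustration and do not describe the actual model output.

```python
def flag_frame(detections, watched, min_confidence=0.5):
    """Return the sorted watched labels detected with enough confidence."""
    return sorted({
        label
        for label, confidence in detections
        if label in watched and confidence >= min_confidence
    })


# Hypothetical detector output for one frame: (label, confidence) pairs.
detections = [("smoking", 0.82), ("phone", 0.40), ("person", 0.95)]
watched = {"smoking", "phone", "no_mask"}

flags = flag_frame(detections, watched)
print(flags if flags else "frame is clean")
```

A frame with a non-empty result could then be blurred, skipped, or tagged, depending on whether the goal is censorship or content categorisation.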

Why Promwad

We specialise in end-to-end development for broadcast technology, offering:

- Software solutions for audio/video processing and broadcasting systems.

- Hardware and firmware engineering for embedded media devices.

- Custom UI/UX design tailored to professional workflows.

Our solutions power:

- Broadcast dashboards & control panels

- LCD management systems

- Professional video editing tools

- Remote production interfaces

- Real-time video/audio processing

Conclusion: The Future of AV-over-IP

The move to Ethernet-based AV transport is revolutionising the industry. While traditional AV standards still play a role, IP-based solutions drive the future, offering scalability, flexibility, and long-term interoperability.

- ST 2110 dominates in broadcasting, offering precision, scalability, and interoperability.

- IPMX bridges the gap between broadcast and Pro AV, making high-end media transport more accessible.

- NDI and Dante provide software-driven live production, streaming, and audio networking solutions.

As AV-over-IP technology continues to evolve, the focus will remain on improving efficiency, reducing costs, and ensuring seamless connectivity across diverse media environments. The future of broadcast and AV is firmly rooted in IP-based workflows, setting the stage for greater innovation and integration in the years to come.

Ready to transition to AV-over-IP? Promwad offers free workflow audits to match the right protocol to your needs.
