CXL Technology: A Scalable Solution to Increase Data Center Performance

By Denis Petronenko, Head of Telecom Unit at Promwad. Here we share his guest column published on thefastmode.com, a leading independent research and media brand providing breaking news, analysis, and insights for the global IT and telecommunications sector.

As AI models become more complex, they need more memory and faster processing speeds. Training AI models like ChatGPT consumes as much energy as thousands of households. Data centers account for about 1-1.5% of global electricity use, according to the International Energy Agency (IEA).

Data centers can't always keep up with the load and need smarter solutions. Below, we show how CXL technology can help overcome these data and energy challenges.

What is CXL, and how does it manage data?

Engineers aim to build scalable systems, like supercomputers, that can handle any task. NVIDIA dominates the market thanks to its scalable architecture based on GPUs for complex tasks and active use of open-source code. However, this is not the final solution. NVIDIA's success is driven by the high demand for computing resources, AI, cryptocurrencies, and cloud services, but it is built on its proprietary architecture.

CXL, which stands for Compute Express Link, provides a flexible and scalable alternative. It is an open standard for connecting CPUs, devices, and memory at high speed and capacity. PCI Express (PCIe) technology, which connects internal components like graphics cards and storage controllers to the motherboard, forms the foundation of CXL.

A consortium of tech companies, including Intel, AMD, and Arm, develops this technology, with Microchip Technology among its active participants.

CXL is not limited to one specific tool and can work with different platforms, including NVIDIA products and other chips. It combines various tools into a single, scalable platform.

Compared with other architectures, CXL stands out in scalability. Many companies produce chips, but managing heterogeneous hardware in software is challenging. CXL links all nodes into one unified architecture in which each part can be tuned individually, and recent CXL updates, including new standards and chips, make this possible. Data moves point-to-point at high speed with minimal energy use, which is one of CXL's main benefits.

The CXL 3.1 specification is now available and builds on previous versions with improved resource efficiency. The technology also provides secure on-demand computing environments, extends memory partitioning and pooling to minimize unused memory, and supports memory sharing between GPU accelerators.

CXL components

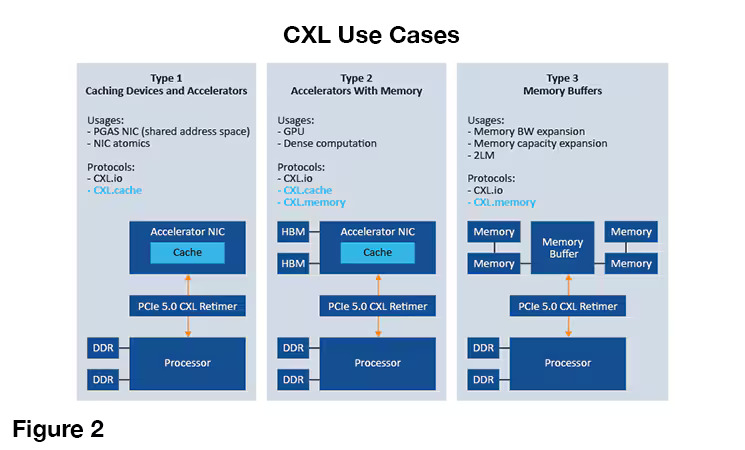

The CXL standard includes three main protocols:

- CXL.cache lets a device such as a GPU or FPGA coherently cache data from host memory, work on it locally, and then return it to the main processor for further processing.

- CXL.mem lets the host access memory attached via CXL with ordinary load/store operations, so software can use it much like regular memory and with far lower latency than storage-backed alternatives.

- CXL.io is based on the existing PCIe standard, with adjustments to improve performance in CXL applications. It handles device discovery, configuration, and conventional I/O.
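One way to picture how the three protocols combine is the device-type classification used in the CXL specification, which this article does not otherwise cover: Type 1 devices use CXL.io and CXL.cache, Type 2 devices use all three protocols, and Type 3 devices (memory expanders) use CXL.io and CXL.mem. The Python sketch below only models that mapping; the names `Protocol`, `DEVICE_TYPES`, and `protocols_of` are illustrative assumptions, not part of any real CXL software API.

```python
from enum import Flag, auto


class Protocol(Flag):
    IO = auto()     # CXL.io: PCIe-based discovery, configuration, I/O
    CACHE = auto()  # CXL.cache: device coherently caches host memory
    MEM = auto()    # CXL.mem: host load/store access to device-attached memory


# Mapping of CXL device types to the protocols they use.
# The dictionary keys are hypothetical labels chosen for this sketch.
DEVICE_TYPES = {
    "type1": Protocol.IO | Protocol.CACHE,                 # caching accelerator
    "type2": Protocol.IO | Protocol.CACHE | Protocol.MEM,  # accelerator with its own memory
    "type3": Protocol.IO | Protocol.MEM,                   # memory expander
}


def protocols_of(device_type: str) -> list[str]:
    """Return the names of the protocols a device type uses."""
    flags = DEVICE_TYPES[device_type]
    return [p.name for p in Protocol if p in flags]


if __name__ == "__main__":
    for name in DEVICE_TYPES:
        print(name, protocols_of(name))
```

Every device type includes CXL.io, which reflects its role as the PCIe-compatible baseline that the other two protocols build on.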

CXL benefits

CXL is flexible: a CXL-capable port selects either the PCIe or the CXL protocol automatically, depending on what the connected device supports. In this way, a wide range of configurations can be created with the same hardware.
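The fallback behaviour can be pictured with a small logical model. In real systems the choice is made in hardware when the link is brought up, so the Python below is only an illustration under that simplifying assumption; `Device` and `negotiate_link` are hypothetical names, not a driver interface.

```python
from dataclasses import dataclass


@dataclass
class Device:
    name: str
    supports_cxl: bool  # assumption: a single capability flag per device


def negotiate_link(host_supports_cxl: bool, device: Device) -> str:
    """Pick the protocol for one slot: CXL only if both ends support it."""
    if host_supports_cxl and device.supports_cxl:
        return "CXL"
    return "PCIe"  # plain PCIe remains available on the same physical slot


if __name__ == "__main__":
    slots = [
        Device("memory expander", supports_cxl=True),
        Device("legacy NIC", supports_cxl=False),
    ]
    for dev in slots:
        print(f"{dev.name}: {negotiate_link(True, dev)}")
```

The point of the sketch is that the same slot can serve both kinds of device, which is what allows mixed configurations on identical hardware.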

What else to expect?

- Improved capacity and dynamic resource sharing

- Streamlined performance for AI/ML and analytics

- Reduced latency

- Flexible, scalable infrastructure

- Energy efficiency

- Cost savings

- Optimized resource management

- System resilience

- Coherent memory access

- Compatibility with new technologies

With CXL, data centers get high-speed, low-latency connections between processors, memory, and network devices.

CXL use cases

CXL technology is suitable for data centers and high-performance computing.

A cloud service provider may use CXL to pool memory resources from many servers and divide them into different virtual machines. CXL streamlines AI model training, making it a must-have tool for research labs that develop AI models. In enterprises, CXL can simplify workloads and manage large-scale applications.
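To make the pooling argument concrete, the sketch below compares fixed per-server memory with a shared pool for the same set of workloads. It is a deliberately simplified model: it assumes every server contributes all of its memory to the pool, ignores latency differences between local and pooled memory, and uses made-up capacity and demand figures.

```python
def fixed_allocation(capacity_gb: int, demands_gb: list[int]) -> tuple[int, int]:
    """Each workload is confined to one server with `capacity_gb` of memory.

    Returns (unmet demand, idle memory) in GB, summed over all servers.
    """
    unmet = sum(max(0, d - capacity_gb) for d in demands_gb)
    idle = sum(max(0, capacity_gb - d) for d in demands_gb)
    return unmet, idle


def pooled_allocation(capacity_gb: int, demands_gb: list[int]) -> tuple[int, int]:
    """All servers' memory forms one pool shared by every workload."""
    total_capacity = capacity_gb * len(demands_gb)
    total_demand = sum(demands_gb)
    unmet = max(0, total_demand - total_capacity)
    idle = max(0, total_capacity - total_demand)
    return unmet, idle


if __name__ == "__main__":
    demands = [100, 300, 150, 250]  # hypothetical per-VM memory needs, GB
    print(fixed_allocation(256, demands))   # (44, 268)
    print(pooled_allocation(256, demands))  # (0, 224)
```

With fixed allocation, one workload is 44 GB short while 268 GB sits idle on other servers. With pooling, the same hardware covers every workload and the idle capacity remains available for new virtual machines.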

CXL also works well for video processing, although there are certain limitations. For example, the distribution of 8K panoramic and stereoscopic images is currently limited by hardware and transport systems. Users can tolerate a few seconds of delay to obtain high-quality images, but in practice even this can be problematic without a powerful lab setup. A provider whose core is built on a scalable system can offer scalable services much more easily.

Beyond 8K video, CXL suits workloads that depend critically on processing latency: trading, exchange, and banking processing centers, as well as crypto and billing processing centers.

Image Credit: Microchip Technology Inc.

Comparing CXL with existing technologies

| Feature | CXL | PCIe-based multi-CPU host | NVLink | OpenCAPI | Ethernet-based multi-host |
| --- | --- | --- | --- | --- | --- |
| Direct CPU-to-Accelerator Communication | Yes | No (typically needs targeted DPDK for the host) | Yes | Yes | No (needs extra effort and inflexible hardware to build using RDMA/DPDK/SPDK) |
| Memory Access | Shared, accelerator, CPU, network | CPU | Accelerator | Shared, accelerator, CPU | No (needs extra effort and inflexible hardware to build using RDMA/DPDK/SPDK) |
| Scalability | High | High but can become limited | High | High | High |
| Flexibility | High | High | Moderate (primarily for NVIDIA GPUs) | High | High for general networking |
| Power Efficiency | Good | Moderate | Good | Good | Moderate |
| Target Use Cases | AI, ML, HPC | General-purpose I/O | AI, ML, HPC (primarily NVIDIA GPUs) | AI, ML, HPC | General networking |

CXL provides a more efficient, flexible, and scalable solution for connecting CPUs to accelerators. Direct communication, shared memory, and higher bandwidth make it indispensable for workloads in AI/ML and high-performance computing.

High-performance computing with CXL in practice

Let's look at a specific application example that could be implemented with CXL. Providers of solutions for high-performance computing and cloud infrastructure face a challenge: the growing demand for high-speed data processing and the inability to meet it with the existing infrastructure based on traditional PCIe connections.

CXL makes it possible to design a scalable server system that solves these problems. The hardware and software solution can be based on the COM-HPC standard, Switchtec™ Gen 5 PCIe switches by Microchip, GPUs, and Mellanox NIC PCIe cards by NVIDIA.

The result is a high-performance, reliable server system that withstands high loads and scales to meet growing needs. Here is an example of the characteristics such a system can achieve:

- data transfer rates up to 800 Gbps;

- storage capacity up to 8192 TB;

- support for multiple devices through a single host thanks to CXL 2.0;

- reduced power consumption through optimized memory access and resource allocation.
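To put the first two figures in perspective, a quick back-of-the-envelope calculation helps. The sketch below assumes decimal units (1 TB = 10^12 bytes, 1 Gbps = 10^9 bits per second), treats 800 Gbps as a sustained aggregate rate, and ignores protocol overhead, so real transfers would take longer; `transfer_seconds` is a helper written for this illustration.

```python
def transfer_seconds(size_tb: float, rate_gbps: float) -> float:
    """Ideal time in seconds to move `size_tb` terabytes at `rate_gbps`."""
    bits = size_tb * 1e12 * 8          # terabytes -> bits
    return bits / (rate_gbps * 1e9)    # bits / (bits per second)


if __name__ == "__main__":
    one_tb = transfer_seconds(1, 800)
    full_store = transfer_seconds(8192, 800)
    print(f"1 TB at 800 Gbps: {one_tb:.0f} s")
    print(f"8192 TB at 800 Gbps: {full_store / 3600:.1f} h")
```

Under these assumptions, 800 Gbps corresponds to 100 GB per second, so 1 TB moves in about 10 seconds and the full 8192 TB would take roughly 22.8 hours.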

Wrapping up

CXL technology gives data centers a way to improve performance while lowering energy costs, which positions it as a cost-effective alternative to other solutions. The technology increases speed and efficiency, and it works well in cases where the extensive computational power needed for GenAI model training is not required. CXL also helps companies reduce energy expenses and supports sustainable technology initiatives.
